A guest post by the XR Development team at KDDI & Alpha-U

Please note that the information, uses, and applications expressed in the post below are solely those of our guest author, KDDI.

KDDI is integrating text-to-speech & Cloud Rendering to virtual human ‘Metako’

VTubers, or virtual YouTubers, are online entertainers who use a virtual avatar generated using computer graphics. This digital trend originated in Japan in the mid-2010s and has become an international online phenomenon. A majority of VTubers are English- and Japanese-speaking YouTubers or live streamers who use avatar designs.

KDDI, a telecommunications operator in Japan with over 40 million customers, wanted to experiment with various technologies built on its 5G network but found that getting accurate movements and human-like facial expressions in real time was challenging.

Creating virtual humans in real-time

Announced at Google I/O 2023 in May, the MediaPipe Face Landmarker solution detects facial landmarks and outputs blendshape scores to render a 3D face model that matches the user. With the MediaPipe Face Landmarker solution, KDDI and the Google Partner Innovation team successfully brought realism to their avatars.

Technical Implementation

Using MediaPipe’s powerful and efficient Python package, KDDI developers were able to detect the performer’s facial features and extract 52 blendshapes in real time.

import mediapipe as mp
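As a rough sketch of this step, the snippet below shows one way to read blendshape scores with the MediaPipe Tasks Python API. It assumes a `face_landmarker.task` model file has been downloaded locally. The helper names `blendshapes_to_dict` and `detect_blendshapes` are illustrative and are not taken from KDDI's code.

```python
from typing import Dict, Iterable


def blendshapes_to_dict(categories: Iterable) -> Dict[str, float]:
    """Map blendshape categories to {name: score}, keeping the model's order."""
    return {c.category_name: float(c.score) for c in categories}


def detect_blendshapes(image_path: str,
                       model_path: str = "face_landmarker.task") -> Dict[str, float]:
    """Run Face Landmarker on one image; returns {} if no face is found."""
    # Imported lazily so the helper above stays usable without MediaPipe installed.
    import mediapipe as mp
    from mediapipe.tasks import python as mp_python
    from mediapipe.tasks.python import vision

    options = vision.FaceLandmarkerOptions(
        base_options=mp_python.BaseOptions(model_asset_path=model_path),
        output_face_blendshapes=True,  # blendshape scores must be requested explicitly
        num_faces=1,
    )
    with vision.FaceLandmarker.create_from_options(options) as landmarker:
        result = landmarker.detect(mp.Image.create_from_file(image_path))
    if not result.face_blendshapes:
        return {}
    return blendshapes_to_dict(result.face_blendshapes[0])
```

This version processes a single still image to keep the example short. A live camera feed would more likely use the task's video or live-stream running mode, which is not shown here.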

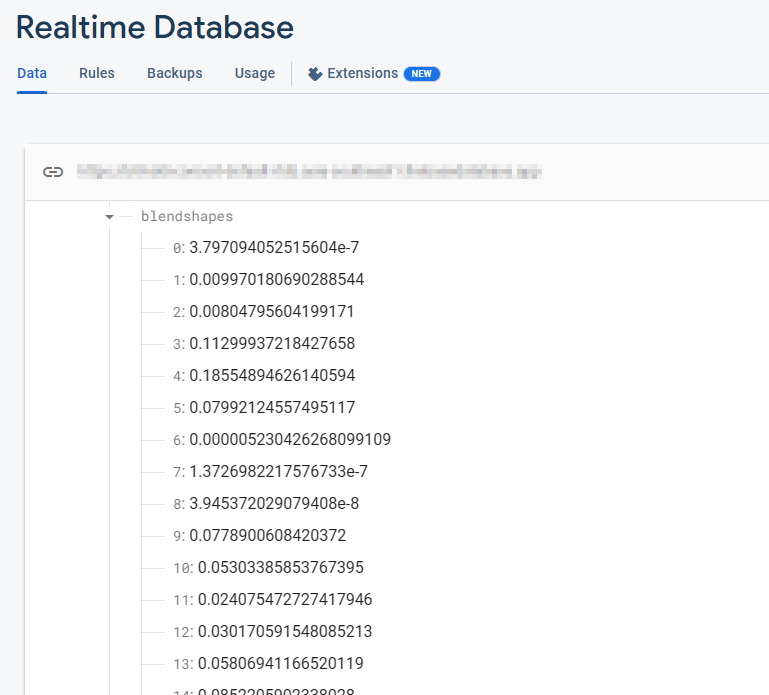

The Firebase Realtime Database stores a collection of 52 blendshape float values. Each row corresponds to a specific blendshape, listed in order.

_neutral,

These blendshape values are continuously updated in real time while the camera is open and the FaceMesh model is running. With each frame, the database reflects the latest blendshape values, capturing the dynamic changes in facial expressions as detected by the FaceMesh model.
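To make the row layout concrete, here is a minimal sketch of how one frame of scores could be turned into ordered rows. The zero-padded index keys, the rounding, and the example names are assumptions for illustration. The post itself only states that 52 float values are stored in order.

```python
from typing import Dict, Sequence, Tuple

EXPECTED_BLENDSHAPES = 52  # count stated in the post


def frame_to_rows(scores: Sequence[Tuple[str, float]],
                  expected: int = EXPECTED_BLENDSHAPES) -> Dict[str, float]:
    """Turn ordered (name, score) pairs into index-keyed rows.

    Keys are zero-padded ("00", "01", ...) so that sorting the keys
    reproduces the blendshape order. This key scheme is hypothetical.
    """
    if len(scores) != expected:
        raise ValueError(f"expected {expected} blendshapes, got {len(scores)}")
    return {f"{i:02d}": round(float(score), 4)
            for i, (_name, score) in enumerate(scores)}


# Three arbitrary example values; a real frame would carry all 52.
frame = [("_neutral", 0.0), ("browDownLeft", 0.12), ("jawOpen", 0.8)]
print(frame_to_rows(frame, expected=3))
```

Because every frame produces a full set of rows, writing the result to the same database path simply replaces the previous frame's values.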

After extracting the blendshapes data, the next step involves transmitting it to the Firebase Realtime Database. Leveraging this database ensures a seamless flow of real-time data to the clients, eliminating concerns about server scalability and enabling KDDI to focus on delivering a streamlined user experience.

import concurrent.futures
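One plausible way to keep uploads from stalling the capture loop is to hand each frame to a worker thread. The sketch below uses `concurrent.futures` together with the Realtime Database REST interface (an HTTP `PUT` to a `.json` URL). The class name `BlendshapePublisher`, the drop-if-busy policy, and the placeholder URL are assumptions, and authentication is omitted.

```python
import concurrent.futures
import json
import urllib.request
from typing import Callable, Dict, Optional


def put_json(url: str, payload: Dict[str, float], timeout: float = 2.0) -> int:
    """Overwrite the node at `url` with `payload` using an HTTP PUT."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


class BlendshapePublisher:
    """Send frames on a worker thread so publish() returns immediately."""

    def __init__(self, url: str,
                 send: Callable[[str, Dict[str, float]], int] = put_json) -> None:
        self._url = url
        self._send = send
        self._pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
        self._pending: Optional[concurrent.futures.Future] = None

    def publish(self, rows: Dict[str, float]) -> bool:
        """Queue a frame; skip it if the previous upload is still in flight."""
        if self._pending is not None and not self._pending.done():
            return False  # only the newest state matters, so a skipped frame is acceptable
        self._pending = self._pool.submit(self._send, self._url, dict(rows))
        return True

    def close(self) -> None:
        """Wait for any in-flight upload, then stop the worker."""
        self._pool.shutdown(wait=True)
```

A capture loop would create one publisher, for example `BlendshapePublisher("https://<your-database>.firebaseio.com/blendshapes.json")`, call `publish(rows)` once per frame, and call `close()` on exit.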

Next, developers transmit the blendshapes data from the Firebase Realtime Database to Google Cloud’s Immersive Stream for XR instances in real time. Google Cloud’s Immersive Stream for XR is a managed service that runs Unreal Engine projects in the cloud, then renders and streams immersive photorealistic 3D and Augmented Reality (AR) experiences to smartphones and browsers in real time.

This integration enables KDDI to drive character face animation and achieve real-time streaming of facial animation with minimal latency, ensuring an immersive user experience.

On the Unreal Engine side, which runs on Immersive Stream for XR, we use the Firebase C++ SDK to receive data from Firebase. By establishing a database listener, we can immediately retrieve blendshape values as soon as updates occur in the Firebase Realtime Database table. This integration allows for real-time access to the latest blendshape data, enabling dynamic and responsive facial animation in Unreal Engine projects.

After retrieving blendshape values from the Firebase SDK, we can drive the face animation in Unreal Engine by using the “Modify Curve” node in the animation blueprint. Each blendshape value is assigned to the character individually on every frame, allowing for precise and real-time control over the character’s facial expressions.
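The per-frame assignment can be pictured as a simple mapping from received values to named animation curves. The sketch below is written in Python purely for illustration, since the actual logic lives in an Unreal animation blueprint. The curve names, the fallback to 0.0, and the clamping step are all assumptions.

```python
from typing import Dict, Mapping


def to_curve_values(blendshapes: Mapping[str, float],
                    curve_names: Mapping[str, str]) -> Dict[str, float]:
    """Return {curve_name: value} for one frame, one entry per mapped blendshape.

    Missing blendshapes fall back to 0.0 and values are clamped to [0, 1],
    so a dropped or noisy update cannot push a curve out of range.
    """
    curves: Dict[str, float] = {}
    for source_name, curve_name in curve_names.items():
        value = float(blendshapes.get(source_name, 0.0))
        curves[curve_name] = min(1.0, max(0.0, value))
    return curves


# Hypothetical mapping from blendshape names to the character's curve names.
CURVE_NAMES = {"jawOpen": "JawOpen", "eyeBlinkLeft": "EyeBlink_L"}
print(to_curve_values({"jawOpen": 1.2, "eyeBlinkLeft": 0.4}, CURVE_NAMES))
```

Keeping the name mapping in one table makes it easier to retarget the same incoming data to a character whose curves are named differently.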

An effective approach for implementing a realtime database listener in Unreal Engine is to use the GameInstance Subsystem, which serves as an alternative to the singleton pattern. This allows for the creation of a dedicated BlendshapesReceiver instance responsible for handling the database connection, authentication, and continuous data reception in the background.

By leveraging the GameInstance Subsystem, the BlendshapesReceiver instance can be instantiated and maintained throughout the lifespan of the game session. This ensures a persistent database connection while the animation blueprint reads and drives the face animation using the received blendshape data.

Using just a local PC running MediaPipe, KDDI succeeded in capturing the real performer’s facial expression and movement, and created high-quality 3D re-target animation in real time.
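The receiver pattern described above can be approximated outside Unreal as a single long-lived object that accepts updates in the background and exposes the latest values to whoever reads them each frame. The following Python sketch is an analogy only: the real implementation is an Unreal C++ subsystem, and the method names `get`, `on_value_changed`, `latest`, and `shutdown` are hypothetical. The database connection and authentication are left out.

```python
import queue
import threading
from typing import Dict, Optional


class BlendshapesReceiver:
    """One instance per session: collects updates in the background, keeps the latest."""

    _instance: Optional["BlendshapesReceiver"] = None
    _instance_lock = threading.Lock()

    def __init__(self) -> None:
        self._updates: "queue.Queue[Optional[Dict[str, float]]]" = queue.Queue()
        self._latest: Dict[str, float] = {}
        self._lock = threading.Lock()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    @classmethod
    def get(cls) -> "BlendshapesReceiver":
        """Return the shared instance, creating it on first use."""
        with cls._instance_lock:
            if cls._instance is None:
                cls._instance = cls()
            return cls._instance

    def on_value_changed(self, values: Dict[str, float]) -> None:
        """Stand-in for the callback a database listener would invoke."""
        self._updates.put(dict(values))

    def latest(self) -> Dict[str, float]:
        """Snapshot of the most recent values, read once per animation frame."""
        with self._lock:
            return dict(self._latest)

    def _run(self) -> None:
        while True:
            values = self._updates.get()
            if values is None:  # sentinel posted by shutdown()
                break
            with self._lock:
                self._latest.update(values)

    def shutdown(self) -> None:
        """Process any queued updates, then stop the worker."""
        self._updates.put(None)
        self._worker.join()
```

Separating the writer (`on_value_changed`) from the reader (`latest`) means the animation code never waits on the network. It only ever sees the most recent complete snapshot.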

Getting started

To learn more, watch the Google I/O 2023 sessions Easy on-device ML with MediaPipe, Supercharge your web app with machine learning and MediaPipe, and What’s new in machine learning, and check out the official documentation at developers.google.com/mediapipe.

What’s next?

This MediaPipe integration is one example of how KDDI is eliminating the boundary between the real and virtual worlds, allowing users to enjoy everyday experiences such as attending live music performances, enjoying art, having conversations with friends, and shopping, anytime and anywhere.

KDDI’s αU provides services for the Web3 era, including the metaverse, live streaming, and virtual shopping, shaping an ecosystem where anyone can become a creator and supporting the new generation of users who effortlessly move between the real and virtual worlds.