OpenAI CEO Sam Altman responded to a request by the Federal Trade Commission as part of an investigation to determine if the company “engaged in unfair or deceptive” practices relating to privacy, data security, and risks of consumer harm, particularly related to reputation.

it is very disappointing to see the FTC’s request start with a leak and does not help build trust.

that said, it’s super important to us that out technology is safe and pro-consumer, and we’re confident we follow the law. of course we will work with the FTC.

— Sam Altman (@sama) July 13, 2023

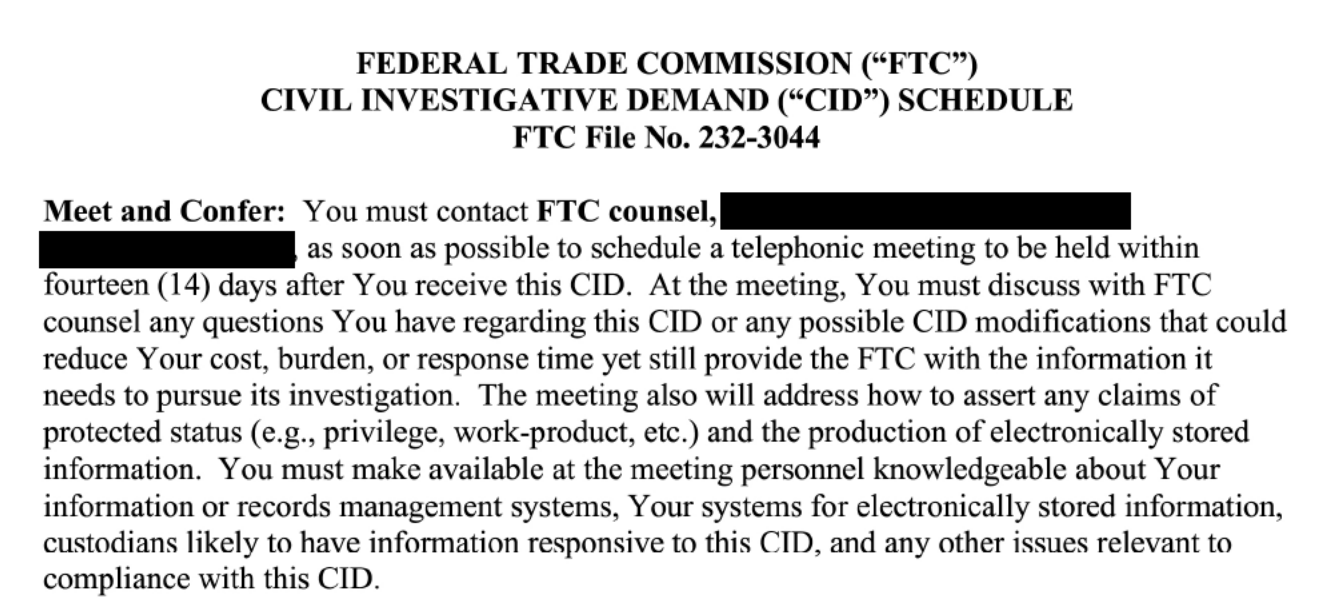

The FTC has requested information from OpenAI dating back to June 2020, as revealed in a leaked document obtained by the Washington Post.

Screenshot from Washington Post, July 2023

The subject of investigation: did OpenAI violate Section 5 of the FTC Act?

The documentation OpenAI must provide should include details about large language model (LLM) training, refinement, reinforcement through human feedback, response reliability, and policies and practices surrounding consumer privacy, security, and risk mitigation.

we’re transparent about the limitations of our technology, especially when we fall short. and our capped-profits structure means we aren’t incentivized to make unlimited returns.

— Sam Altman (@sama) July 13, 2023

The FTC’s Growing Concern Over Generative AI

The investigation into a major AI company’s practices comes as no surprise. The FTC’s interest in generative AI risks has been growing since ChatGPT skyrocketed into popularity.

Attention To Automated Decision-Making Technology

In April 2021, the FTC published guidance on artificial intelligence (AI) and algorithms, warning companies to ensure their AI systems comply with consumer protection laws.

It noted Section 5 of the FTC Act, the Fair Credit Reporting Act, and the Equal Credit Opportunity Act as laws important to AI developers and users.

The FTC cautioned that algorithms built on biased data or flawed logic could lead to discriminatory outcomes, even if unintended.

The FTC outlined best practices for ethical AI development based on its experience enforcing laws against unfair practices, deception, and discrimination.

Recommendations include testing systems for bias, enabling independent audits, limiting overstated marketing claims, and weighing societal harm versus benefits.

“If your algorithm results in credit discrimination against a protected class, you could find yourself facing a complaint alleging violations of the FTC Act and ECOA,” the guidance warns.

AI In Check

The FTC reminded AI companies about its AI guidance from 2021 with regard to making exaggerated or unsubstantiated marketing claims regarding AI capabilities.

In the post from February 2023, the agency warned marketers against getting swept up in AI hype and making promises their products cannot deliver.

Common issues cited: claiming that AI can do more than current technology allows, making unsupported comparisons to non-AI products, and failing to test for risks and biases.

The FTC stressed that false or deceptive marketing constitutes illegal conduct regardless of the complexity of the technology.

The reminder came a few weeks after OpenAI’s ChatGPT reached 100 million users.

The FTC also posted a job listing for technologists around this time – including those with AI expertise – to support investigations, policy initiatives, and research on consumer protection and competition in the tech industry.

Deepfakes And Deception

About a month later, in March, the FTC warned that generative AI tools like chatbots and deepfakes could facilitate widespread fraud if deployed irresponsibly.

It cautioned developers and companies using synthetic media and generative AI to consider the inherent risks of misuse.

The agency said bad actors can leverage the realistic but fake content from these AI systems for phishing scams, identity theft, extortion, and other harm.

While some uses may be beneficial, the FTC urged businesses to weigh making or selling such AI tools given foreseeable criminal exploitation.

The FTC advised that companies continuing to develop or use generative AI should take robust precautions to prevent abuse.

It also warned against using synthetic media in misleading marketing and failing to disclose when consumers interact with AI chatbots versus real people.

AI Spreads Malicious Software

In April, the FTC revealed how cybercriminals exploited interest in AI to spread malware through fake ads.

The bogus ads promoted AI tools and other software on social media and search engines.

Clicking these ads led users to cloned sites that downloaded malware or exploited backdoors to infect devices undetected. The stolen information was then sold on the dark web or used to access victims’ online accounts.

To avoid being hacked, the FTC advised not clicking on software ads.

If infected, users should update security tools and operating systems, then follow steps to remove malware or recover compromised accounts.

The FTC cautioned the public to be wary of cybercriminals growing more sophisticated at spreading malware through advertising networks.

Federal Agencies Unite To Tackle AI Regulation

Near the end of April, four federal agencies – the Consumer Financial Protection Bureau (CFPB), the Department of Justice’s Civil Rights Division (DOJ), the Equal Employment Opportunity Commission (EEOC), and the FTC – released a statement on how they would monitor AI development and enforce laws against discrimination and bias in automated systems.

The agencies asserted authority over AI under existing laws on civil rights, fair lending, equal opportunity, and consumer protection.

Together, they warned AI systems could perpetuate unlawful bias due to flawed data, opaque models, and improper design choices.

The partnership aimed to promote responsible AI innovation that increases consumer access, quality, and efficiency without violating longstanding protections.

AI And Consumer Trust

In May, the FTC warned companies against using new generative AI tools like chatbots to manipulate consumer decisions unfairly.

After describing events from the movie Ex Machina, the FTC claimed that human-like persuasion of AI chatbots could steer people into harmful choices about finances, health, education, housing, and jobs.

Though not necessarily intentional, the FTC said design elements that exploit human trust in machines to trick consumers constitute unfair and deceptive practices under FTC law.

The agency advised firms to avoid over-anthropomorphizing chatbots and ensure disclosures on paid promotions woven into AI interactions.

With generative AI adoption surging, the FTC alert puts companies on notice to proactively assess downstream societal impacts.

Those rushing tools to market without proper ethics review or protections would risk FTC action on resulting consumer harm.

An Opinion On The Risks Of AI

FTC Chair Lina Khan argued that generative AI poses risks of entrenching significant tech dominance, turbocharging fraud, and automating discrimination if unchecked.

In a New York Times op-ed published a few days after the consumer trust warning, Khan said the FTC aims to promote competition and protect consumers as AI expands.

Khan warned that a few powerful companies controlled key AI inputs like data and computing, which could further their dominance absent antitrust vigilance.

She cautioned that realistic fake content from generative AI could facilitate widespread scams. Additionally, biased data risks producing algorithms that unlawfully lock people out of opportunities.

Khan asserted that AI systems, while novel, are not exempt from FTC consumer protection and antitrust authorities. With responsible oversight, Khan noted that generative AI could grow equitably and competitively, avoiding the pitfalls of other tech giants.

AI And Data Privacy

In June, the FTC warned companies that consumer privacy protections apply equally to AI systems reliant on personal data.

In complaints against Amazon and Ring, the FTC alleged unfair and deceptive practices using voice and video data to train algorithms.

FTC Chair Khan said AI’s benefits don’t outweigh the privacy costs of invasive data collection.

The agency asserted consumers retain control over their information even if a company possesses it. Strict safeguards and access controls are expected when employees review sensitive biometric data.

For kids’ data, the FTC said it would fully enforce the children’s privacy law, COPPA. The complaints ordered the deletion of ill-gotten biometric data and any AI models derived from it.

The message for tech firms was clear – while AI’s potential is vast, legal obligations around consumer privacy remain paramount.

Generative AI Competition

Near the end of June, the FTC issued guidance cautioning that the rapid growth of generative AI could raise competition concerns if key inputs come under the control of a few dominant technology firms.

The agency said essential inputs like data, talent, and computing resources are needed to develop cutting-edge generative AI models. The agency warned that if a handful of big tech companies gain too much control over these inputs, they could use that power to distort competition in generative AI markets.

The FTC cautioned that anti-competitive tactics like bundling, tying, exclusive deals, or buying up competitors could allow incumbents to box out emerging rivals and consolidate their lead.

The FTC said it will monitor competition issues surrounding generative AI and take action against unfair practices.

The aim was to enable entrepreneurs to innovate with transformative AI technologies, like chatbots, that could reshape consumer experiences across industries. With the right policies, the FTC believed emerging generative AI can yield its full economic potential.

Suspicious Marketing Claims

In early July, the FTC noted that as AI tools that can generate deepfakes, cloned voices, and artificial text have multiplied, so too have tools claiming to detect such AI-generated content.

However, experts warned that the marketing claims made by some detection tools may overstate their capabilities.

The FTC cautioned companies against exaggerating their detection tools’ accuracy and reliability. Given the limitations of current technology, businesses should ensure marketing reflects realistic assessments of what these tools can and cannot do.

Additionally, the FTC noted that users should be wary of claims that a tool can catch all AI fakes without errors. Imperfect detection could lead to unfairly accusing innocent people like job applicants of creating fake content.

What Will The FTC Discover?

The FTC’s investigation into OpenAI comes amid growing regulatory scrutiny of generative AI systems.

As these powerful technologies enable new capabilities like chatbots and deepfakes, they raise novel risks around bias, privacy, security, competition, and deception.

OpenAI must answer questions about whether it took adequate precautions in developing and releasing models like GPT-3 and DALL-E that have shaped the trajectory of the AI field.

The FTC appears focused on ensuring OpenAI’s practices align with consumer protection laws, especially regarding marketing claims, data practices, and mitigating societal harms.

How OpenAI responds and whether any enforcement actions arise could set significant precedents for regulation as AI advances.

For now, the FTC’s investigation underscores that the hype surrounding AI should not outpace responsible oversight.

Robust AI systems hold great promise but pose risks if deployed without sufficient safeguards.

Major AI companies must ensure new technologies comply with longstanding laws protecting consumers and markets.

Featured image: Ascannio/Shutterstock