It would normally take quite a bit of complex SQL to tease a multi-pronged answer out of Kinetica’s high-speed analytics database, which is powered by GPUs but wire-compatible with Postgres. But with the new natural language interface to ChatGPT unveiled today, non-technical users can get answers to complex questions written in plain English.

Kinetica was incubated by the U.S. Army over a decade ago to pore through huge mounds of fast-moving geospatial and temporal data in search of terrorist activity. By leveraging the processing capability of GPUs, the vector database could run full table scans on the data, whereas other databases were forced to winnow down the data with indexes and other techniques (it has since embraced CPUs with Intel’s AVX-512).

With today’s launch of its new Conversational Query feature, Kinetica’s massive processing capability is now within reach of workers who lack the ability to write complex SQL queries. That democratization of access means executives and others with ad hoc data questions can now leverage the power of Kinetica’s database to get answers.

The vast majority of database queries are planned, which lets organizations write indexes, de-normalize the data, or pre-compute aggregates so that those queries run in a performant manner, says Kinetica co-founder and CEO Nima Negahban.

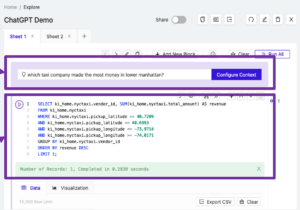

A user can submit a natural language query directly on the Kinetica dashboard, which ChatGPT converts to SQL for execution

“With the advent of generative large language models, we think that that mix is going to change to where a lot bigger portion of it’s going to be ad hoc queries,” Negahban tells Datanami. “That’s really what we do best, is do that ad hoc, complex query against large datasets, because we have that ability to do large scans and leverage many-core compute devices better than other databases.”

Conversational Query works by converting a user’s natural language query into SQL. That SQL conversion is handled by OpenAI’s ChatGPT large language model (LLM), which has proven itself to be a quick learner of languages: spoken, computer, and otherwise. The OpenAI API then returns the finalized SQL, and users can choose to execute it against the database directly from the Kinetica dashboard.

Kinetica is leaning on the ChatGPT model to understand the intent of language, which is something that it’s very good at. For example, to answer the question “Where do people hang out the most?” from a huge database of geospatial data on human movement, ChatGPT is smart enough to know that “hang out” is a synonym for “dwell time,” which is how the data is formally identified in the database. (The answer, by the way, is 7-Eleven.)
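The flow described above (a plain-English question goes in, SQL comes back, and the user decides whether to run it) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `fake_llm` is a canned stand-in for the call to the language model, SQLite stands in for Kinetica, and the `visits` table, its columns, and its rows are invented for the example rather than taken from Kinetica.

```python
import sqlite3
from typing import Callable, List, Optional, Tuple

# Hypothetical schema: the article does not show Kinetica's real table layout.
SCHEMA = "CREATE TABLE visits (place TEXT, dwell_time_min REAL)"


def fake_llm(question: str, schema: str) -> str:
    """Stand-in for the LLM call: returns canned SQL for one known question.

    A real system would send the question and schema to a model instead.
    """
    if "hang out" in question.lower():
        # "hang out" is mapped to the dwell-time column, as in the article's example.
        return (
            "SELECT place, SUM(dwell_time_min) AS total_dwell "
            "FROM visits GROUP BY place ORDER BY total_dwell DESC LIMIT 1"
        )
    raise ValueError("this stub only knows one question")


def conversational_query(
    conn: sqlite3.Connection,
    question: str,
    to_sql: Callable[[str, str], str],
    execute: bool = False,
) -> Tuple[str, Optional[List[tuple]]]:
    """Translate a question to SQL; run it only if the caller opts in."""
    sql = to_sql(question, SCHEMA)
    rows = conn.execute(sql).fetchall() if execute else None
    return sql, rows


# Invented sample rows, purely for demonstration.
conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO visits VALUES (?, ?)",
    [("Cafe A", 30.0), ("Store B", 45.0), ("Cafe A", 25.0)],
)

question = "Where do people hang out the most?"
preview_sql, preview_rows = conversational_query(conn, question, fake_llm)
sql, rows = conversational_query(conn, question, fake_llm, execute=True)
print(sql)
print(rows)
```

Keeping translation and execution as separate steps mirrors the dashboard behavior described in the article, where the generated SQL is shown to the user before anything runs.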

Kinetica is also doing some work ahead of time to prepare ChatGPT to generate good SQL through its “hydration” process, says Chad Meley, Kinetica’s chief marketing officer.

“We have native analytic functions that are callable through SQL and ChatGPT, through part of the hydration process, becomes aware of that,” Meley says. “So it can use a specific time-series join or spatial join that we make ChatGPT aware of. In that way, we go beyond your typical ANSI SQL functions.”
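The article does not describe how hydration is implemented. One plausible reading is that table definitions and notes about the database’s extra SQL functions are placed in the prompt ahead of the user’s question. The sketch below shows that idea only; the `HydrationContext` class, the table definition, and the function names are hypothetical placeholders, not Kinetica’s actual API or function catalog.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HydrationContext:
    """Hypothetical container for what the model is told ahead of time."""

    table_ddl: List[str] = field(default_factory=list)
    function_notes: Dict[str, str] = field(default_factory=dict)

    def to_prompt(self, question: str) -> str:
        """Assemble schema, function notes, and the question into one prompt."""
        lines = ["You translate questions into SQL for the schema below.", "", "Tables:"]
        lines += [f"  {ddl}" for ddl in self.table_ddl]
        lines += ["", "Extra SQL functions you may use:"]
        lines += [f"  {name}: {note}" for name, note in self.function_notes.items()]
        lines += ["", f"Question: {question}", "SQL:"]
        return "\n".join(lines)


# All names below are placeholders invented for this example.
ctx = HydrationContext(
    table_ddl=["CREATE TABLE visits (place TEXT, dwell_time_min REAL, ts TIMESTAMP)"],
    function_notes={
        "HYPOTHETICAL_TIME_SERIES_JOIN": "joins rows whose timestamps fall in a window",
        "HYPOTHETICAL_SPATIAL_JOIN": "joins rows whose locations are near each other",
    },
)

prompt = ctx.to_prompt("Where do people hang out the most?")
print(prompt)
```

The resulting string is what would be sent to the model in place of the bare question, so that the generated SQL can reference functions beyond standard ANSI SQL.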

The SQL generated by ChatGPT isn’t perfect. As many are aware, the LLM is prone to seeing things in the data, the so-called “hallucination” problem. But even though its SQL isn’t completely free of defects, ChatGPT is still quite useful at this stage, says Negahban, who was a 2018 Datanami Person to Watch.

“I’ve seen that it’s kind of good enough,” he says. “It hasn’t been [wildly] wrong in any queries it generates…I think it will be better with GPT-4.”

In the final analysis, by the time a SQL expert has written the perfect seven-way join and gotten it over to the database, the opportunity to act on the data may be gone. That’s why the pairing of a “good enough” query generator with a database as powerful as Kinetica can make a difference for decision-makers, Negahban says.

“Having an engine like Kinetica that can actually do something with that query without having to do planning beforehand” is the big get, he says. “If you try to do some of these queries with the Snowflake, or insert your database du jour, they really struggle because that’s just not what they’re built for. They’re good at other things. What we’re really good at, as an engine, is to do ad hoc queries no matter the complexity, no matter how many tables are involved. So that really pairs nicely with this ability for anyone to generate SQL across all their data asking questions about all the data in their enterprise.”

Conversational Query is available now in the cloud and on-prem versions of Kinetica.

Related Items:

ChatGPT Dominates as Top In-Demand Workplace Skill: Udemy Report

Bank Replaces Hundreds of Spark Streaming Nodes with Kinetica

Preventing the Next 9/11 Goal of NORAD’s New Streaming Data Warehouse
