A new report from Forrester is cautioning enterprises to be on the lookout for five deepfake scams that can wreak havoc. The deepfake scams are fraud, stock price manipulation, reputation and brand, employee experience and HR, and amplification.

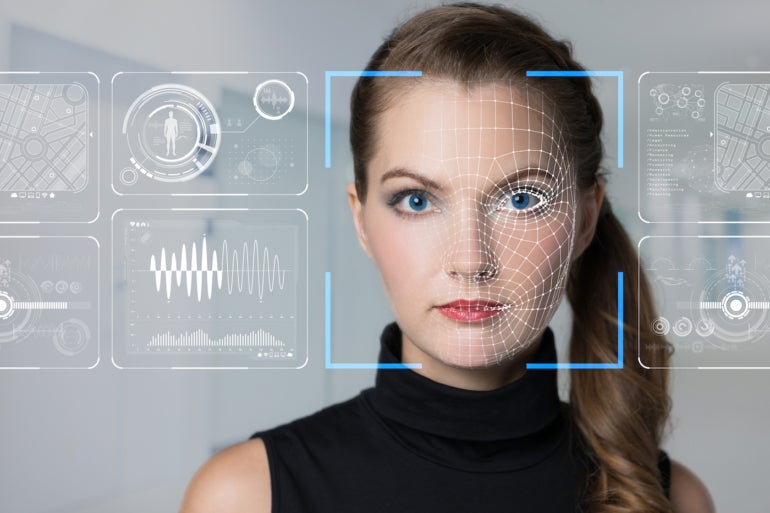

Deepfake is a capability that uses AI technology to create synthetic video and audio content that could be used to impersonate someone, the report’s author, Jeff Pollard, a vice president and principal analyst at Forrester, told TechRepublic.

The difference between deepfake and generative AI is that, with the latter, you type in a prompt to ask a question, and it probabilistically returns an answer, Pollard said. Deepfake “…leverages AI … but it is designed to produce video or audio content as opposed to written answers or responses that a large language model” returns.

Deepfake scams targeting enterprises

These are the five deepfake scams detailed by Forrester.

Fraud

Deepfake technologies can clone faces and voices, the same traits that are used to authenticate and authorize activity, according to Forrester.

“Using deepfake technology to clone and impersonate an individual will lead to fraudulent financial transactions victimizing individuals, but it will also happen in the enterprise,” the report noted.

One example of fraud would be impersonating a senior executive to authorize wire transfers to criminals.

“This scenario already exists today and will increase in frequency soon,” the report cautioned.

Pollard called this the most prevalent type of deepfake “… because it has the shortest path to monetization.”

Stock price manipulation

Newsworthy events can cause stock prices to fluctuate, such as when a well-known executive departs from a publicly traded company. A deepfake of such an announcement could cause stocks to experience a brief price decline, and this could have the ripple effect of impacting employee compensation and the company’s ability to receive financing, the Forrester report said.

Reputation and brand

It’s very easy to create a false social media post of “… a prominent executive using offensive language, insulting customers, blaming partners, and making up information about your products or services,” Pollard said. This scenario creates a nightmare for boards and PR teams, and the report noted that “… it’s all too easy to artificially create this scenario today.”

This could damage the company’s brand, Pollard said, adding that “… it’s, frankly, almost impossible to prevent.”

Employee experience and HR

Another “damning” scenario is when one employee creates a deepfake of nonconsensual pornographic content using the likeness of another employee and circulates it. This can wreak havoc on that employee’s mental health and threaten their career and will “…almost certainly result in litigation,” the report stated.

The motivation is someone thinking it’s funny or looking for revenge, Pollard said. It’s the scam that scares companies the most because it’s “… the most concerning or pernicious long term because it’s the most difficult to prevent,” he said. “It goes against any normal employee behavior.”

Amplification

Deepfakes can be used to spread other deepfake content. Forrester likened this to bots that disseminate content, “… but instead of giving those bots usernames and post histories, we give them faces and emotions,” the report said. Those deepfakes could also be used to create reactions to an original deepfake that was designed to damage a company’s brand, so it’s potentially seen by a broader audience.

Organizations’ best defenses against deepfakes

Pollard reiterated that you can’t prevent deepfakes, which can be easily created by downloading a podcast, for example, and then cloning a person’s voice to make them say something they didn’t actually say.

“There are step-by-step instructions for anyone to do this (the ability to clone a person’s voice) technically,” he noted. But one of the defenses against this “… is to not say and do awful things.”

Further, if the company has a history of being trustworthy, authentic, dependable and transparent, “… it will be difficult for people to believe all of a sudden you’re as awful as a video might make you appear,” he said. “But if you have a track record of not caring about privacy, it’s not hard to make a video of an executive…” saying something damaging.

There are tools that offer integrity, verification and traceability to indicate that something isn’t synthetic, Pollard added, such as FakeCatcher from Intel. “It looks at … blood flow in the pixels in the video to figure out what someone’s thinking when this was recorded.”

But Pollard issued a note of pessimism about detection tools, saying they “… evolve and then adversaries get around them and then they have to evolve again. It’s the age-old story with cybersecurity.”

He stressed that deepfakes aren’t going to go away, so organizations need to think proactively about the possibility that they could become a target. Deepfakes will happen, he said.

“Don’t make the first time you’re thinking about this when it happens. You want to rehearse this and understand it so you know exactly what to do when it happens,” he said. “It doesn’t matter if it’s true – it matters if it’s believed enough for me to share it.”

And a final reminder from Pollard: “This is the internet. Everything lives forever.”