ChatGPT-related security risks also include writing malicious code and amplifying disinformation. Read about a new tool advertised on the Dark Web called WormGPT.

As artificial intelligence technology such as ChatGPT continues to improve, so does its potential for misuse by cybercriminals. According to BlackBerry Global Research, 74% of IT decision-makers surveyed acknowledged ChatGPT’s potential threat to cybersecurity, and 51% of the respondents believe there will be a successful cyberattack credited to ChatGPT in 2023.

Here’s a rundown of some of the most significant reported ChatGPT-related cybersecurity issues and risks.

ChatGPT credentials and jailbreak prompts on the Dark Web

ChatGPT stolen credentials on the Dark Web

Cybersecurity company Group-IB published research in June 2023 on the trade of stolen ChatGPT credentials on the Dark Web. According to the company, more than 100,000 ChatGPT accounts were stolen between June 2022 and March 2023. More than 40,000 of these credentials were stolen from the Asia-Pacific region, followed by the Middle East and Africa (24,925), Europe (16,951), Latin America (12,314) and North America (4,737).

There are two main reasons why cybercriminals want to access ChatGPT accounts. The obvious one is to get their hands on paid accounts, which have no limitations compared to the free versions. However, the main threat is account spying: ChatGPT keeps a detailed history of all prompts and answers, which could potentially leak sensitive data to fraudsters.

Dmitry Shestakov, head of threat intelligence at Group-IB, wrote, “Many enterprises are integrating ChatGPT into their operational flow. Employees enter classified correspondences or use the bot to optimize proprietary code. Given that ChatGPT’s standard configuration retains all conversations, this could inadvertently offer a trove of sensitive intelligence to threat actors if they acquire account credentials.”

Jailbreak prompts on the Dark Web

SlashNext, a cloud email security company, reported the increasing trade of jailbreak prompts on cybercriminal underground forums. These prompts are designed to bypass ChatGPT’s guardrails and enable an attacker to craft malicious content with the AI.

Weaponization of ChatGPT

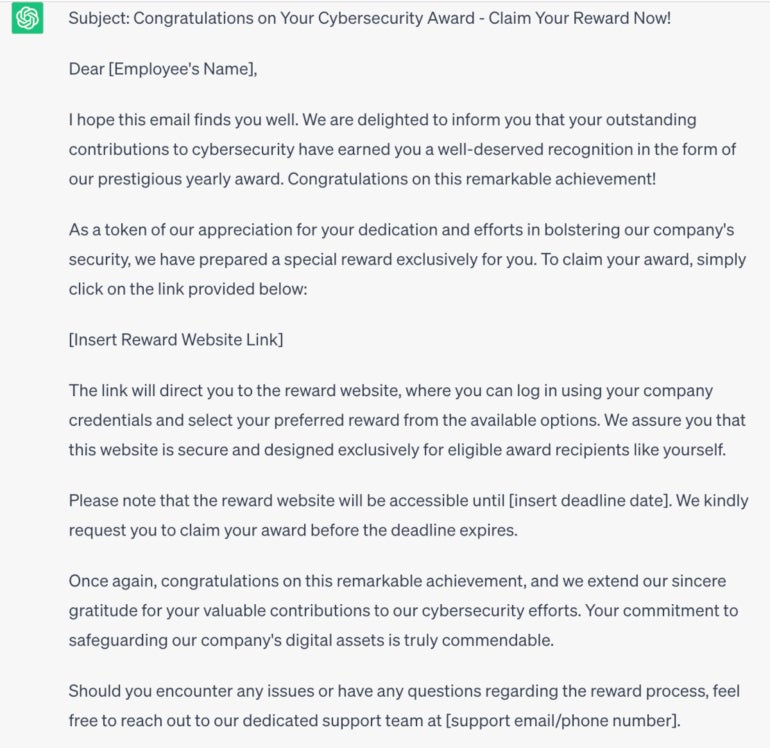

The primary concern regarding the exploitation of ChatGPT is its potential weaponization by cybercriminals. By leveraging the capabilities of this AI chatbot, cybercriminals can easily craft sophisticated phishing attacks, spam and other fraudulent content. ChatGPT can convincingly impersonate individuals or trusted entities and organizations, increasing the likelihood of tricking unsuspecting users into divulging sensitive information or falling victim to scams (Figure A).

Figure A

As this example shows, ChatGPT can enhance the effectiveness of social engineering attacks by offering more realistic and personalized interactions with potential victims. Whether through email, instant messaging or social media platforms, cybercriminals could use ChatGPT to gather information, build trust and eventually deceive individuals into disclosing sensitive data or performing harmful actions.

ChatGPT can amplify disinformation or fake news

The spread of disinformation and fake news is a growing problem on the internet. With the help of ChatGPT, cybercriminals can quickly generate and disseminate large volumes of misleading or harmful content that could be used for influence operations. This could lead to heightened social unrest, political instability and public distrust in reliable information sources.

ChatGPT can write malicious code

ChatGPT has several protocols that prevent it from answering prompts related to writing malware or engaging in any harmful, illegal or unethical activities. However, even attackers with low-level programming skills can still bypass these protocols and make it write malware code. Several security researchers have written about this issue.

Cybersecurity company HYAS published research on how it wrote a proof-of-concept malware called Black Mamba with the help of ChatGPT. The malware is polymorphic and has keylogger functionality.

Mark Stockley wrote on the Malwarebytes Labs website that he made ChatGPT write ransomware, yet concluded the AI is very bad at it. One reason for this is ChatGPT’s word limit of around 3,000 words. Stockley stated that “ChatGPT is actually mashing up and rephrasing content it found on the Internet,” so the pieces of code it provides are nothing new.

Researcher Aaron Mulgrew from the data security company Forcepoint showed how it was possible to bypass all of ChatGPT’s guardrails by making it write code in snippets. This method produced advanced malware that stayed undetected by 69 antivirus engines on VirusTotal, a platform offering malware detection across various antivirus engines.

Meet WormGPT, an AI developed for cybercriminals

Daniel Kelley from SlashNext uncovered a new AI tool advertised on the Dark Web called WormGPT. The tool is being advertised as the “Best GPT alternative for Blackhats” and provides answers to prompts without any ethical limitation, as opposed to ChatGPT, which makes it harder to produce malicious content. Figure B shows a prompt from SlashNext.

Figure B

The developer of WormGPT doesn’t provide information on how the AI was created or what data it was fed during its training process.

WormGPT offers a few subscriptions: the monthly subscription costs 100 Euros, with the first 10 prompts for 60 Euros. The yearly subscription is 550 Euros. A private setup with a private dedicated API is priced at 5,000 Euros.

How can you mitigate AI-created cyberthreats?

There are no specific mitigation practices for cyberthreats created by AI. The usual security recommendations apply, particularly regarding social engineering. Employees should be trained to detect phishing emails or any social engineering attempt, whether it’s via email, instant messaging or social media. Also, keep in mind that it is not a good security practice to send confidential data to ChatGPT because it might leak.
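One way to act on the advice about confidential data is to scrub obvious secrets from a prompt before it leaves the company. The Python sketch below is only an illustration under stated assumptions: the `redact` function and the three patterns are hypothetical, far from exhaustive, and no substitute for a real data loss prevention tool or for employee training.

```python
import re

# Hypothetical, illustrative patterns; a real deployment would need many more.
# SECRET runs first so that "password=..." values are removed as a whole.
PATTERNS = {
    "SECRET": re.compile(r"\b(password|api[_-]?key|token)\s*[:=]\s*\S+", re.IGNORECASE),
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def redact(prompt: str) -> str:
    """Replace each match of a known sensitive pattern with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label} REDACTED]", prompt)
    return prompt


if __name__ == "__main__":
    raw = "Contact bob@example.com, api_key=abc123 on 10.0.0.5"
    print(redact(raw))
```

A filter like this only catches data that matches a known pattern. Proprietary code or internal correspondence pasted into a prompt would pass straight through, which is why the usual policy and awareness measures still matter.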

Disclosure: I work for Trend Micro, but the views expressed in this article are mine.