At this yr’s Information+AI Summit, Databricks SQL continued to push the boundaries of what a knowledge warehouse could be, leveraging AI throughout your entire product floor to increase our management in efficiency and effectivity, whereas nonetheless simplifying the expertise and unlocking new alternatives for our prospects. In parallel, we proceed to ship enhancements to our core information warehousing capabilities that can assist you unify your information stack underneath Lakehouse.

On this weblog publish, we’re thrilled to share the highlights of what is new and coming subsequent in Databricks SQL:

The AI-optimized warehouse: Prepared for all of your workloads – no tuning required

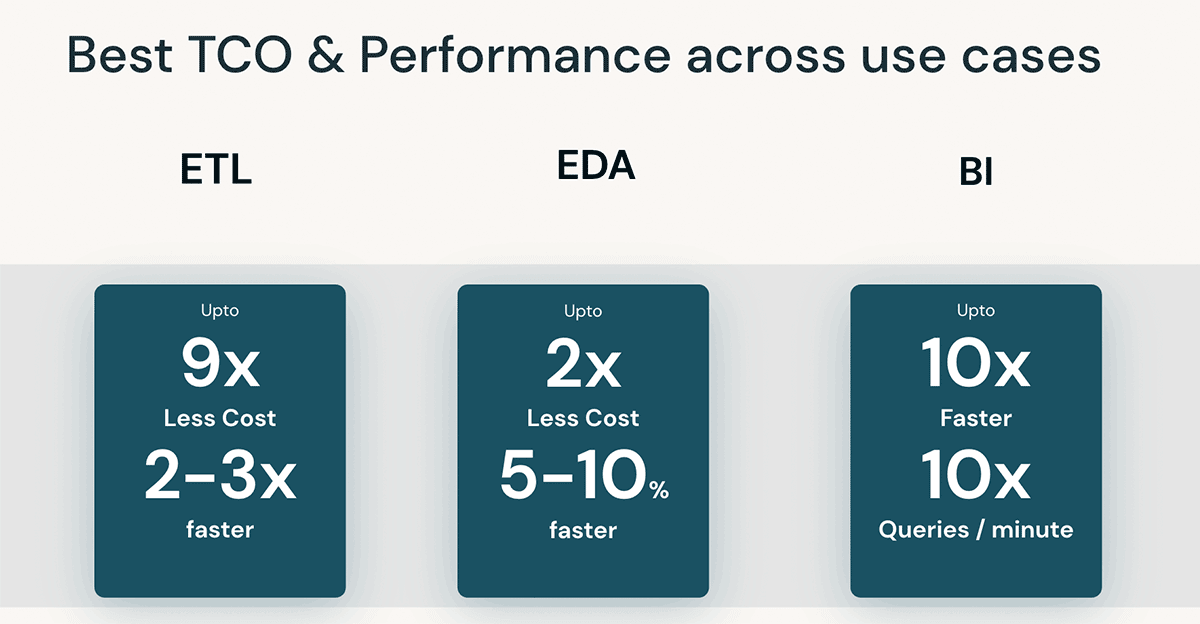

We consider that the perfect information warehouse is a lakehouse; due to this fact, we proceed to increase our management in ETL workloads and harnessing the ability of AI. Databricks SQL now additionally delivers industry-leading efficiency on your EDA and BI workloads, whereas bettering value financial savings – with no handbook tuning.

Say goodbye to manually creating indexes. With Predictive I/O for reads (GA) and updates (Public Preview), Databricks SQL now analyzes historic learn and write patterns to intelligently construct indexes and optimize workloads. Early prospects have benefited from a exceptional 35x enchancment in level lookup effectivity, spectacular efficiency boosts of 2-6x for MERGE operations and 2-10x for DELETE operations.

With Predictive Optimizations (Public Preview), Databricks will seamlessly optimize file sizes and clustering by operating OPTIMIZE, VACUUM, ANALYZE and CLUSTERING instructions for you. With this characteristic, Anker Improvements benefited from a 2.2x enhance to question efficiency whereas delivering 50% financial savings on storage prices.

“Databricks’ Predictive Optimizations intelligently optimized our Unity Catalog storage, which saved us 50% in annual storage prices whereas rushing up our queries by >2x. It realized to prioritize our largest and most-accessed tables. And, it did all of this mechanically, saving our workforce precious time.”

— Anker Improvements

Uninterested in managing completely different warehouses for smaller and bigger workloads or nice tuning scaling parameters? Clever Workload Administration is a collection of options that retains queries quick whereas protecting value low. By analyzing actual time patterns, Clever Workload Administration ensures that your workloads have the optimum quantity of compute to execute incoming SQL statements with out disrupting already operating queries.

With AI-powered optimizations, Databricks SQL gives {industry} main TCO and efficiency for any type of workload, with none handbook tuning wanted. To be taught extra about obtainable optimization previews, watch Reynold Xin’s keynote and Databricks SQL Serverless Below the Hood: How We Use ML to Get the Finest Value/Efficiency from the Information+AI Summit.

Unlock siloed information with Lakehouse Federation

Right this moment’s organizations face challenges in discovering, governing and querying siloed information sources throughout fragmented techniques. With Lakehouse Federation, information groups can use Databricks SQL to find, question and handle information in exterior platforms together with MySQL, PostgreSQL, Amazon Redshift, Snowflake, Azure SQL Database, Azure Synapse, Google’s BigQuery (coming quickly) and extra.

Moreover, Lakehouse Federation seamlessly integrates with superior options of Unity Catalog when accessing exterior information sources from inside Databricks. Implement row and column degree safety to limit entry to delicate info. Leverage information lineage to hint the origins of your information and guarantee information high quality and compliance. To arrange and handle information belongings, simply tag federated catalog belongings for easy information discovery.

Lastly, to speed up sophisticated transformations or cross-joins on federated sources, Lakehouse Federation helps Materialized Views for higher question latencies.

Lakehouse Federation is in Public Preview at this time. For extra particulars, watch our devoted session Lakehouse Federation: Entry and Governance of Exterior Information Sources from Unity Catalog from the Information+AI Summit.

Develop on the Lakehouse with the SQL Assertion Execution API

The SQL Assertion Execution API allows entry to your Databricks SQL warehouse over a REST API to question and retrieve outcomes. With HTTP frameworks obtainable for nearly all programming languages, you’ll be able to simply hook up with a various array of functions and platforms on to a Databricks SQL Warehouse.

The Databricks SQL Assertion Execution API is obtainable with the Databricks Premium and Enterprise tiers. To be taught extra, watch our session, comply with our tutorial (AWS | Azure), learn the documentation (AWS | Azure), or test our repository of code samples.

Streamline your information processing with Streaming Tables, Materialized Views, and DB SQL in Workflows

With Streaming Tables, Materialized Views, and DB SQL in Workflows, any SQL consumer can now apply information engineering greatest practices to course of information. Effectively ingest, rework, orchestrate, and analyze information with just some strains of SQL.

Streaming Tables are the perfect option to convey information into “bronze” tables. With a single SQL assertion, scalably ingest information from varied sources corresponding to cloud storage (S3, ADLS, GCS), message buses (EventHub, Kafka, Kinesis), and extra. This ingestion happens incrementally, enabling low-latency and cost-effective pipelines, with out the necessity for managing complicated infrastructure.

CREATE STREAMING TABLE web_clicks

AS

SELECT *

FROM STREAM

read_files('s3://mybucket')

Materialized Views cut back value and enhance question latency by pre-computing sluggish queries and continuously used computations, and are incrementally refreshed to enhance general latency. In a knowledge engineering context, they’re used for remodeling information. However they’re additionally precious for analyst groups in a knowledge warehousing context as a result of they can be utilized to (1) pace up end-user queries and BI dashboards, and (2) securely share information. In simply 4 strains of code, any consumer can create a materialized view for performant information processing.

CREATE MATERIALIZED VIEW customer_orders

AS

SELECT

prospects.title,

sum(orders.quantity),

orders.orderdate

FROM orders

LEFT JOIN prospects ON

orders.custkey = prospects.c_custkey

GROUP BY

title,

orderdate;

Want orchestration with DB SQL? Workflows now lets you schedule SQL queries, dashboards and alerts. Simply handle complicated dependencies between duties and monitor previous job executions with the intuitive Workflows UI or through API.

Streaming Tables and Materialized Views at the moment are in public preview. To be taught extra, learn our devoted weblog publish. To enroll within the public preview for each, enroll on this type. Workflows in DB SQL is now typically obtainable, and you may be taught extra by studying the documentation (AWS | Azure).

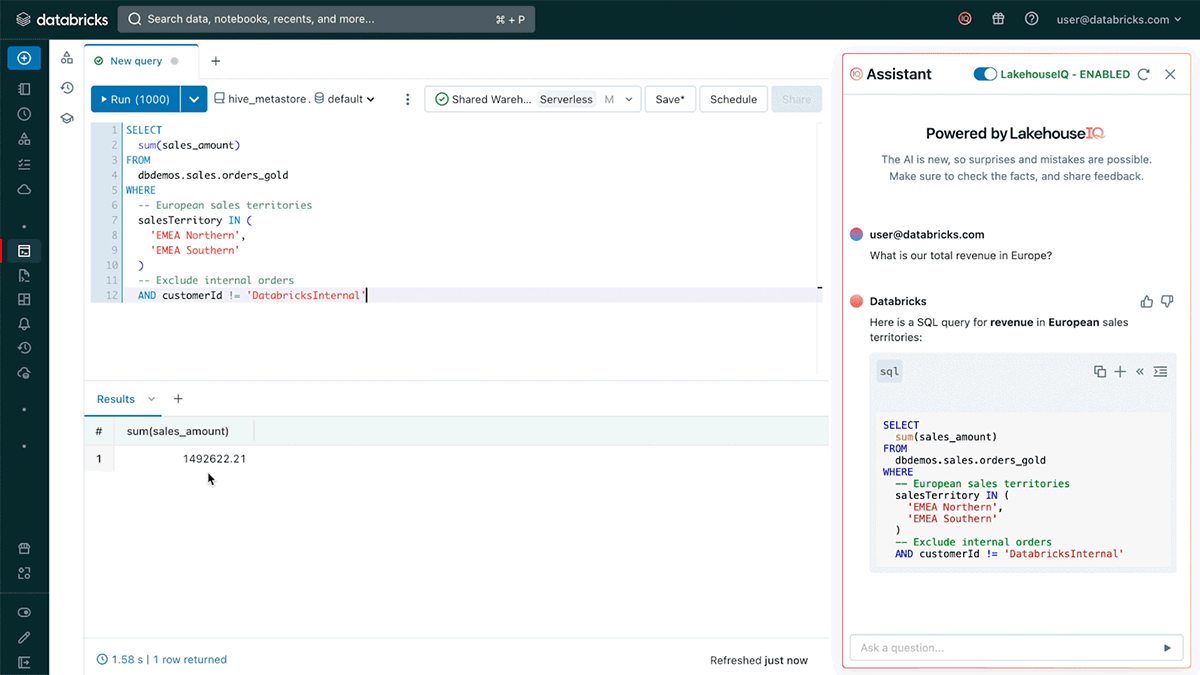

Databricks Assistant and LakehouseIQ: Write higher and quicker SQL with pure language

Databricks Assistant is a context-aware AI assistant embedded inside Databricks Notebooks and the SQL Editor. Databricks Assistant can take a pure language query and recommend a SQL question to reply that query. When making an attempt to grasp a fancy question, customers can ask the Assistant to elucidate it utilizing pure language, enabling anybody to grasp the logic behind question outcomes.

Behind the scenes, Databricks Assistant is powered by an AI information engine referred to as LakehouseIQ. LakehouseIQ understands indicators corresponding to schemas, reputation, lineage, feedback, and docs to enhance the search and AI experiences in Databricks. LakehouseIQ will improve numerous current product experiences with extra correct, related outcomes together with Search, Assist, and Databricks Assistant.

LakehouseIQ is presently in growth and can be obtainable later this yr. Databricks Assistant can be obtainable for public preview within the subsequent few weeks. Over time, we are going to combine the Assistant with LakehouseIQ to supply extra correct recommendations personalised in your firm’s information.

Handle your information warehouse with confidence

Directors and IT groups want the instruments to grasp information warehouse utilization. With System Tables, Stay Question Profile, and Assertion Timeouts, admins can monitor and repair issues after they happen, making certain that your information warehouse runs effectively.

Acquire deeper visibility and insights into your SQL atmosphere with System Tables. System Tables are Databricks-provided tables that comprise details about previous assertion executions, prices, lineage, and extra. Discover metadata and utilization metrics to reply questions like “What statements have been run and by whom?”, “How and when did my warehouses scale?” and “What was I billed for?”. Since System Tables are built-in inside Databricks, you’ve got entry to native capabilities corresponding to SQL alerts and SQL dashboards to automate the monitoring and alerting course of.

As of at this time, there are three System Tables presently in public preview: Audit Logs, Billable Utilization System Desk, and Lineage Sytem Desk (AWS | Azure). Further system tables for warehouse occasions and assertion historical past are coming quickly.

For instance, to compute the month-to-month DBUs used per SKU, you’ll be able to question the Billiable Utilization System Tables.

SELECT sku_name, usage_date, sum(usage_quantity) as `DBUs`

FROM system.billing.utilization

WHERE

month(usage_date) = month(NOW())

AND yr(usage_date) = yr(NOW())

GROUP BY sku_name, usage_date

With Stay Question Profile, customers achieve real-time insights into question efficiency to assist optimize workloads on the fly. Visualize question execution plans and assess stay question job executions to repair frequent SQL errors like exploding joins or full desk scans. Stay Question Profile lets you be certain that operating queries in your information warehouse are optimized and operating effectively. Be taught extra by studying the documentation (AWS | Azure).

In search of automated controls? Assertion Timeouts can help you set a customized workspace or question degree timeout. If a question’s execution time exceeds the timeout threshold, the question can be mechanically halted. Be taught extra by studying the documentation (AWS | Azure)

Compelling new experiences in DBSQL

Over the previous yr, we have been laborious at work so as to add new, cutting-edge experiences to Databricks SQL. We’re excited to announce new options that put the ability of AI in SQL customers fingers corresponding to, enabling SQL warehouses all through your entire Databricks platform; introducing a brand new technology of SQL dashboards; and bringing the ability of Python into Databricks SQL.

Democratize unstructured information evaluation with AI Capabilities

With AI Capabilities, DB SQL is bringing the ability of AI into the SQL warehouse. Effortlessly harness the potential of unstructured information by performing duties corresponding to sentiment evaluation, textual content classification, summarization, translation and extra. Information analysts can apply AI fashions through self-service, whereas information engineers can independently construct AI-enabled pipelines.

Utilizing AI Capabilities is kind of easy. For instance, contemplate a state of affairs the place a consumer needs to categorise the sentiment of some articles into Pissed off, Blissful, Impartial, or Glad.

-- create a udf for sentiment classification

CREATE FUNCTION classify_sentiment(textual content STRING)

RETURNS STRING

RETURN ai_query(

'Dolly', -- the title of the mannequin serving endpoint

named_struct(

'immediate',

CONCAT('Classify the next textual content into considered one of 4 classes [Frustrated, Happy, Neutral, Satisfied]:n',

textual content),

'temperature', 0.5),

'returnType', 'STRING');

-- use the udf

SELECT classify_sentiment(textual content) AS sentiment

FROM critiques;

AI Capabilities at the moment are in Public Preview. To enroll in the Preview, fill out the shape right here. To be taught extra, you too can learn our detailed weblog publish or overview the documentation (AWS | Azure).

Deliver the ability of SQL warehouses to notebooks

Databricks SQL warehouses are now public preview in notebooks, combining the pliability of notebooks with the efficiency and TCO of Databricks SQL Serverless and Professional warehouses. To allow SQL warehouses in notebooks, merely choose an obtainable SQL warehouse from the notebooks compute dropdown.

Discover and share insights with a brand new technology of dashboards

Uncover a revamped dashboarding expertise immediately on the Lakehouse. Customers can merely choose a desired dataset and construct gorgeous visualizations with a SQL-optional expertise. Say goodbye to managing separate queries and dashboard objects – an all-in-one content material mannequin simplifies the permissions and administration course of. Lastly, publish a dashboard to your total group, in order that any authenticated consumer in your id supplier can entry the dashboard through a safe internet hyperlink, even when they do not have Databricks entry.

New Databricks SQL Dashboards are presently in Non-public Preview. Contact your account workforce to be taught extra.

Leverage the pliability of Python in SQL

Deliver the pliability of Python into Databricks SQL with Python user-defined features (UDFs). Combine machine studying fashions or apply customized redaction logic for information processing and evaluation by calling customized Python features immediately out of your SQL question. UDFs are reusable features, enabling you to use constant processing to your information pipelines and evaluation.

As an example, to redact electronic mail and telephone numbers from a file, contemplate the next CREATE FUNCTION assertion.

CREATE FUNCTION redact(a STRING)

RETURNS STRING

LANGUAGE PYTHON

AS $$

import json

keys = ["email", "phone"]

obj = json.masses(a)

for ok in obj:

if ok in keys:

obj[k] = "REDACTED"

return json.dumps(obj)

$$;

Be taught extra about enrolling within the non-public preview right here.

Integrations together with your information ecosystem

At Information+AI Summit, Databricks SQL introduced new integrations for a seamless expertise together with your instruments of alternative.

Databricks + Fivetran

We’re thrilled to announce the final availability of Fivetran entry in Accomplice Join for all customers together with non-admins with adequate privileges to a catalog. This innovation makes it 10x simpler for all customers to ingest information into Databricks utilizing Fivetran. This can be a large win for all Databricks prospects as they will now convey information into the Lakehouse from lots of of connectors Fivetran gives, like Salesforce and PostgreSQL. Fivetran now absolutely helps Serverless warehouses as nicely!

Be taught extra by studying the weblog publish right here.

Databricks + dbt Labs

Simplify real-time analytics engineering on the lakehouse structure with Databricks and dbt Labs. The mix of dbt’s extremely fashionable analytics engineering framework with the Databricks Lakehouse Platform gives highly effective capabilities:

- dbt + Streaming Tables: Streaming ingestion from any supply is now built-in to dbt tasks. Utilizing SQL, analytics engineers can outline and ingest cloud/streaming information immediately inside their dbt pipelines.

- dbt + Materialized Views: Constructing environment friendly pipelines turns into simpler with dbt, leveraging Databricks’ highly effective incremental refresh capabilities. Customers can use dbt to construct and run pipelines backed by MVs, lowering infrastructure prices with environment friendly, incremental computation.

To be taught extra, learn the detailed weblog publish.

Databricks + PowerBI: Publish to PowerBI Workspaces

Publish datasets out of your Databricks workspace to PowerBI On-line workspace with a number of clicks! No extra managing odbc/jdbc connections – merely choose the dataset you wish to publish. Merely choose the datasets or schema you wish to publish and choose your PBI workspace! This makes it simpler for BI admins and report creators to assist PowerBI workspaces with out additionally having to make use of Energy BI Desktop.

PowerBI integration with Information Explorer is coming quickly and can solely be obtainable on Azure Databricks.

Getting Began with Databricks SQL

Comply with the information (AWS | Azure | GCP ) on setup a SQL warehouse to get began with Databricks SQL at this time! Databricks SQL Serverless is presently obtainable with a 20%+ promotional low cost, go to our pricing web page to be taught extra.

You may as well watch Databricks SQL: Why the Finest Serverless Information Warehouse is a Lakehouse and What’s New in Databricks SQL — With Stay Demos for a whole overview.