(Billion Photos/Shutterstock)

The past year has seen an explosion in LLM activity, with ChatGPT alone surpassing 100 million users. And the excitement has penetrated boardrooms across every industry, from healthcare to financial services to high-tech. The easy part is starting the conversation: nearly every organization we talk to tells us they want a ChatGPT for their company. The harder part comes next: “So what do you want that internal LLM to do?”

As sales teams love to ask: “What’s your actual use case?” But that’s where half of the conversations grind to a halt. Most organizations simply don’t know their use case.

ChatGPT’s simple chat interface has trained the first wave of LLM adopters in a simple interaction pattern: you ask a question and get an answer back. In some ways, the consumer version has taught us that LLMs are essentially a more concise Google. But used correctly, the technology is much more powerful than that.

Having access to an internal AI system that understands your data is more than a better internal search. The right way to think about LLMs is not as “a slightly better Google (or heaven forbid, Clippy) on internal data.” The right way to think about them is as a workforce multiplier: do more by automating more, especially as you work with your unstructured data.

In this article, we’ll cover some of the main applications of LLMs we see in the enterprise that actually drive business value. We’ll start simple, with ones that sound familiar, and work our way to the bleeding edge.

Top LLM Use Cases in the Enterprise

We’ll describe five categories of use cases; for each, we’ll explain what we mean by the use case, why LLMs are a good fit, and a specific example of an application in the category.

The categories are:

- Q&A and search (i.e., chatbots)

- Information extraction (creating structured tables from documents)

- Text classification

- Generative AI

- Blending traditional ML with LLMs (personalization systems are one example)

For each, it can also be helpful to understand whether solving the use case requires changing the LLM’s knowledge (the set of facts or content it has been exposed to) or its reasoning (how it generates answers based on those facts). By default, most widely used LLMs are trained on English-language data from the internet as their knowledge base and “taught” to generate similar language as output.

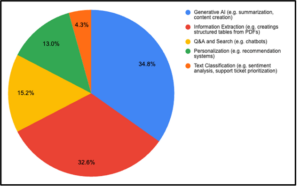

Over the past three months, we surveyed 150 executives, data scientists, machine learning engineers, developers, and product managers at both large and small enterprises about their use of LLMs internally. That, combined with the customers we work with on a daily basis, will drive our insights here.

Self-reported use cases from a survey of 150 data professionals

Use Case #1: Q&A and Search

Candidly, this is what most customers first think of when they translate ChatGPT internally: they want to ask questions over their documents.

In general, LLMs are well-suited to this task, as you can essentially “index” your internal documentation and use a process called Retrieval Augmented Generation (RAG) to pass new, company-specific knowledge into the same LLM reasoning pipeline.
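The "index, retrieve, then pass in as context" flow can be sketched in a few lines. This is a minimal illustration rather than a production design: it assumes a simple word-count similarity in place of a real embedding model, the documents are invented, and the final prompt would be sent to whichever LLM you use (no model is called here).

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_index(documents):
    """'Index' each document as a word-count vector (a stand-in for embeddings)."""
    return [(doc, Counter(tokenize(doc))) for doc in documents]


def retrieve(index, question, k=2):
    """Return the k documents most similar to the question."""
    q_vec = Counter(tokenize(question))
    ranked = sorted(
        index, key=lambda item: cosine_similarity(q_vec, item[1]), reverse=True
    )
    return [doc for doc, _ in ranked[:k]]


def build_prompt(question, passages):
    """Place the retrieved passages in the prompt so the LLM answers from them."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical internal documents, invented for illustration.
DOCS = [
    "Expense reports must be submitted within 30 days of purchase.",
    "The VPN client is required for remote access to internal tools.",
    "New employees receive their laptop on the first day of onboarding.",
]

index = build_index(DOCS)
question = "How many days do I have to submit an expense report?"
top_passages = retrieve(index, question, k=1)
prompt = build_prompt(question, top_passages)
print(prompt)  # this string would be sent to the LLM of your choice
```

The model itself is unchanged in this pattern; only the context it sees changes, which is why RAG affects the LLM's knowledge rather than its reasoning.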

There are two main caveats organizations should be aware of when building a Q&A system with LLMs:

- LLMs are non-deterministic: they can hallucinate, and you need guardrails on either the outputs or how the LLM is used within your business to safeguard against this.

- LLMs aren’t good at analytical computation or “aggregate” queries: if you gave an LLM 100 financial filings and asked “which company made the most money,” answering would require aggregating knowledge across many companies and comparing them to get a single answer. Out of the box, it will fail, but we’ll cover strategies for tackling this in use case #2.

Example: Helping scientists gain insights from scattered reports

One nonprofit we work with is a global leader in environmental conservation. They develop detailed PDF reports for the hundreds of projects they sponsor annually. With a limited budget, the organization must carefully allocate program dollars to projects delivering the best outcomes. Historically, this required a small team to review thousands of pages of reports. There aren’t enough hours in the day to do this effectively. By building an LLM Q&A application on top of its large corpus of documents, the organization can now quickly ask questions like, “What are the top 5 regions where we have had the most success with reforestation?” These new capabilities have enabled the organization to make smarter decisions about its projects in real time.

Use Case #2: Information Extraction

It’s estimated that around 80% of all data is unstructured, and much of that data is text contained within documents. The older cousin of question-answering, information extraction is intended to solve the analytical and aggregate queries enterprises want to answer over these documents.

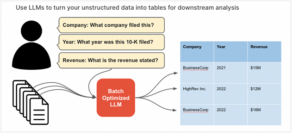

The process of building effective information extraction involves running an LLM over each document to “extract” relevant information and construct a table you can query.
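As a rough sketch of that process, the example below runs an extractor over each document, loads the results into a table, and answers the "which company made the most money" question with an ordinary SQL query. The filings, field names, and `extract_fields` helper are hypothetical; in a real system `extract_fields` would prompt an LLM, whereas here a regular expression stands in so the code runs without a model.

```python
import re
import sqlite3


def extract_fields(document):
    """Stand-in for an LLM call that returns structured fields for one document.

    A real system would prompt an LLM to return these fields; a regex is used
    here only so the sketch runs without a model.
    """
    company = re.search(r"^(\w+) reported", document).group(1)
    revenue = re.search(r"revenue of \$(\d+) million", document).group(1)
    year = re.search(r"fiscal year (\d{4})", document).group(1)
    return {"company": company, "year": int(year), "revenue_musd": int(revenue)}


# Hypothetical filings, invented for illustration.
FILINGS = [
    "Acme reported revenue of $120 million for fiscal year 2022.",
    "Globex reported revenue of $340 million for fiscal year 2022.",
    "Initech reported revenue of $95 million for fiscal year 2022.",
]

# Step 1: run the extractor over each document, one at a time.
rows = [extract_fields(doc) for doc in FILINGS]

# Step 2: assemble the extracted fields into a table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE filings (company TEXT, year INTEGER, revenue_musd INTEGER)"
)
conn.executemany(
    "INSERT INTO filings VALUES (:company, :year, :revenue_musd)", rows
)

# Step 3: the aggregate question becomes an ordinary SQL query.
top_company = conn.execute(
    "SELECT company, revenue_musd FROM filings ORDER BY revenue_musd DESC LIMIT 1"
).fetchone()
print(top_company)
```

The design point is that the LLM only ever sees one document at a time; the comparison across documents is left to the database, which is built for it.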

Example: Creating Structured Insights for Healthcare and Banking

Information extraction is useful in a number of industries like healthcare, where you might want to enrich structured patient records with data from PDF lab reports or doctors’ notes. Another example is investment banking. A fund manager can take a large corpus of unstructured financial reports, like 10-Ks, and create structured tables with fields like revenue by year, number of customers, new products, new markets, etc. This data can then be analyzed to determine the best investment options. Check out this free example notebook on how you can do information extraction.

Use Case #3: Text Classification

Usually the domain of traditional supervised machine learning models, text classification is one classic way high-tech companies are using large language models to automate tasks like support ticket triage, content moderation, sentiment analysis, and more. The primary benefit that LLMs have over supervised ML is that they can operate zero-shot, meaning without training data or the need to adjust the underlying base model.
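To make "zero-shot" concrete, here is a minimal sketch of a classification prompt that names the allowed categories but includes no labeled examples, plus a small parser that maps the model's free-form reply back onto a known label. The categories, the ticket, and the `fake_llm` stand-in are all assumptions made for illustration; a real deployment would call an actual model in its place.

```python
LABELS = ["billing", "bug report", "account access"]  # hypothetical categories


def build_zero_shot_prompt(ticket, labels):
    """Describe the task and the allowed labels; no labeled examples are included."""
    options = ", ".join(labels)
    return (
        "Classify the support ticket into exactly one of these categories: "
        f"{options}.\n"
        f"Ticket: {ticket}\n"
        "Category:"
    )


def parse_label(raw_output, labels, fallback="needs human review"):
    """Map free-form model text onto a known label, or fall back if none matches."""
    cleaned = raw_output.strip().lower().rstrip(".")
    for label in labels:
        if cleaned == label:
            return label
    return fallback


def fake_llm(prompt):
    """Stand-in for a real model call so the sketch runs offline."""
    return " Billing.\n" if "charged" in prompt else "I am not sure"


ticket = "I was charged twice for my subscription this month."
prompt = build_zero_shot_prompt(ticket, LABELS)
label = parse_label(fake_llm(prompt), LABELS)
print(label)
```

The fallback label is one simple way to keep an unexpected reply from being silently filed under the wrong category.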

If you do have training data as examples you want to fine-tune your model with to get better performance, LLMs also support that capability out of the box. Fine-tuning is primarily instrumental in changing the way the LLM reasons, for example asking it to pay more attention to some parts of an input versus others. It can also be valuable in helping you train a smaller model (since it doesn’t need to be able to recite French poetry, just classify support tickets) that can be cheaper to serve.

Example: Automating Customer Support

Forethought, a leader in customer support automation, uses LLMs for a broad range of solutions such as intelligent chatbots and classifying support tickets to help customer service agents prioritize and triage issues faster. Their work with LLMs is documented in this real-life use case with Upwork.

Use Case #4: Generative Tasks

Venturing into more cutting-edge territory is the class of use cases where an organization wants to use an LLM to generate some content, often for an end-user-facing application.

You’ve seen examples of this before even with ChatGPT, like the classic “write me a blog post about LLM use cases.” But from our observations, generative tasks in the enterprise tend to be unique in that they usually look to generate some structured output. This structured output could be code that is sent to a compiler, JSON sent to a database, or a configuration that helps automate some task internally.

Structured generation can be tricky; not only does the output need to be accurate, it also needs to be formatted correctly. But when successful, it is one of the most effective ways that LLMs can help translate natural language into a form readable by machines and therefore accelerate internal automation.
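One common way to handle the formatting requirement is to validate every reply before it reaches a downstream system and to retry when validation fails. The sketch below assumes a hypothetical three-field schema and a `fake_llm` stand-in whose first reply is deliberately malformed; it illustrates the pattern only and does not describe any particular product's approach.

```python
import json

# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS = {"action": str, "ticket_id": int, "priority": str}


def validate(raw_output):
    """Return the parsed object if it is valid JSON with the expected fields, else None."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            return None
    return data


def generate_structured(generate, prompt, max_attempts=3):
    """Call the generator until its output validates or attempts run out."""
    for _ in range(max_attempts):
        result = validate(generate(prompt))
        if result is not None:
            return result
    raise ValueError("no valid structured output after retries")


# Stand-in for an LLM: the first reply is malformed, the second is well-formed.
_replies = iter([
    'Sure! Here is the JSON: {"action": "escalate"',
    '{"action": "escalate", "ticket_id": 4821, "priority": "high"}',
])


def fake_llm(prompt):
    """Return the next canned reply; a real system would call a model here."""
    return next(_replies)


payload = generate_structured(fake_llm, "Escalate ticket 4821 as high priority.")
print(payload)
```

Validation of this kind checks format only; whether the values themselves are accurate still has to be verified separately.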

Example: Generating Code

In this short tutorial video, we show how an LLM can be used to generate JSON, which can then be used to automate downstream applications that work via API.

Use Case #5: Blending ML and LLMs

Authors shouldn’t have favorites, but my favorite use case is the one we have seen most recently from companies on the cutting edge of production ML applications: blending traditional machine learning with LLMs. The core idea here is to augment the context and knowledge base of an LLM with predictions that come from a supervised ML model and allow the LLM to do additional reasoning on top of that. Essentially, instead of using a standard database as the knowledge base for an LLM, you use a separate machine learning model itself.

A great example of this is using embeddings and a recommender system model for personalization.

Example: Conversational Recommendation for E-commerce

An e-commerce vendor we work with was interested in creating a more personalized shopping experience that takes advantage of natural language queries like, “What leather men’s shoes would you recommend for a wedding?” They built a recommender system using supervised ML to generate personalized recommendations based on a customer’s profile. The values are then fed to an LLM so the customer can ask questions with a chat-like interface. You can see an example of this use case with this free notebook.
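The general shape of this pattern, with model predictions passed to the LLM as context, might look like the sketch below. It is not the vendor's implementation: the catalog, customer profile, and tag-overlap scoring are invented stand-ins for a trained recommender, and the resulting prompt would be sent to whichever LLM powers the chat interface.

```python
def recommend(profile, catalog, k=2):
    """Stand-in for a trained recommender: score items against a customer profile.

    A production system would use a supervised model; here the score is the
    overlap between the customer's preferred tags and each item's tags.
    """
    scored = [
        (len(profile["preferred_tags"] & item["tags"]), item["name"])
        for item in catalog
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(name, score) for score, name in scored[:k]]


def build_prompt(question, recommendations):
    """Pass the model's predictions to the LLM as context for the chat answer."""
    lines = "\n".join(
        f"- {name} (score {score})" for name, score in recommendations
    )
    return (
        "You are a shopping assistant. Recommend only from these items, "
        "which were ranked for this customer by a recommender model:\n"
        f"{lines}\n"
        f"Customer question: {question}\nAnswer:"
    )


# Hypothetical catalog and profile, invented for illustration.
CATALOG = [
    {"name": "Oxford dress shoe", "tags": {"leather", "formal", "mens"}},
    {"name": "Trail running shoe", "tags": {"mesh", "sport", "mens"}},
    {"name": "Leather loafer", "tags": {"leather", "casual", "mens"}},
]
PROFILE = {"preferred_tags": {"leather", "formal", "mens"}}

question = "What leather men's shoes would you recommend for a wedding?"
recs = recommend(PROFILE, CATALOG, k=2)
prompt = build_prompt(question, recs)
print(prompt)
```

Here the recommender decides which items are relevant to this customer, and the LLM is left to explain and converse about them in natural language.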

The breadth of high-value use cases for LLMs extends far beyond ChatGPT-style chatbots. Teams looking to get started with LLMs can take advantage of commercial LLM offerings or customize open-source LLMs like Llama-2 or Vicuna on their own data within their cloud environment with hosted platforms like Predibase.

About the author: Devvret Rishi is the co-founder and Chief Product Officer at Predibase, a provider of tools for developing AI and machine learning applications. Prior to Predibase, Devvret was a product manager at Google and was a Teaching Fellow for Harvard University’s Introduction to Artificial Intelligence class.
