Hugging Face recently released Falcon 180B, the largest open source large language model, which is said to perform as well as Google’s state-of-the-art AI, Palm 2. It also has no guardrails to keep it from creating unsafe or harmful outputs.

Falcon 180B Achieves State Of The Art Performance

The phrase “state-of-the-art” means that something is performing at the highest possible level, equal to or surpassing the current example of what’s best.

It’s a big deal when researchers announce that an algorithm or large language model achieves state-of-the-art performance.

And that’s precisely what Hugging Face says about Falcon 180B.

Falcon 180B achieves state-of-the-art performance on natural language tasks, beats out previous open source models and also “rivals” Google’s Palm 2 in performance.

These aren’t just boasts, either.

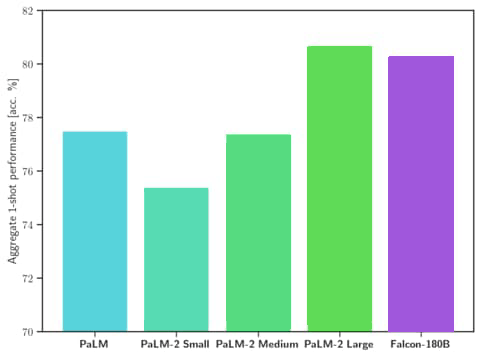

Hugging Face’s claim that Falcon 180B rivals Palm 2 is backed up by data.

The data shows that Falcon 180B outperforms the previous most powerful open source model, Llama 2 70B, across a range of tasks used to measure how powerful an AI model is.

Falcon 180B even outperforms OpenAI’s GPT-3.5.

The testing data also shows that Falcon 180B performs at the same level as Google’s Palm 2.

Screenshot of Performance Comparison

The announcement explained:

“Falcon 180B is the best openly released LLM today, outperforming Llama 2 70B and OpenAI’s GPT-3.5…

Falcon 180B typically sits somewhere between GPT 3.5 and GPT4 depending on the evaluation benchmark…”

The announcement goes on to suggest that additional fine tuning of the model by users could improve performance even further.


Dataset Used To Train Falcon 180B

Hugging Face released a research paper (PDF version here) containing details of the dataset used to train Falcon 180B.

It’s called The RefinedWeb Dataset.

This dataset consists only of content from the Internet, obtained from the open source Common Crawl, a publicly available dataset of the web.

The dataset is subsequently filtered and put through a process of deduplication (the removal of duplicate or redundant data) to improve the quality of what’s left.

What the researchers are trying to achieve with the filtering is to remove machine-generated spam, repeated content, boilerplate, plagiarized content and data that isn’t representative of natural language.
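As a rough illustration of what this kind of filtering can look like, here is a minimal Python sketch. It is not the researchers' actual pipeline: the function name `looks_like_natural_language` and both thresholds are hypothetical, chosen only to show the idea of rejecting text dominated by special characters or by repeated lines.

```python
def looks_like_natural_language(text, max_symbol_ratio=0.3, max_repeated_line_ratio=0.3):
    """Hypothetical quality check; the thresholds are illustrative assumptions."""
    stripped = text.strip()
    if not stripped:
        return False

    # Share of characters that are neither letters, digits, nor whitespace.
    symbols = sum(1 for ch in stripped if not (ch.isalnum() or ch.isspace()))
    if symbols / len(stripped) > max_symbol_ratio:
        return False

    # Share of non-empty lines that duplicate an earlier line in the same text.
    lines = [ln.strip() for ln in stripped.splitlines() if ln.strip()]
    repeated = len(lines) - len(set(lines))
    if repeated / len(lines) > max_repeated_line_ratio:
        return False

    return True


if __name__ == "__main__":
    samples = [
        "The quick brown fox jumps over the lazy dog.",
        "$$$ ### !!! ***",
        "Buy now\nBuy now\nBuy now\nBuy now",
    ]
    for sample in samples:
        print(repr(sample), looks_like_natural_language(sample))
```

A production filter would likely combine many more signals (language identification, URL blocklists, line-level rules), so this should be read as a toy example only.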

The research paper explains:

“Due to crawling errors and low quality sources, many documents contain repeated sequences: this may cause pathological behavior in the final model…

…A significant fraction of pages are machine-generated spam, made predominantly of lists of keywords, boilerplate text, or sequences of special characters.

Such documents are not suitable for language modeling…

…We adopt an aggressive deduplication strategy, combining both fuzzy document matches and exact sequences removal.”
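To make the idea of combining exact and fuzzy matching concrete, here is a small Python sketch. It is a simplification under stated assumptions, not the paper's method: exact matching is done on whole normalized documents with a hash (rather than on repeated sequences inside documents), and fuzzy matching uses brute-force Jaccard similarity over word shingles, which would not scale to a web-sized corpus. The helper names and the 0.8 threshold are hypothetical.

```python
import hashlib


def shingles(text, n=3):
    """Return the set of overlapping n-word windows in the text."""
    words = text.lower().split()
    if len(words) < n:
        return {" ".join(words)}
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets: |intersection| / |union|."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def deduplicate(docs, threshold=0.8):
    """Keep the first copy of each document; drop exact and near duplicates."""
    kept = []
    seen_hashes = set()
    kept_shingles = []
    for doc in docs:
        # Exact pass: hash the case- and whitespace-normalized text.
        normalized = " ".join(doc.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue
        # Fuzzy pass: compare against every document kept so far.
        current = shingles(normalized)
        if any(jaccard(current, other) >= threshold for other in kept_shingles):
            continue
        seen_hashes.add(digest)
        kept_shingles.append(current)
        kept.append(doc)
    return kept


if __name__ == "__main__":
    base = "the quick brown fox jumps over the lazy dog near the river bank"
    docs = [
        base,
        "The quick  brown fox jumps over the lazy dog near the river bank",
        base + " today",
        "Falcon 180B was trained on filtered web data",
    ]
    for doc in deduplicate(docs):
        print(doc)
```

In this example the second document is dropped by the exact pass and the third by the fuzzy pass, leaving only the first and last. The pairwise comparison is quadratic in the number of kept documents, so a large-scale pipeline would need an approximate technique instead.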

Apparently it becomes imperative to filter and otherwise clean up the dataset because it is composed exclusively of web data, as opposed to other datasets that add non-web data.

The researchers’ efforts to filter out the nonsense resulted in a dataset that they claim is every bit as good as more curated datasets that are made up of pirated books and other sources of non-web data.

They conclude by stating that their dataset is a success:

“We have demonstrated that stringent filtering and deduplication could result in a five trillion tokens web only dataset suitable to produce models competitive with the state-of-the-art, even outperforming LLMs trained on curated corpora.”

Falcon 180B Has Zero Guardrails

Notable about Falcon 180B is that no alignment tuning has been done to keep it from generating harmful or unsafe output, and nothing prevents it from inventing facts and outright lying.

As a consequence, the model can be tuned to generate the kind of output that can’t be generated with products from OpenAI and Google.

This is listed in a section of the announcement titled limitations.

Hugging Face advises:

“Limitations: the model can and will produce factually incorrect information, hallucinating facts and actions.

As it has not undergone any advanced tuning/alignment, it can produce problematic outputs, especially if prompted to do so.”

Commercial Use Of Falcon 180B

Hugging Face allows commercial use of Falcon 180B.

However, it’s released under a restrictive license.

Those who wish to use Falcon 180B are encouraged by Hugging Face to first consult a lawyer.

Falcon 180B Is Like A Starting Point

Lastly, the model hasn’t undergone instruction training, which means that it needs to be trained to be an AI chatbot.

So it’s like a base model that needs more to become whatever users want it to be. Hugging Face also released a chat model but it’s apparently a “simple” one.

Hugging Face explains:

“The base model has no prompt format. Remember that it’s not a conversational model or trained with instructions, so don’t expect it to generate conversational responses—the pretrained model is a great platform for further finetuning, but you probably shouldn’t directly use it out of the box.

The Chat model has a very simple conversation structure.”
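For readers wondering what a "very simple conversation structure" might look like in practice, here is a hedged Python sketch. It assumes a plain-text layout with `System:`, `User:` and `Falcon:` role prefixes, one turn per line; that layout is an assumption here and should be checked against the official announcement or model card before use. The function name `build_chat_prompt` is hypothetical.

```python
def build_chat_prompt(turns, system=None, assistant_name="Falcon"):
    """Format (user_message, reply) pairs as a plain-text chat prompt.

    Assumed layout (verify against the model card): one line per turn,
    prefixed with "System:", "User:" or the assistant name. A reply of
    None leaves the assistant line open for the model to complete.
    """
    lines = []
    if system:
        lines.append(f"System: {system}")
    for user_message, reply in turns:
        lines.append(f"User: {user_message}")
        if reply is None:
            lines.append(f"{assistant_name}:")
        else:
            lines.append(f"{assistant_name}: {reply}")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_chat_prompt(
        [("What is Common Crawl?", None)],
        system="Answer briefly.",
    )
    print(prompt)
```

The resulting string would be passed to the chat model as ordinary text. The base model, by contrast, has no such format and simply continues whatever text it is given.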

Read the official announcement:

Spread Your Wings: Falcon 180B is here

Featured image by Shutterstock/Giu Studios