In Half One, we mentioned first determine sluggish queries on MongoDB utilizing the database profiler, after which investigated what the methods the database took doing in the course of the execution of these queries to know why our queries have been taking the time and sources that they have been taking. On this weblog publish, we’ll focus on a number of different focused methods that we will use to hurry up these problematic queries when the precise circumstances are current.

Avoiding Assortment Scans utilizing Person-Outlined Learn Indexes

When working at scale, most main manufacturing databases can’t afford any assortment scans in any respect until the QPS could be very low or the gathering dimension itself is small. In case you discovered throughout your investigation in Half One which your queries are being slowed down by pointless assortment scans, it’s possible you’ll wish to think about using user-defined indexes in MongoDB.

Identical to relational databases, NoSQL databases like MongoDB additionally make the most of indexes to hurry up queries. Indexes retailer a small portion of every assortment’s information set into separate traversable information buildings. These indexes then allow your queries to carry out at sooner speeds by minimizing the variety of disk accesses required with every request.

When you already know the queries forward of time that you simply’re seeking to pace up, you’ll be able to create indexes from inside MongoDB on the fields which you want sooner entry to. With just some easy instructions, MongoDB will mechanically kind these fields into separate entries to optimize your question lookups.

To create an index in MongoDB, merely use the next syntax:

db.assortment.createIndex( <key and index kind specification>, <choices> )

As an example, the next command would create a single subject index on the sphere coloration:

db.assortment.createIndex( { coloration: -1 } )

MongoDB gives a number of index sorts optimized for numerous question lookups and information sorts:

- Single Area Indexes are used to a index single subject in a doc

- Compound Area Indexes are used to index a number of fields in a doc

- Multikey Indexes are used to index the content material saved in arrays

- Geospatial Indexes are used to effectively index geospatial coordinate information

- Textual content Indexes are used to effectively index string content material in a set

- Hashed Indexes are used to index the hash values of particular fields to help hash-based sharding

Whereas indexes can pace up with sure queries tremendously, additionally they include tradeoffs. Indexes use reminiscence, and including too many will trigger the working set to now not match inside reminiscence, which can truly tank the efficiency of the cluster. Thus, you at all times wish to make sure you’re indexing simply sufficient, however not an excessive amount of.

For extra particulars, make sure you take a look at our different weblog publish on Indexing on MongoDB utilizing Rockset!

Avoiding Doc Scans Totally utilizing Coated Queries

In case you discovered throughout your investigation that your queries are scanning an unusually excessive variety of paperwork, it’s possible you’ll wish to look into whether or not or not a question could be happy with out scanning any paperwork in any respect utilizing index-only scan(s). When this happens, we are saying that the index has “coated” this question since we now not have to do any extra work to finish this question. Such queries are often called coated queries, and are solely potential if and provided that all of those two necessities are happy:

- Each subject the question must entry is a part of an index

- Each subject returned by this question is in the identical index

Moreover, MongoDB has the next restrictions which stop indexes from totally overlaying queries:

- No subject within the overlaying index is an array

- No subject within the overlaying index is a sub-document

- The _id subject can’t be returned by this question

As an example, let’s say we now have a set rocks which has a multikey index on two fields, coloration and sort:

db.rocks.createIndex({ coloration: 1, kind: 1 })

Then, if attempt to discover the varieties of rocks for a selected coloration, that question could be “coated” by the above index:

db.customers.discover({ coloration: "black" }, { kind: 1, _id: 0 })

Let’s take a deeper take a look at what the database is doing utilizing the EXPLAIN methodology we discovered about in the course of the investigation section.

Utilizing a fundamental question and not using a overlaying index with a single doc, the next executionStats are returned:

"executionStats" : {

"executionSuccess" : true,

"nReturned" : 1,

"executionTimeMillis" : 0,

"totalKeysExamined" : 1,

"totalDocsExamined" : 1

}

Utilizing our coated question, nevertheless, the next executionStats are returned:

"executionStats" : {

"executionSuccess" : true,

"nReturned" : 1,

"executionTimeMillis" : 0,

"totalKeysExamined" : 1,

"totalDocsExamined" : 0

}

Observe that the variety of paperwork scanned modified to 0 within the coated question – this efficiency enchancment was made potential as a result of index we created earlier which contained all the information we would have liked (thereby “overlaying” the question). Thus, MongoDB didn’t have to scan any assortment paperwork in any respect. Tweaking your indexes and queries to permit for such instances can considerably enhance question efficiency.

Avoiding Software-Degree JOINs utilizing Denormalization

NoSQL databases like MongoDB are sometimes structured and not using a schema to make writes handy, and it’s a key half what additionally makes them so distinctive and well-liked. Nevertheless, the dearth of a schema can dramatically slows down reads, inflicting issues with question efficiency as your utility scales.

As an example, one of the vital generally well-known drawbacks of utilizing a NoSQL database like MongoDB is the dearth of help for database-level JOINs. If any of your queries are becoming a member of information throughout a number of collections in MongoDB, you’re doubtless doing it on the utility stage. This, nevertheless, is tremendously pricey since you need to switch all the information from the tables concerned into your utility earlier than you’ll be able to carry out the operation.

Growing Learn Efficiency by Denormalizing Your Information

If you find yourself storing relational information in a number of collections in MongoDB which requires a number of queries to retrieve the information you want, you’ll be able to denormalize it to extend learn efficiency. Denormalization is the method by which we commerce write efficiency for learn efficiency by embedding information from one assortment into one other, both by making a duplicate of sure fields or by transferring it fully.

As an example, let’s say you will have the next two collections for workers and firms:

{

"electronic mail" : "john@instance.com",

"identify" : "John Smith",

"firm" : "Google"

},

{

"electronic mail" : "mary@instance.com",

"identify" : "Mary Adams",

"firm" : "Microsoft"

},

...

{

"identify" : "Google",

"inventory" : "GOOGL",

"location" : "Mountain View, CA"

},

{

"identify" : "Microsoft",

"inventory" : "MSFT",

"location" : "Redmond, WA"

},

...

As an alternative of making an attempt to question the information from each collections utilizing an application-level JOIN, we will as an alternative embed the businesses assortment inside the workers assortment:

{

"electronic mail" : "john@instance.com",

"identify" : "John Smith",

"firm" : {

"identify": "Google",

"inventory" : "GOOGL",

"location" : "Mountain View, CA"

}

},

{

"electronic mail" : "mary@instance.com",

"identify" : "Mary Adams",

"firm" : {

"identify" : "Microsoft",

"inventory" : "MSFT",

"location" : "Redmond, WA"

}

},

...

Now that every one of our information is already saved in a single place, we will merely question the workers assortment a single time to retrieve every little thing we’d like, avoiding the necessity to do any JOINs fully.

As we famous earlier, whereas denormalizing your information does enhance learn efficiency, it doesn’t come with out its drawbacks both. A right away downside could be that we’re probably rising storage prices considerably by having to maintain a redundant copies of the information. In our earlier instance, each single worker would now have the complete firm information embedded inside its doc, inflicting an exponential enhance in storage dimension. Moreover, our write efficiency could be severely affected – as an illustration, if we needed to alter the placement subject of an organization that moved its headquarters, we’d now must undergo each single doc in our staff assortment to replace its firm’s location.

What about MongoDB’s $lookup operator?

To assist sort out its lack of help for JOINs, MongoDB added a brand new operator known as $lookup within the launch for MongoDB 3.2. The $lookup operator is an aggregation pipeline operator which performs a left outer be a part of to an unsharded assortment in the identical database to filter in paperwork from the “joined” assortment for processing. The syntax is as follows:

{

$lookup:

{

from: <assortment to hitch>,

localField: <subject from the enter paperwork>,

foreignField: <subject from the paperwork of the "from" assortment>,

as: <output array subject>

}

}

As an example, let’s check out our earlier instance once more for the 2 collections staff and firms:

{

"electronic mail" : "john@instance.com",

"identify" : "John Smith",

"firm" : "Google"

},

{

"electronic mail" : "mary@instance.com",

"identify" : "Mary Adams",

"firm" : "Microsoft"

},

...

{

"identify" : "Google",

"inventory" : "GOOGL",

"location" : "Mountain View, CA"

},

{

"identify" : "Microsoft",

"inventory" : "MSFT",

"location" : "Redmond, WA"

},

...

You possibly can then run the next command to hitch the tables collectively:

db.staff.combination([{

$lookup: {

from: "companies",

localField: "company",

foreignField: "name",

as: "employer"

}

}])

The question would return the next:

{

"electronic mail" : "john@instance.com",

"identify" : "John Smith",

"firm" : "Google"

"employer": {

"identify" : "Microsoft",

"inventory" : "GOOGL",

"location" : "Mountain View, CA"

}

},

{

"electronic mail" : "mary@instance.com",

"identify" : "Mary Adams",

"firm" : "Microsoft"

"employer": {

"identify" : "Microsoft",

"inventory" : "MSFT",

"location" : "Redmond, WA"

}

},

...

Whereas this helps to alleviate a number of the ache of performing JOINs on MongoDB collections, it’s removed from a whole resolution with some notoriously well-known drawbacks. Most notably, its efficiency is considerably worse than JOINs in SQL databases like Postgres, and nearly at all times requires an index to help every JOIN. As well as, even minor adjustments in your information or aggregation necessities may cause you to must closely rewrite the appliance logic.

Lastly, even at peak efficiency, the performance is solely very restricted – the $lookup operator solely permits you to carry out left outer joins, and can’t be used on sharded collections. It additionally can’t work straight with arrays, that means that you would need to a separate operator within the aggregation pipeline to first unnest any nested fields. As MongoDB’s CTO Eliot Horowitz wrote throughout its launch, “we’re nonetheless involved that $lookup could be misused to deal with MongoDB like a relational database.” On the finish of the day, MongoDB continues to be a document-based NoSQL database, and isn’t optimized for relational information at scale.

Velocity Up Queries and Carry out Quick JOINs utilizing Exterior Indexes

In case you’ve tried all the interior optimizations you’ll be able to consider inside MongoDB and your queries are nonetheless too sluggish, it could be time for an exterior index. Utilizing an exterior index, your information could be indexes and queried from a completely separate database with a very completely different set of strengths and limitations. Exterior indexes are tremendously useful for not solely reducing load in your main OLTP databases, but in addition to carry out sure complicated queries that aren’t splendid on a NoSQL database like MongoDB (similar to aggregation pipelines utilizing $lookup and $unwind operators), however could also be splendid when executed within the chosen exterior index.

Exceed Efficiency Limitations utilizing Rockset as an Exterior Index

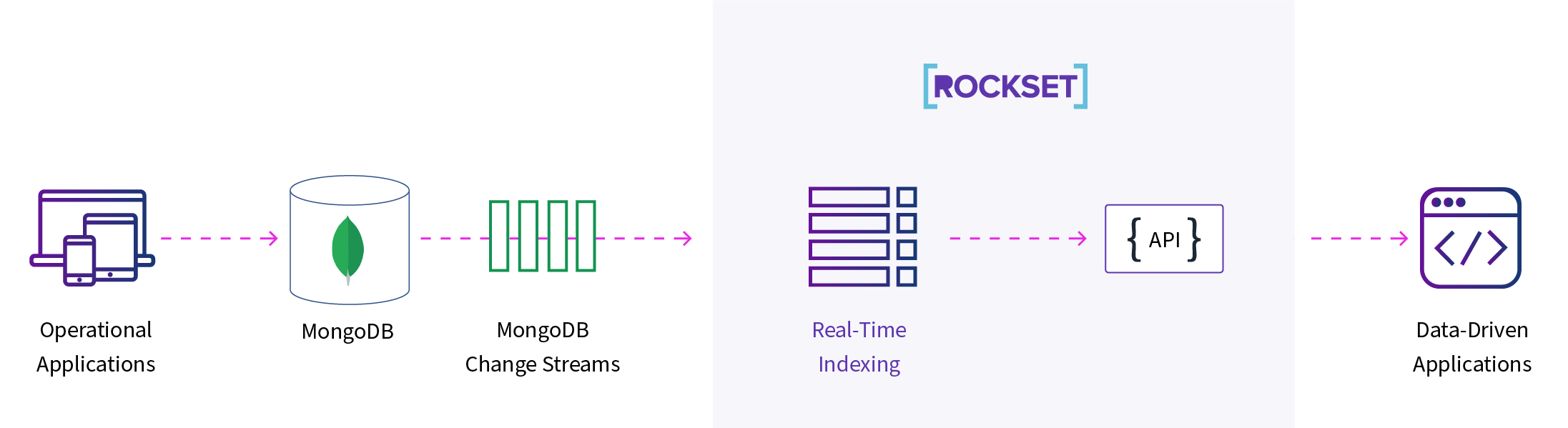

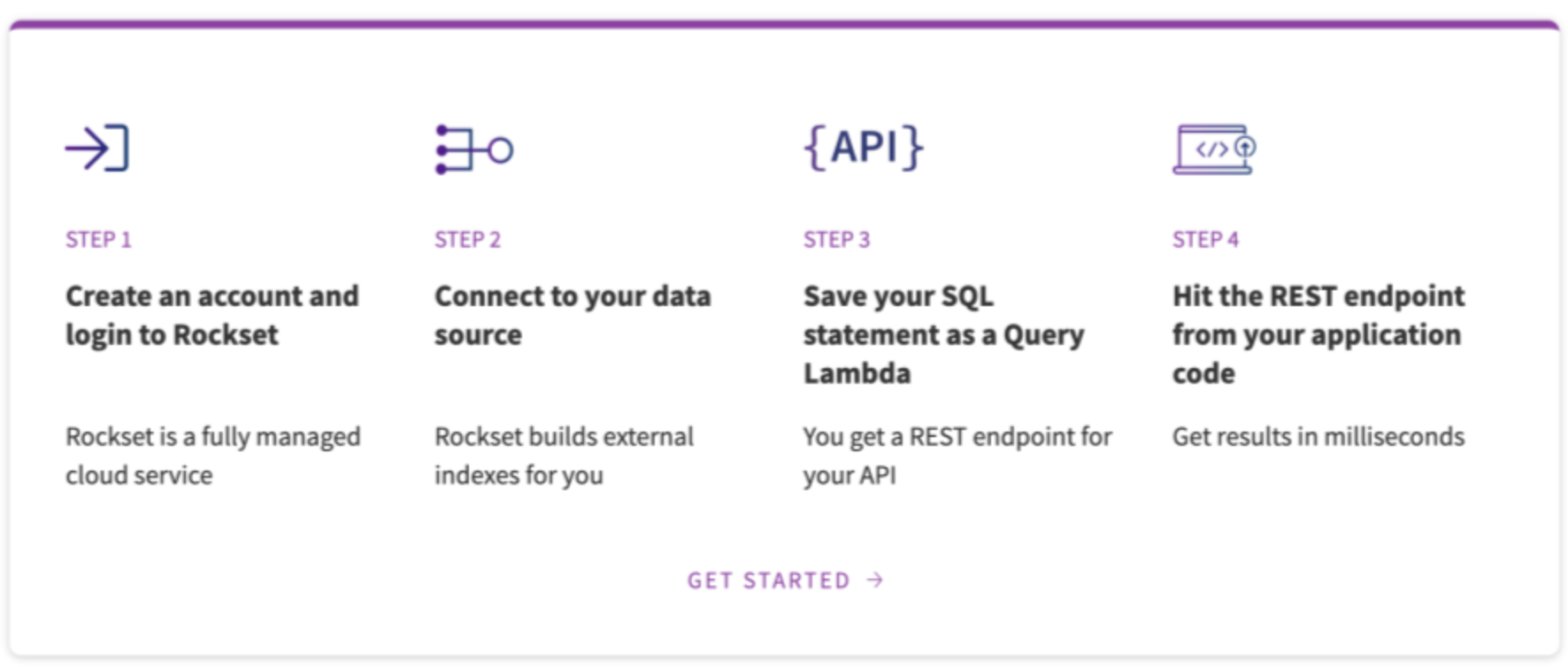

Right here at Rockset, we’ve partnered with MongoDB and constructed a completely managed connector with our real-time indexing know-how that lets you carry out quick JOINs and aggregations at scale. Rockset is a real-time serverless database which can be utilized as a pace layer on high of MongoDB Atlas, permitting you to carry out SQL aggregations and JOINs in real-time.

Utilizing our MongoDB integration, you will get arrange in minutes – merely click on and join Rockset along with your MongoDB collections by enabling correct learn permissions, and the remainder is mechanically accomplished for you. Rockset will then sync your information into our real-time database utilizing our schemaless ingest know-how, after which mechanically create indexes for you on each single subject in your assortment, together with nested fields. Moreover, Rockset can even mechanically keep up-to-date along with your MongoDB collections by syncing inside seconds anytime you replace your information.

As soon as your information is in Rockset, you’ll have entry to Rockset’s Converged Index™ know-how and question optimizer. Because of this Rockset permits full SQL help together with quick search, aggregations, and JOIN queries at scale. Rockset is purpose-built for complicated aggregations and JOINs on nested information, with no restrictions on overlaying indexes. Moreover, additionally, you will get sooner queries utilizing Rockset’s disaggregated Aggregator-Leaf-Tailer Structure enabling real-time efficiency for each ingesting and querying.

Allow Full SQL Assist for Aggregations and JOINs on MongoDB

Let’s re-examine our instance earlier the place we used the $lookup aggregation pipeline operator in MongoDB to simulate a SQL LEFT OUTER JOIN. We used this command to carry out the be a part of:

db.staff.combination([{

$lookup: {

from: "companies",

localField: "company",

foreignField: "name",

as: "employer"

}

}])

With full SQL help in Rockset, you’ll be able to merely use your acquainted SQL syntax to carry out the identical be a part of:

SELECT

e.electronic mail,

e.identify,

e.firm AS employer,

e.inventory,

e.location

FROM

staff e

LEFT JOIN

firms c

ON e.firm = c.identify;

Let’s take a look at one other instance aggregation in MongoDB the place we GROUP by two fields, COUNT the overall variety of related rows, after which SORT the outcomes:

db.rocks.combination([{

"$group": {

_id: {

color: "$color",

type: "$type"

},

count: { $sum: 1 }

}}, {

$sort: { "_id.type": 1 }

}])

The identical command could be carried out in Rockset utilizing the next SQL syntax:

SELECT

coloration,

kind,

COUNT(*)

FROM

rocks

GROUP BY

coloration,

kind

ORDER BY

kind;

Getting Began with Rockset on MongoDB

Lower load in your main MongoDB occasion by offloading costly operations to Rockset, whereas additionally enabling considerably sooner queries. On high of this, you’ll be able to even combine Rockset with information sources outdoors of MongoDB (together with information lakes like S3/GCS and information streams like Kafka/Kinesis) to hitch your information collectively from a number of exterior sources and question them without delay.

In case you’re concerned with studying extra, make sure you take a look at our full MongoDB.stay session the place we go into precisely how Rockset repeatedly indexes your information from MongoDB. You may as well view our tech speak on Scaling MongoDB to listen to about further methods for sustaining efficiency at scale. And everytime you’re able to strive it out your self, watch our step-by-step walkthrough after which create your Rockset account!