CynLr Technology is a tech company dealing in deep-tech robotics and cybernetics. It enables robots to become intuitive and capable of dynamic object manipulation with minimal training effort. CynLr has developed a visual object intelligence platform that interfaces with robotic arms to achieve the ‘Holy Grail of Robotics’: Universal Object Manipulation. This allows CynLr-powered arms to pick up unrecognised objects without recalibrating hardware, and it even works with mirror-finished objects (traditionally a hard obstacle for visual intelligence).

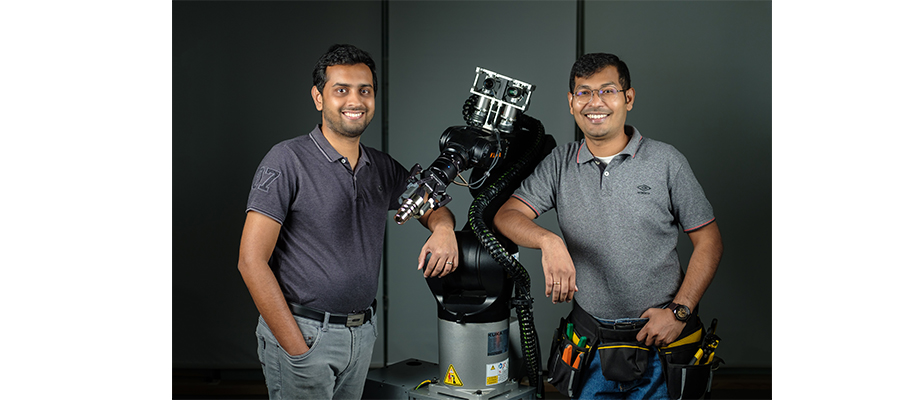

To know more about robotics and the company, Sakshi Jain, Sr. Sub Editor-ELE Times had an opportunity to interact with Nikhil Ramaswamy, Co-Founder & CEO and Gokul NA, Founder – Design, Product and Brand at CynLr Technology. They talked about robots and their ability to mimic the intuitiveness and range of the human hand. Excerpts.

ELE Times: How does your visual robot platform transform machines into aware robots?

Nikhil & Gokul: Today, most modern approaches in AI and machine vision technology used in robotics rely heavily on either presenting an object to a robotic arm in a predetermined manner or predicting object positions from static images, whether in 3D or any other format. However, this approach presents significant challenges, because an object’s orientation and the lighting conditions can greatly alter its colour and geometric shape as perceived by the camera. This becomes even more complicated when dealing with metal objects that have a mirror finish. As a result, traditional AI methods that rely on colour and shape for object identification lack universality. The intuitiveness of vision and object interaction in human beings is taken for granted. Most tasks that are innate for human beings remain difficult to replicate in automation systems, even in extremely controlled environments.

At CynLr, we work to bring intuitiveness to machines, the key to which lies in making machine vision as close as possible to human vision. Of course, in comparing it to human beings we are competing against millions of years of evolution. However, we have been able to decipher the fundamental layers of human and animal vision to create the foundations of our visual object intelligence technology. We use cutting-edge techniques such as Auto-Focus Liquid Lens Optics, Optical Convergence, Temporal Imaging, Hierarchical Depth Mapping, and Force-Correlated Visual Mapping in our hardware and algorithms. These AI and machine learning algorithms enable robotic arms to understand and generate rich visual representations, allowing them to manipulate and handle objects in any environment.

Currently, the manufacturing industry faces challenges in automating products with shorter life cycles. A change in model or variant means an overhaul of the entire automated line, because automation is part-specific. CynLr’s technology enables robots to become intuitive and capable of dynamic object manipulation with minimal training effort. The idea is to make one line capable of handling varied objects, freeing the automation industry from part-specific solutions alone. Whether it’s a packet of Lays chips, a metal part, or a mirror-finished spoon, the same robot can handle them all. These robots are future-proof and capable of performing a wide range of tasks. (You can refer to our demo videos: Untrained Dynamic Tracking & Grasping of Random Objects and Oriented Grasps and Picks of Untrained Random Objects.)

ELE Times: Highlight some key milestones achieved by CynLr in its mission to simplify automation and optimise manufacturing processes.

Nikhil & Gokul: One of CynLr’s major achievements is passing the litmus test for AI: recognition and isolation of mirror-finished/reflective objects. Our visual robots can recognise and isolate mirror-finished objects without any training, under varying lighting conditions. Our highly dynamic and adaptive product eliminates the need for customised solutions, sparing our customers from dealing with multiple technologies and complex engineering.

We have significant interests in collaboration with the US and EU. We have currently begun engagements for reference designing our tech stack to build advanced assembly automation with two of the five largest automotive vehicle manufacturers globally and a large component supplier from Europe. We are also entering new markets like the industrial kitchen and Advanced Driver Assistance Systems (ADAS) use cases.

ELE Times: How is your company planning to address the global challenge of part-mating and assembly automation?

Nikhil & Gokul: We are strategically addressing the global challenge of part-mating and assembly automation by leveraging our advanced visual intelligence technology. This cutting-edge approach combines computer vision, machine learning, and robotics to tackle the complexity of automating intricate part-mating and assembly processes, which have historically been difficult to automate. Our visual intelligence technology allows robots to accurately perceive and interpret spatial relationships between parts, enabling precise alignment and assembly.

We go beyond visual perception alone. As a deep-tech company, we also recognise that the successful automation of part-mating and assembly requires additional elements such as tactile feedback and knowledge of how to manipulate different components. By integrating tactile sensing capabilities and leveraging our expertise in robotics, we aim to create robots that can not only guide the assembly process visually but also interact with parts in a manner that emulates human-like dexterity and precision.

Our ultimate goal is to provide a holistic solution for part-mating and assembly automation, overcoming challenges related to complex spatial relationships, part geometry variations, and the need for meticulous manipulation. By automating these processes, we intend to empower manufacturers to achieve remarkable gains in efficiency, cost reduction, and production output.

We also collaborate closely with global customers and run pilots across different regions, including the US, Germany, and India. We aim to further validate and refine our technology to find real-world applications.

ELE Times: Highlight your new mission in automation.

Nikhil & Gokul: Our new mission in automation revolves around revolutionising the manufacturing industry through advanced robotics and artificial intelligence technologies. Below are some highlights:

- Automation Transformation: We aim to spearhead a transformative shift in manufacturing by automating processes that were previously considered non-automatable. Our mission is to enable robots to perform complex tasks with precision, efficiency, and adaptability.

- Addressing Industry Challenges: We are committed to tackling the challenges faced by manufacturers in part-mating, assembly, and other intricate processes. We aim to overcome the limitations of traditional automation methods by leveraging our expertise in visual intelligence, tactile feedback, and a comprehensive understanding of fundamental sciences.

- Universal Factories: CynLr envisions the establishment of universal factories, where robots can seamlessly adapt to different tasks and products without the need for specialised infrastructure or extensive reconfiguration. This approach simplifies factory operations, enhances versatility, and optimises logistics.

- Reduction of Manual Labour: With our visual intelligence technology, CynLr seeks to automate labour-intensive processes, reducing the reliance on manual labour and streamlining production lines. By automating tasks such as part-mating and assembly, we want to improve productivity, minimise errors, and free up human workers for more strategic and value-added activities.

- Global Impact: Our mission extends beyond regional boundaries. By collaborating with major OEM players in Europe and the US, and aiming to expand our business in India, we strive to have a global impact, transforming manufacturing industries worldwide.

Overall, our new mission in automation revolves around pushing the boundaries of what is considered automatable, simplifying factory operations, reducing manual labour, and driving innovation in the manufacturing industry on a global scale.

ELE Times: What are your plans for Indian markets?

Nikhil & Gokul: We feel that the Indian market is not yet ready for our solution. We need a space where customers can actively engage, invest their resources, and transform our technology into a practical and functional solution. This is precisely why we have built one of the most densely populated robotics labs focused on visual intelligence.

Although we possess the technological capabilities, without a viable customer solution any technology will remain underdeveloped. Therefore, a complete ecosystem is required for the success of a foundational technology like ours. We further aim to engage with customers, channel partners and academic institutions who can build on our machine vision stack and come up with viable solutions.

ELE Times: How does your visual object intelligence platform improve the ability to mimic the intuitiveness and range of the human hand?

Nikhil & Gokul: Vision technology is severely limited in robotics today. The intelligence and eyes needed for robots to adjust to different shapes and variations, and to adapt accordingly, are simply not there. So, we saw an opportunity to enable that technology by working closely with the problem.

Our visual intelligence platform helps robotic arms adapt to the various shapes, orientations and weights of the objects in front of them. We have developed a technology that can differentiate sight from vision and starts its algorithms from its hardware, using Auto-Focus Liquid Lens Optics, Optical Convergence, Temporal Imaging, Hierarchical Depth Mapping, and Force-Correlated Visual Mapping. It gives robots human-like vision and the flexibility to grasp even mirror-finished objects without any pre-training (a feat that current ML systems cannot achieve). CynLr’s visual robots can comprehend the features of an object and re-orient it based on the requirements. The AI and machine learning algorithms help robotic arms process the task even in an amorphous environment and align objects in the best way possible.

ELE Times: Share your views on the HW & SW Vision Platform and how it helps in the building of machines.

Nikhil & Gokul: The HW & SW Vision Platform is instrumental in building machines, as it provides them with perception, understanding, intelligent decision-making capabilities, automation, enhanced safety, and adaptability. By incorporating vision technologies, machines can effectively interact with their surroundings, analyse visual data, and make informed decisions, ultimately improving efficiency and expanding the possibilities of machine applications.

The HW component of the platform typically involves specialised hardware devices such as cameras, sensors, and image processors. These components capture visual data from the machine’s surroundings, convert it into digital information, and process it to extract relevant features and patterns. The SW aspect of the platform, on the other hand, involves the software algorithms and frameworks designed to analyse and interpret visual data. These algorithms perform tasks such as image recognition, object detection, segmentation, and tracking, enabling the machine to understand its surroundings and make intelligent decisions based on that information.