The history of machine learning reflects humanity's quest to create computers in our own image: to imbue machines with the ability to learn, adapt, and make intelligent decisions.

We're talking more about Machine Learning, but today we're going back in time. Right now we're in the information age, and many young people (myself included) can't imagine a society before phones, constant data collection, and the information economy.

Over decades of innovative research and technological breakthroughs, Machine Learning has progressed remarkably, from its early theoretical foundations to the transformative impact it now has on modern society. It's a journey that has reshaped industries, redefined human-computer interaction, and ushered in an era of unprecedented possibilities.

Alan Zenreich, Creative Black Book: Photography (1985)

To learn what Machine Learning is, you can check out What's Machine Learning?, the previous blog post in this series. There, I also define the different types of ML that are discussed further here. This is an overview, so if I missed your favorite ML history milestone, drop it in the comments!

Early Concepts and Foundations

You may think of AI and ML as very recent breakthroughs, but they have been in development longer than you might expect. And, like any great scientific achievement, it wasn't a single person writing code alone in a dark room 20 years ago: each person laid a stepping stone (shoulders of giants, etc. etc.). As soon as computation arose as a concept, people were already starting to wonder how it would compare to the most complex system we knew: our brains.

The Turing Test

Mathematician and pioneering computer scientist Alan Turing had a significant influence on the inception of machine learning. He proposed the Turing Test in his 1950 paper "Computing Machinery and Intelligence." It is a test of a machine's ability to exhibit human-like intelligence and behavior. The test is designed to assess whether a machine can imitate human conversation well enough that an evaluator cannot reliably distinguish between the machine and a human based solely on the responses they receive.

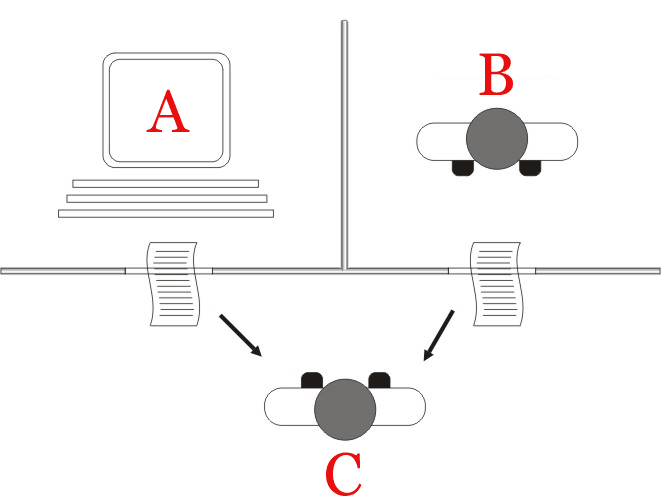

In the Turing Test (a setup often called the "imitation game"), a human evaluator engages in text-based conversations with both a human participant and a machine. The evaluator's goal is to determine which of the two participants is the machine and which is the human. If the evaluator cannot consistently distinguish between the machine and the human based on the conversation, the machine is said to have passed the Turing Test and demonstrated a level of artificial intelligence and conversational skill comparable to that of a human.

In the Turing Test, if the machine passes, the observer (C) cannot tell whether an output came from the machine (A) or the human participant (B).

However, the test is subjective. One person may believe a computer has passed, while another knows that a language model trained on a large amount of human language can simply reproduce the kinds of things humans say. We should also ask whether imitating human language is the same thing as (or the only marker for) intelligence, and whether having a sample size of one (ourselves) for examples of what we call intelligence makes us too narrow-minded about what counts as being on our level. Despite these criticisms, for 1950, when computation as we know it was still in its early stages, this thought experiment had a huge influence on attitudes toward a machine's ability not just to think, but to think as we do.

Worth a shot. Source: xkcd comics

Playing Checkers

Arthur Samuel, a pioneer in artificial intelligence and computer science, worked at IBM and is often credited with creating one of the earliest examples of a self-learning machine. Samuel's program, developed between 1952 and 1955, was implemented on an IBM 701 computer. The program employed a form of machine learning known as "self-play reinforcement learning." It played numerous games of checkers against itself, gradually refining its strategies through trial and error.

Arthur Samuel and his thinking machine. Source: IBM

As the program played more games, it accumulated a growing database of positions and the corresponding best moves. The program used this database to guide its decision-making in future games. Over time, the program improved its play, demonstrating the ability to compete with human players and even occasionally win against them.

Arthur Samuel's work on the Checkers-Playing Program marked a significant milestone in the early history of ML. It showcased the potential for computers to learn and adapt to complex tasks through iterative self-improvement. This project laid the groundwork for later developments in machine learning algorithms and techniques, and it highlighted practical applications of AI in domains beyond mere calculation and computation.

The Perceptron

The perceptron was one of the earliest neural network models and a significant development in the history of artificial intelligence and machine learning. It was introduced by Frank Rosenblatt in the late 1950s as an attempt to create a computational model that could mimic the basic functioning of a biological neuron. The perceptron was intended to be a machine, but it became best known as an algorithm for supervised learning of binary classifiers.

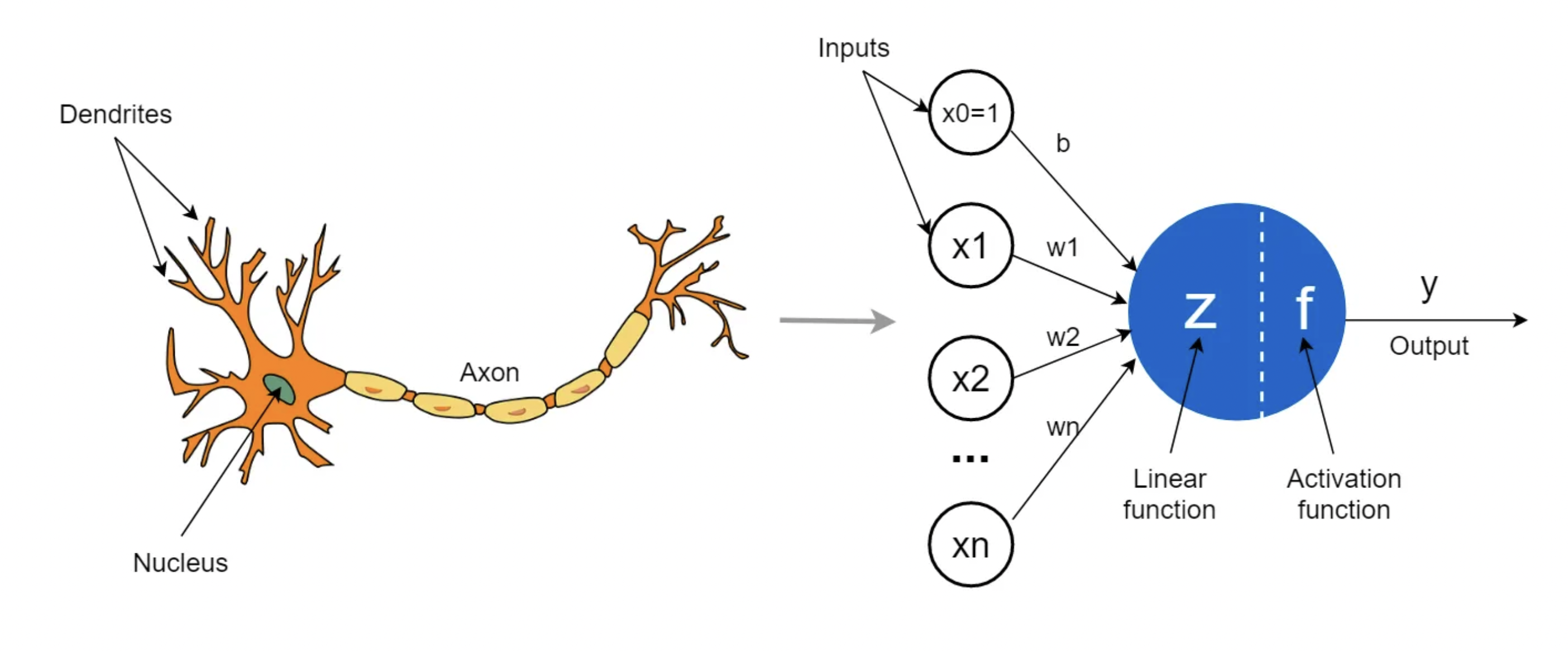

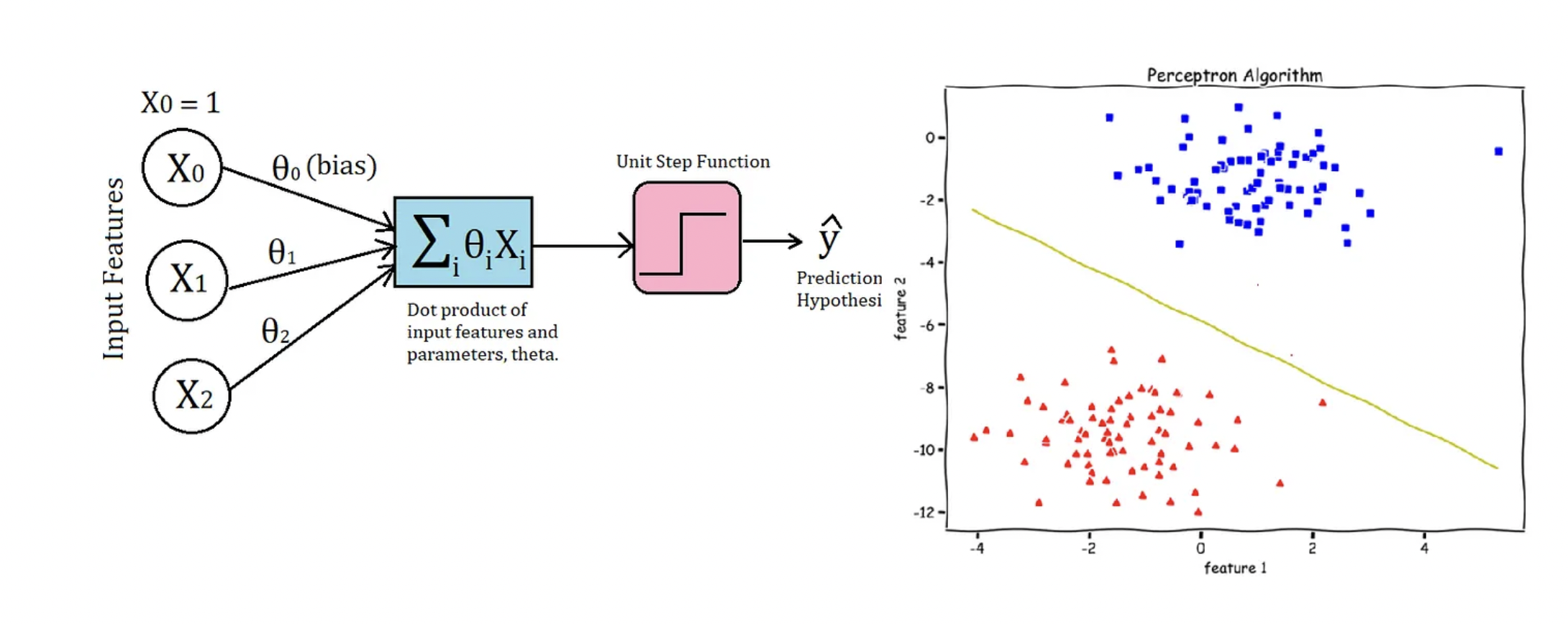

The perceptron is a simplified mathematical model of a single neuron. It takes input values, multiplies them by corresponding weights, sums them up, and then applies an activation function to produce an output. This output can be used as an input for further processing or decision-making.

The perceptron took inspiration from the form and function of the neuron. Source: Towards Data Science
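Written as a formula, the perceptron's output is a weighted sum of its inputs passed through an activation function $f$. The bias term $b$ and the step-function choice for $f$ are assumptions added here (the step function is the classic perceptron activation; the description above doesn't name one):

```latex
\hat{y} = f\left(\sum_{i=1}^{n} w_i x_i + b\right),
\qquad
f(z) = \begin{cases} 1 & \text{if } z > 0 \\ 0 & \text{otherwise} \end{cases}
```

For example, with weights $w = (2, 1)$, bias $b = -2$, and input $x = (1, 1)$, the weighted sum is $2 + 1 - 2 = 1 > 0$, so the perceptron outputs 1.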

The key innovation of the perceptron was its ability to adjust its weights based on input data and target output. This weight adjustment is achieved through a learning algorithm, often called the perceptron learning rule. The algorithm updates the weights to reduce the difference between the predicted output and the desired output, effectively allowing the perceptron to learn from its mistakes and improve its performance over time.
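A minimal sketch of the perceptron learning rule in Python, assuming a step activation, a bias term, and a learning rate `lr` (all helper names here are illustrative, not from the original text). For each training example, every weight moves by `lr * (target - prediction) * input`, so the weights only change when the perceptron gets an example wrong. Logical AND serves as a toy dataset because it is linearly separable:

```python
def predict(weights, bias, x):
    """Weighted sum of inputs plus bias, passed through a step activation."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Perceptron learning rule: nudge weights by lr * (target - prediction) * input."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)
            if error != 0:
                errors += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # every sample classified correctly: stop early
            break
    return weights, bias


# Logical AND is linearly separable, so the rule converges.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_and = [0, 0, 0, 1]
w, b = train_perceptron(X, y_and)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]
```

Because AND is linearly separable, training reaches an epoch with zero errors and stops early; the perceptron convergence theorem guarantees this for any linearly separable dataset.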

The perceptron laid the foundation for the development of neural networks, which are now a fundamental concept in machine learning. While the perceptron itself was a single-layer network, its structure and learning principles inspired the creation of more complex neural architectures like multi-layer neural networks. It also demonstrated the concept of machines learning from data and iteratively adjusting their parameters to improve performance. This marked a departure from traditional rule-based systems and introduced the idea of machines acquiring knowledge through exposure to data.

The perceptron algorithm is a binary classifier that works with linear decision boundaries. Source: Towards Data Science

However, the perceptron had limitations. It could only learn linearly separable patterns, which meant that it struggled with tasks that required nonlinear decision boundaries. Despite that, the perceptron's introduction and subsequent developments played a crucial role in shaping the trajectory of machine learning as a field. It laid the groundwork for exploring more complex neural network architectures, reinforcement learning principles, and the eventual resurgence of interest in neural networks in the 1980s and beyond.

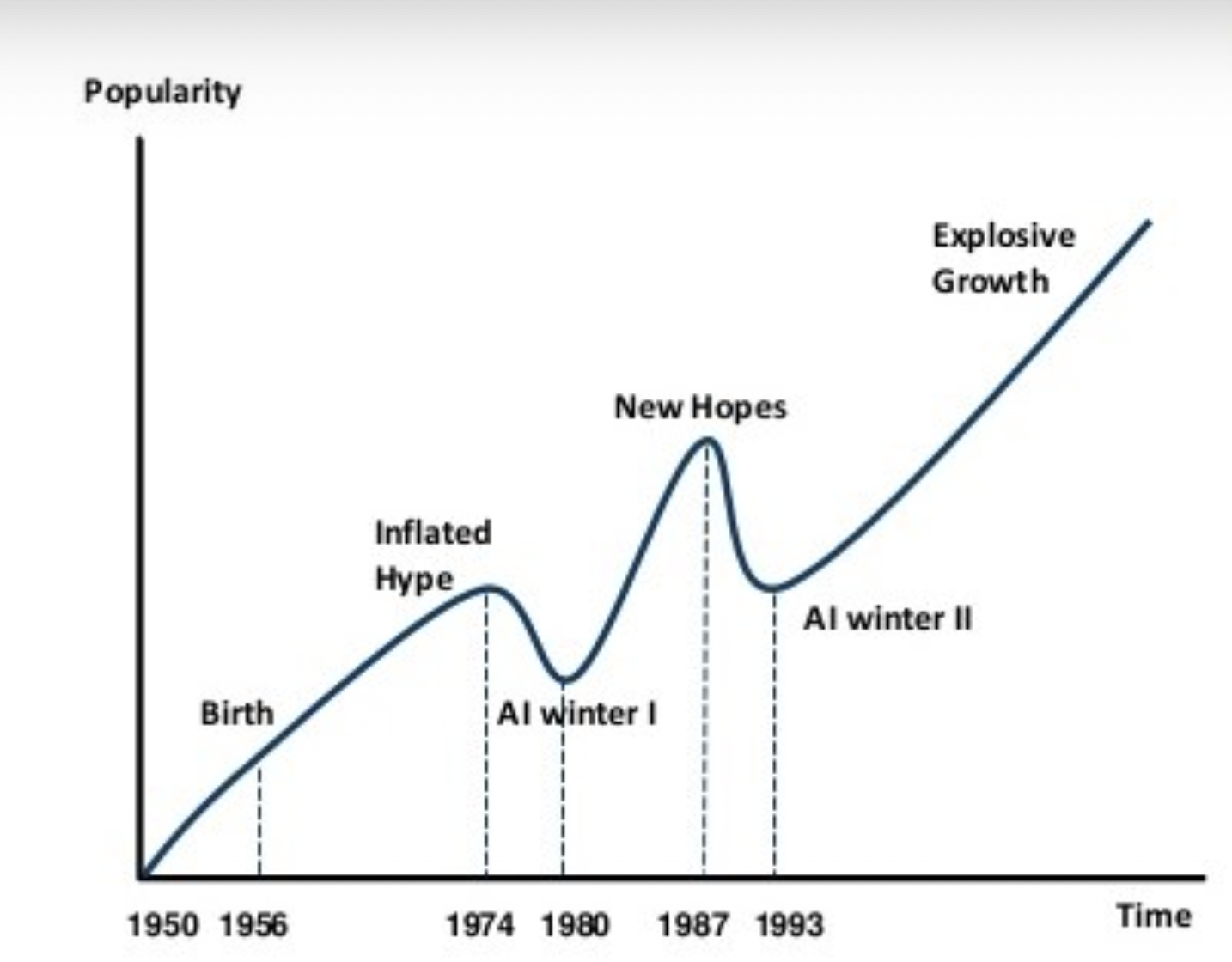

The AI Winters

The term "AI winter" refers to a period of reduced funding, waning interest, and slowed progress in the field of artificial intelligence (AI) and related areas, including machine learning. Such winters occurred when initial expectations for AI's development and capabilities were not met, leading to a decrease in research investment, skepticism about the feasibility of AI goals, and a general decline in the advancement of AI technologies.

First AI Winter (mid-1970s – early 1980s):

The initial wave of optimism about AI's potential in the 1950s and 1960s led to high expectations. However, the capabilities of the existing technology did not align with the ambitious goals set by AI researchers. The limited computational power available at the time hindered the ability to achieve significant breakthroughs. Research faced difficulties in areas such as natural language understanding, image recognition, and common-sense reasoning. Funding for AI research from both government and industry sources decreased due to unmet expectations and the perception that AI was overhyped. The first AI winter resulted in a reduced number of research projects, diminished academic interest, and a scaling back of AI-related initiatives.

AI winters were kind of like Ice Ages: the really dedicated developers kept things going until the climate of public sentiment changed.

Second AI Winter (late 1980s – 1990s):

The second AI winter was a reaction to earlier overoptimism and to promises of AI that remained unfulfilled after the first winter. The period was characterized by a perception that AI technologies were not living up to their potential and that the field was not delivering practical applications. Funding for AI research once again decreased as organizations became wary of investing in technologies that had previously failed to deliver substantial results. Many AI projects struggled with complexity, scalability, and real-world relevance. Researchers shifted their focus to narrower goals instead of pursuing general AI.

Balancing hype and output was a struggle for the field of AI. Source: Actuaries Digital

These periods were not entirely stagnant; they fostered introspection, refinement of approaches, and the development of more realistic expectations. The AI field eventually emerged from these winters with valuable lessons, renewed interest, and a focus on more achievable goals, paving the way for the growth of AI and machine learning in the 21st century.

Evolution of Algorithms and Techniques

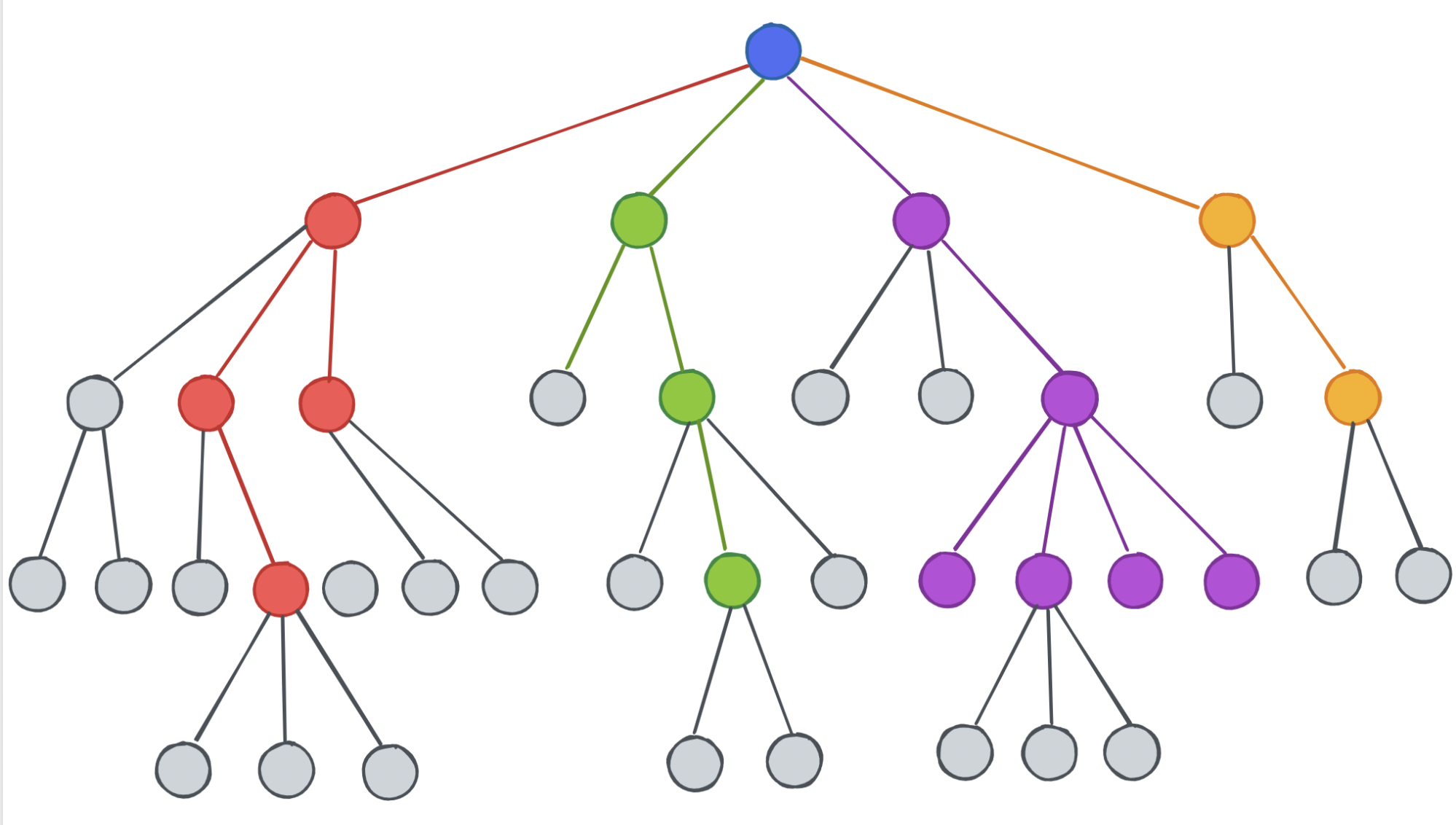

Decision Trees

During the early stages, as the field headed toward the first AI winter in the late 1960s and early 1970s, researchers turned their attention to specialized applications, giving rise to expert systems. These systems aimed to replicate the decision-making abilities of domain experts, using symbolic logic and rules to represent knowledge. By encoding expertise in the form of "if-then" rules, expert systems became adept at rule-based decision-making within well-defined domains. However, they did not perform as well when it came to handling uncertainty and adapting to new information.

In response, the concept of decision trees emerged as a promising alternative in the early 1970s. Decision trees offered a more data-driven and automated approach to decision-making. These structures, shaped like hierarchies or family trees, lay out a sequence of decisions and their corresponding outcomes, like a choose-your-own-adventure book. Each internal node represents a decision based on a specific attribute, while leaf nodes represent final outcomes or classifications. Building a decision tree involves selecting, at each node, the attribute that contributes most to accurate classification, and the resulting trees are interpretable and user-friendly.

A decision tree. Source: Geeky Codes
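The text doesn't say how the "best" attribute is chosen. One common criterion, assumed here, is information gain (used by Quinlan's ID3 algorithm): how much splitting on an attribute reduces the entropy of the class labels. A minimal sketch on a hypothetical six-row weather dataset:

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, labels, attribute):
    """Entropy before the split minus the size-weighted entropy of each branch."""
    branches = {}
    for row, label in zip(rows, labels):
        branches.setdefault(row[attribute], []).append(label)
    n = len(labels)
    remainder = sum(len(b) / n * entropy(b) for b in branches.values())
    return entropy(labels) - remainder


# Hypothetical toy data: should we play outside?
rows = [
    {"outlook": "sunny", "windy": False},
    {"outlook": "sunny", "windy": True},
    {"outlook": "overcast", "windy": False},
    {"outlook": "overcast", "windy": True},
    {"outlook": "rainy", "windy": False},
    {"outlook": "rainy", "windy": True},
]
labels = ["no", "no", "yes", "yes", "yes", "no"]

gains = {a: information_gain(rows, labels, a) for a in rows[0]}
best = max(gains, key=gains.get)
print(best, round(gains[best], 3))  # outlook 0.667
```

A tree builder using this criterion would split on `outlook` first, then repeat the same calculation inside the still-mixed `rainy` branch, where `windy` separates the remaining two rows perfectly.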

Decision trees showcased the potential of AI and machine learning to fuse human expertise and data-driven decision-making. They have remained relevant thanks to their interpretability and their usefulness in handling various types of data. As the AI landscape evolved, this early development provided a solid footing for the subsequent emergence of more sophisticated methods, like ensemble methods and deep learning, allowing for more nuanced and powerful machine learning models.

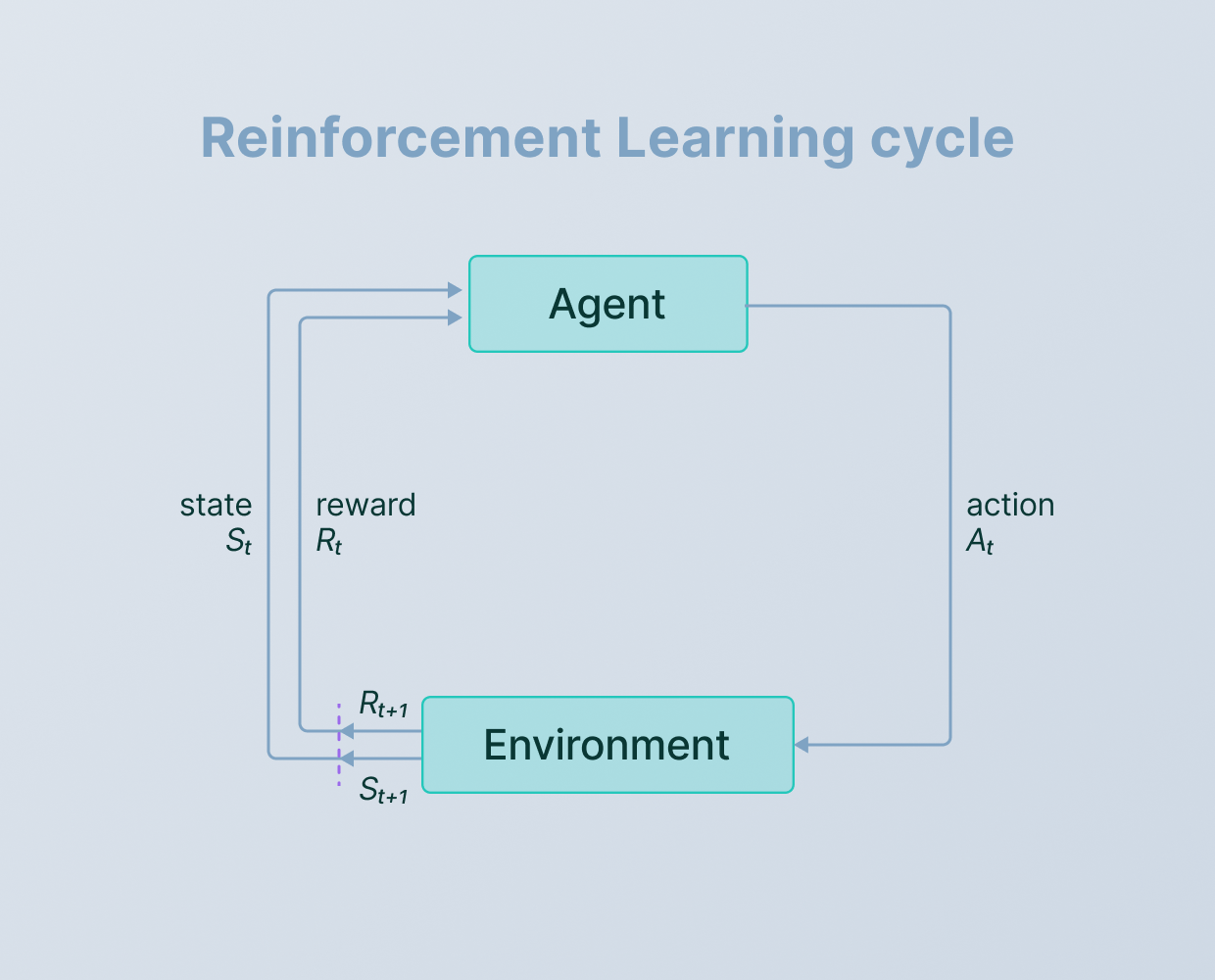

Reinforcement Learning and Markov Models

Reinforcement learning emerged as an alternative to traditional rule-based systems, emphasizing an agent's ability to interact with its environment and learn through a process of trial and error. Drawing inspiration from psychology and operant conditioning, it allowed agents to adjust their behavior based on feedback in the form of rewards or penalties. Arthur Samuel's work in the 1950s provided a practical illustration of reinforcement learning in action, demonstrating how an agent could improve its performance by playing games (like checkers) against itself and refining its strategies.

Source: V7 Labs

In parallel, Markov models, particularly Markov decision processes (MDPs), provided a useful mathematical framework for modeling sequential decision-making. These models are based on the idea that the future state of a system depends only on its present state and is independent of its past states (a Markov chain is a simple demonstration of this idea: only the current step matters for the next step). MDPs formalized how agents interact with environments, take actions, and receive rewards, forming the foundation for solving reinforcement learning problems. This framework paved the way for the development of algorithms like dynamic programming, Q-learning, and policy iteration, enabling agents to learn optimal strategies over time.
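To make this concrete, here is a minimal tabular Q-learning sketch on a hypothetical five-state corridor: the agent starts in state 0, can step left or right, and receives a reward of 1 only on reaching state 4. Each update uses only the current state, action, reward, and next state, which is the Markov property at work: `Q(s, a) += alpha * (reward + gamma * max Q(s', ·) - Q(s, a))`. The environment and the hyperparameters (`alpha`, `gamma`, `epsilon`, episode count) are illustrative assumptions, not values from the text:

```python
import random

N_STATES = 5          # states 0..4; reaching state 4 ends the episode
ACTIONS = (-1, +1)    # step left or step right


def step(state, action):
    """Deterministic corridor with walls at both ends; reward 1 for reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit, occasionally explore (trial and error).
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            # Bootstrapped target: depends only on the next state, not on the history.
            if done:
                target = reward
            else:
                target = reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: always step right toward the goal
```

Breaking ties at random matters here: with all values starting at zero, a fixed tie-break toward "left" would leave the agent pinned against the left wall for a very long time before it ever stumbled onto the reward.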

These techniques equipped researchers with the tools to address intricate decision-making dilemmas in changing and uncertain contexts. Combining these concepts with deep learning (known as deep reinforcement learning) has expanded machine learning's capabilities, allowing agents to make sense of complex inputs.

Rise of Big Data and Computational Power

Impact of Increased Computation

The rise in computational power available to developers significantly influenced machine learning's development during the late 20th century. This era was a notable turning point, with the increasing availability of more powerful hardware and the emergence of parallel processing techniques.

During this time, researchers and practitioners started to experiment with larger datasets and more complex models. Parallel processing techniques, such as the use of multi-core processors, paved the way for faster computations. However, it was the early 21st century that saw a more pronounced impact, as graphics processing units (GPUs) designed for graphics rendering were adapted for parallel computing in machine learning tasks.

The development of deep learning models gained momentum around the 2010s. These models demonstrated the potential of harnessing significant computational power for tasks like image recognition and natural language processing. With the availability of GPUs and distributed computing frameworks, researchers could train deep neural networks in a reasonable amount of time.

Cloud computing platforms and services, such as Amazon Web Services, Google Cloud Platform, and Microsoft Azure, opened up access to vast computational resources. This accessibility enabled individuals and organizations to leverage powerful computing for their machine learning projects without needing to invest in specialized and expensive hardware themselves.

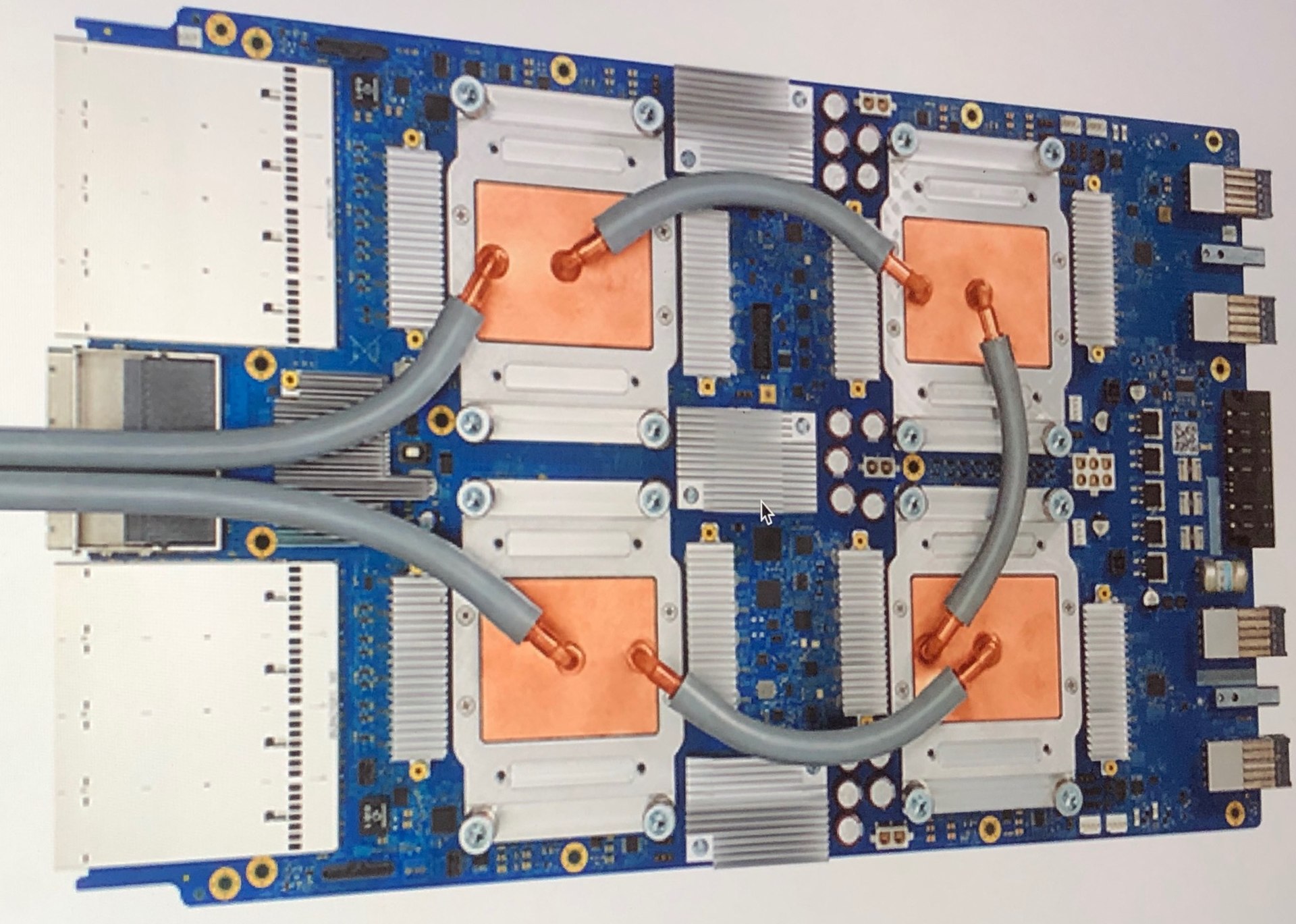

Tensor Processing Unit

As the years progressed, advancements in hardware architecture, such as the development of specialized hardware for machine learning tasks (like tensor processing units), continued to accelerate the impact of computational power on machine learning. These advancements allowed for the exploration of even more sophisticated algorithms, the handling of larger datasets, and the deployment of real-time applications.

Big Data

Machine learning initially focused on algorithms that could learn from data and make predictions. At the same time, the growth of the internet and digital technology brought on the era of big data: data that is too vast and complex for traditional methods to handle. Enter data mining, a field that focuses on sifting through large datasets to discover patterns, correlations, and valuable information. Data mining techniques, such as clustering and association rule mining, were developed to reveal hidden insights within this wealth of data.
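As a rough illustration of association rule mining, the sketch below computes two standard rule metrics over a hypothetical set of shopping baskets: support (how often an itemset appears) and confidence (how often the right-hand item appears when the left-hand item does). It only considers rules between pairs of items; real miners such as the Apriori algorithm search larger itemsets far more efficiently, so treat this brute-force version as an illustration of the idea:

```python
from itertools import combinations

# Hypothetical shopping baskets, one set of items per transaction.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]


def support(itemset):
    """Fraction of transactions that contain every item in `itemset`."""
    return sum(itemset <= t for t in transactions) / len(transactions)


def confidence(antecedent, consequent):
    """Estimated P(consequent | antecedent) from the transactions."""
    return support(antecedent | consequent) / support(antecedent)


def pair_rules(min_support, min_confidence):
    """Brute-force search over single-item rules {lhs} -> {rhs}."""
    items = sorted(set().union(*transactions))
    rules = []
    for a, b in combinations(items, 2):
        pair_support = support({a, b})
        if pair_support < min_support:
            continue
        for lhs, rhs in ((a, b), (b, a)):
            conf = confidence({lhs}, {rhs})
            if conf >= min_confidence:
                rules.append((lhs, rhs, pair_support, conf))
    return rules


for lhs, rhs, sup, conf in pair_rules(min_support=0.5, min_confidence=0.8):
    print(f"{lhs} -> {rhs}  support={sup:.2f}  confidence={conf:.2f}")
```

On this toy data the only rule that clears both thresholds is `beer -> diapers` (support 0.60, confidence 1.00); the reverse rule, `diapers -> beer`, has a confidence of only 0.75 because diapers also appear in a basket without beer.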

As computational power advanced, machine learning algorithms evolved to handle the challenges of big data. These algorithms could now process and analyze massive datasets efficiently. Integrating data mining techniques with machine learning algorithms enhanced their predictive power. For instance, combining clustering techniques with machine learning models could lead to better customer segmentation for targeted marketing.
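For the customer-segmentation example, a common clustering choice (assumed here; the text doesn't name an algorithm) is k-means, which alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. A minimal NumPy sketch on hypothetical customer data:

```python
import numpy as np


def kmeans(points, k, max_iterations=100, seed=0):
    """Plain k-means: alternate nearest-centroid assignment and centroid updates."""
    rng = np.random.default_rng(seed)
    # Initialize centroids by picking k distinct data points at random.
    centroids = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(max_iterations):
        # Assignment step: index of the nearest centroid (Euclidean distance) per point.
        distances = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        # Update step: move each centroid to the mean of its assigned points
        # (an empty cluster keeps its previous centroid).
        new_centroids = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # assignments have stabilized
        centroids = new_centroids
    return labels, centroids


# Hypothetical customers: [annual spend in $1,000s, store visits per month]
customers = np.array([
    [1.0, 2.0], [1.5, 1.8], [1.2, 2.2],   # low spend, infrequent visits
    [8.0, 8.0], [8.5, 9.0], [9.0, 8.2],   # high spend, frequent visits
])
segments, centers = kmeans(customers, k=2)
print(segments)
print(centers)
```

The cluster numbers themselves are arbitrary (either group may come out as 0); what matters is that the low-spend and high-spend customers land in different segments, which a marketing model could then treat differently.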

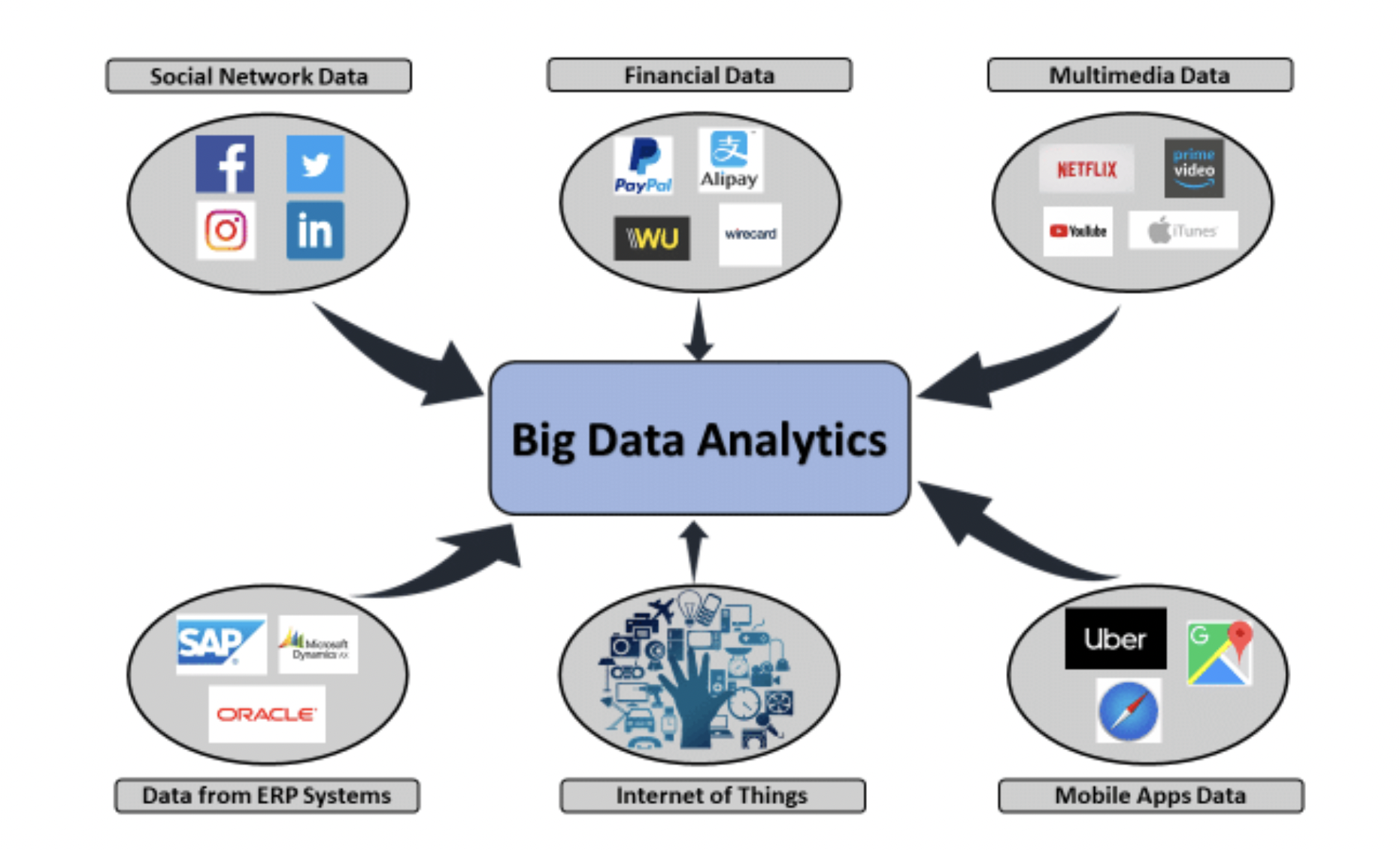

Data from all these sources advances ML algorithms every day. Source: Big Data: Ethics and Law, Rainer Lenz

Machine learning gets better the more it has a chance to learn, so models trained on massive datasets sourced from across the internet can become immensely powerful in a shorter amount of time thanks to their access to so much study material.

Neural Networks and Deep Learning Revolution

Early Research

Early neural network research, spanning the 1940s to the 1960s, was characterized by pioneering attempts to emulate the human brain's structure and capabilities through computational models. These initial endeavors laid the groundwork for modern neural networks and deep learning. However, these early efforts encountered significant challenges that tempered their progress and widespread adoption.

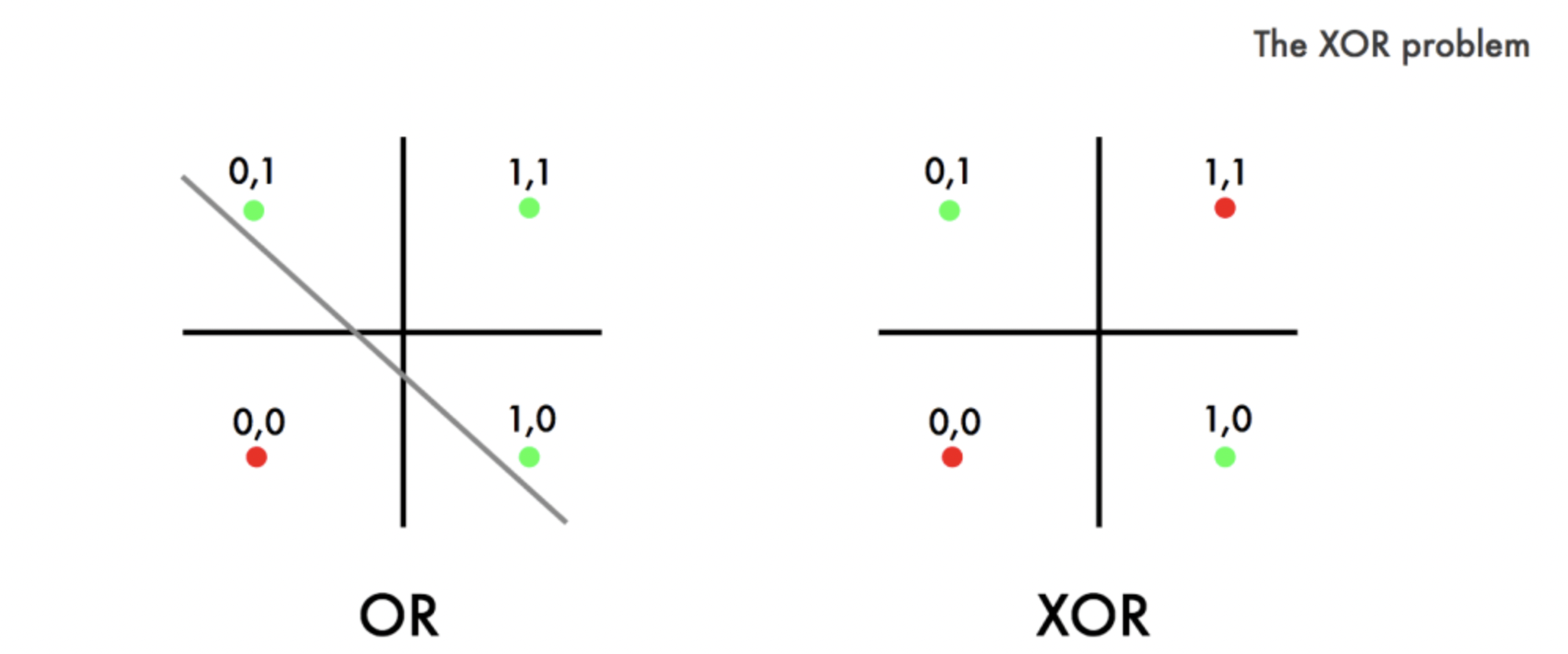

Early neural networks were mostly confined to single-layer structures, which curtailed their capabilities. The limitation to linearly separable problems, which the perceptron also faced, hindered their potential to handle real-world complexities involving nonlinear relationships. Training networks with more than one layer required manual weight adjustments, rendering the process laborious and time-intensive. This inability to handle nonlinear relationships is captured by the infamous XOR problem (an exclusive-or cannot be computed by a single layer), a seemingly simple binary classification task that eluded early neural networks.

Supply: dev.to
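The XOR limitation is easy to reproduce. The sketch below (hypothetical helper names, step activations assumed) trains a single perceptron with the perceptron learning rule on XOR and records its best accuracy, which never reaches 100% because no single straight line separates the two classes. It then hand-wires a two-layer network, an OR unit and an AND unit feeding one output unit, with weights chosen manually, much like the manual weight setting early researchers had to resort to:

```python
def step(z):
    """Step activation: 1 if the weighted sum is positive, else 0."""
    return 1 if z > 0 else 0


def best_perceptron_accuracy(labels, epochs=100, lr=1.0):
    """Train one perceptron on the four 2-bit inputs; return the best accuracy seen."""
    X = [(0, 0), (0, 1), (1, 0), (1, 1)]
    w, b = [0.0, 0.0], 0.0
    best = 0.0
    for _ in range(epochs):
        for x, target in zip(X, labels):
            error = target - step(w[0] * x[0] + w[1] * x[1] + b)
            w = [w[0] + lr * error * x[0], w[1] + lr * error * x[1]]
            b += lr * error
        correct = sum(step(w[0] * x[0] + w[1] * x[1] + b) == t for x, t in zip(X, labels))
        best = max(best, correct / len(X))
    return best


def xor_two_layer(x1, x2):
    """Hand-set weights: hidden units compute OR and AND; output is OR AND NOT AND."""
    h_or = step(x1 + x2 - 0.5)
    h_and = step(x1 + x2 - 1.5)
    return step(h_or - h_and - 0.5)


print(best_perceptron_accuracy([0, 1, 1, 0]))  # stays below 1.0 for XOR
print([xor_two_layer(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]])  # [0, 1, 1, 0]
```

The same training loop reaches 100% on AND, so the failure on XOR comes from the single-layer structure, not from the learning rule.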

Notably, early neural network research lacked a strong theoretical foundation, and the mechanisms underlying the success or failure of these models remained unknown. This lack of theoretical understanding hindered advancements and contributed to the waning interest in neural networks during the late 1960s and early 1970s.

The Backpropagation Algorithm

The Backpropagation algorithm emerged as a solution to the problems that plagued early neural network research, catalyzing the revival of interest in neural networks and ushering in the era of deep learning. This algorithm, which gained prominence in the 1980s but had its roots in the 1970s, addressed critical challenges that had impeded neural networks' progress and potential.

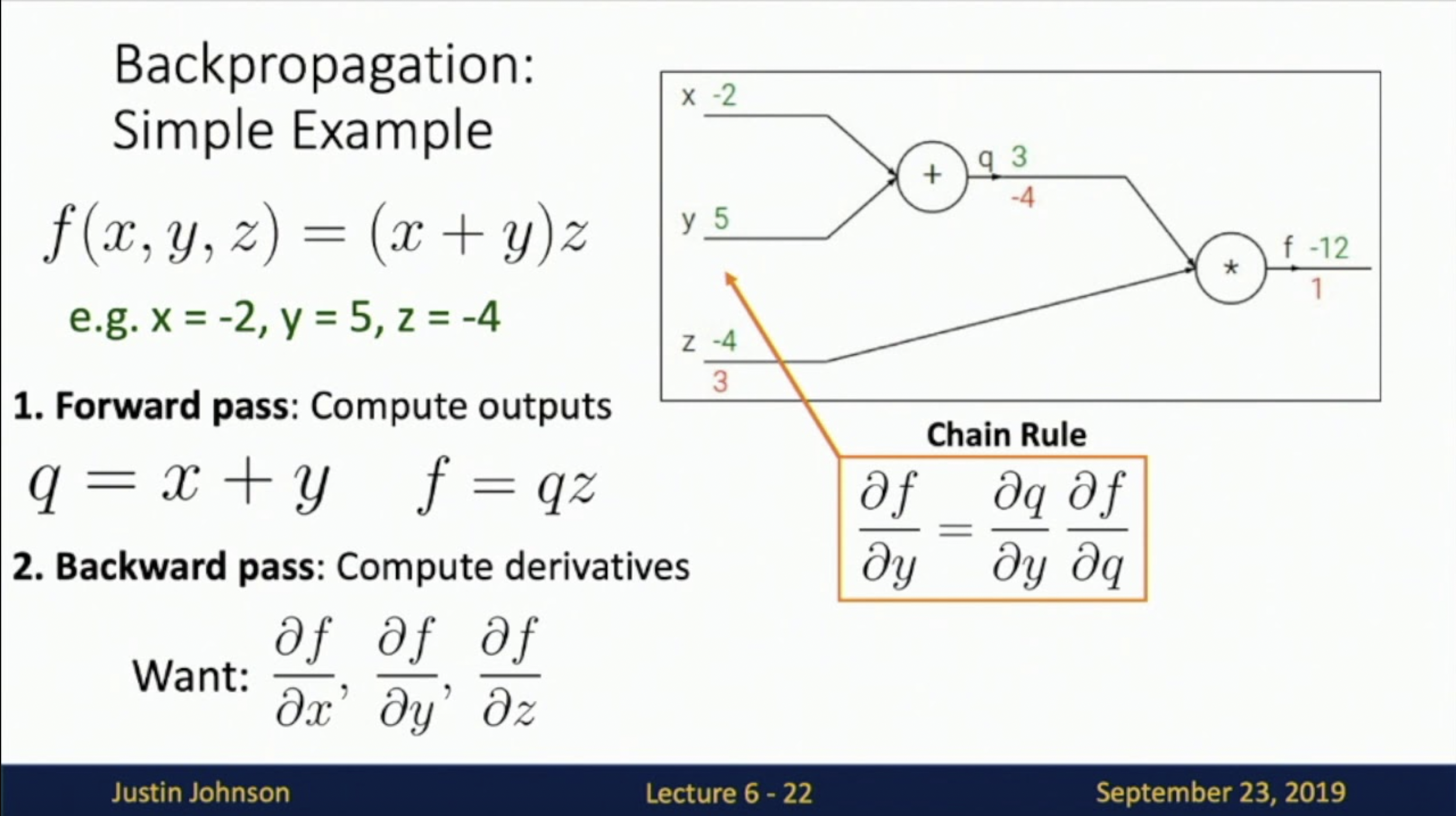

A major obstacle in training deep networks is the vanishing gradient problem. When calculating weight updates within the network, the change to each weight is proportional to the partial derivative of the error function with respect to that weight. When these gradients become so small that the weight change no longer has any real effect on the weight, you have a vanishing gradient problem. If that happens, the network pretty much stops learning, because the algorithm is no longer updating itself.

The Backpropagation algorithm provided a systematic mechanism to calculate these gradients in reverse order, enabling efficient weight updates from the output layer back to the input layer. This gave every layer, including the hidden layers, a signal to learn from, so the network could keep updating itself as it "learns." Backpropagation did not eliminate vanishing gradients on its own, however: because the gradient for an early layer is a product of many per-layer factors, it can still shrink toward zero in very deep networks, which remained a hurdle for years.
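A small numeric sketch of why gradients can vanish, assuming a deliberately simplified, hypothetical network: a chain of scalar sigmoid units with every weight set to 1. By the chain rule, the gradient of the output with respect to the input is a product of one local derivative per layer, and the sigmoid's derivative is never larger than 0.25, so the product shrinks at least geometrically with depth:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def input_gradient(depth, weight=1.0, x=0.0):
    """d(output)/d(input) for `depth` stacked units computing a = sigmoid(weight * a_prev)."""
    a = x
    grad = 1.0
    for _ in range(depth):
        a = sigmoid(weight * a)
        # Chain rule: multiply by this layer's local derivative, weight * sigmoid'(z),
        # where sigmoid'(z) = sigmoid(z) * (1 - sigmoid(z)) is at most 0.25.
        grad *= weight * a * (1.0 - a)
    return grad


for depth in (1, 5, 10, 20):
    print(f"depth={depth:2d}  gradient={input_gradient(depth):.3e}")
```

With 20 layers the gradient is already below 0.25^20 (about 9e-13), so weights near the input barely move during training.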

I haven't seen a differential equation since 2018. Source: Justin Johnson, University of Michigan
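Here is a minimal NumPy sketch of backpropagation training a small two-layer network on XOR, the problem a single perceptron cannot solve. The architecture (8 tanh hidden units and a sigmoid output), cross-entropy loss, learning rate, and epoch count are illustrative assumptions. The backward pass applies the chain rule layer by layer, starting at the output and reusing each layer's gradient to compute the one before it:

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_xor(hidden=8, lr=0.5, epochs=5000, seed=0):
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)
    W1, b1 = rng.normal(size=(2, hidden)), np.zeros(hidden)
    W2, b2 = rng.normal(size=(hidden, 1)), np.zeros(1)
    losses = []
    for _ in range(epochs):
        # Forward pass.
        h = np.tanh(X @ W1 + b1)
        p = sigmoid(h @ W2 + b2)
        losses.append(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))
        # Backward pass, output layer first.
        dz2 = (p - y) / len(X)              # d(loss)/d(output pre-activation), sigmoid + cross-entropy
        dW2, db2 = h.T @ dz2, dz2.sum(axis=0)
        dz1 = (dz2 @ W2.T) * (1 - h ** 2)   # chain rule through tanh: tanh'(z) = 1 - tanh(z)^2
        dW1, db1 = X.T @ dz1, dz1.sum(axis=0)
        # Gradient-descent update, applied after all gradients are computed.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    predictions = (sigmoid(np.tanh(X @ W1 + b1) @ W2 + b2) > 0.5).astype(int).ravel()
    return losses, predictions


losses, predictions = train_xor()
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(predictions.tolist())
```

The hidden-layer gradient `dz1` is computed from the output-layer gradient `dz2`; that backward reuse is what makes it practical to train the hidden weights that a single-layer perceptron lacks.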

The Backpropagation algorithm unlocked the potential to train deeper networks, a feat that had eluded early neural network efforts. By enabling gradients to propagate backward, Backpropagation allowed for the adjustment of weights in hidden layers, thus enabling the construction of multi-layer architectures. This breakthrough marked a significant departure from the shallow models of the past and led to the development of sophisticated architectures that showed exceptional promise in tasks like image recognition, natural language processing, and more.

Beyond overcoming specific challenges, the Backpropagation algorithm reignited interest in neural networks as a whole. Researchers realized that these models, empowered by Backpropagation, could capture intricate relationships and patterns within data. This resurgence formed the foundation of the deep learning revolution, a period of exponential growth in artificial intelligence driven by deep neural networks.

Deep Learning and Convolutional Neural Networks (CNNs)

The 2010s brought a wave of deep learning advances that substantially expanded the capabilities of neural networks. Standing out among these breakthroughs is the Convolutional Neural Network (CNN), which has revolutionized image analysis and the field of computer vision. CNNs are uniquely tailored to detect and interpret the intricate features intrinsic to images. They are so effective because they mirror the hierarchical structure and spatial correlations that underlie visual information.

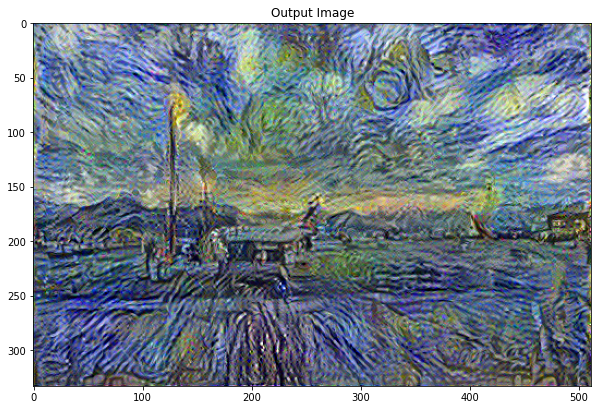

I actually did a project with this once in school using TensorFlow and Keras. I applied the texture of Van Gogh's Starry Night to a picture of a plane my brother had taken (thanks Kyle!), resulting in the image on the bottom:

The convolutional layers dissect patterns through filter operations, capturing elementary features like edges and textures with filters that are learned from data rather than designed by hand. Subsequent pooling layers downsize the feature maps while retaining vital information. The process culminates in fully connected layers that synthesize the learned features, yielding impressive results. CNNs have proven adept at image classification, object detection, image generation, transfer learning, and more.
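A minimal NumPy sketch of the two operations described above: a convolution followed by max pooling. The 3x3 filter is hand-picked to respond to vertical edges, purely for illustration; in a real CNN the filter values are learned during training, and libraries such as TensorFlow/Keras provide optimized layers for this. As in most deep learning libraries, the "convolution" here is technically cross-correlation (the kernel is not flipped), and the ReLU between the two steps is an assumption, since the text does not name an activation:

```python
import numpy as np


def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: slide the kernel over the image, no padding."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; trailing rows/columns that don't fit are dropped."""
    h, w = feature_map.shape[0] // size, feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))


# 6x6 toy image: dark left half, bright right half, i.e. one vertical edge.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
vertical_edge = np.array([[-1.0, 0.0, 1.0]] * 3)

features = conv2d(image, vertical_edge)       # 4x4 feature map
pooled = max_pool(np.maximum(features, 0.0))  # ReLU, then 2x2 max pooling -> 2x2
print(features)
print(pooled)
```

Each row of the feature map is `[0, 3, 3, 0]`: the filter fires only where its window straddles the edge. Pooling shrinks the 4x4 map to 2x2 while keeping the strong edge response.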

Other Important Milestones

- 1913: The Markov chain is discovered.

- 1951: The first neural network machine, the SNARC, is invented.

- 1967: The nearest neighbor algorithm is created, marking the beginning of basic pattern recognition.

- 1979: The neocognitron is introduced, a type of artificial neural network that would later inspire CNNs.

- 1985: NETtalk, a neural network that learns to pronounce words the same way babies do, is developed.

- 1997: IBM's Deep Blue defeats world chess champion Garry Kasparov.

- 2002: The machine learning software library Torch is released.

- 2009: ImageNet, a large visual database, is created. Many credit ImageNet as the catalyst for the AI boom of the 21st century.

- 2016: Google DeepMind's AlphaGo defeats Lee Sedol, becoming the first computer program to beat a 9-dan professional Go player without handicap.

- 2022: ChatGPT, a chatbot built on a large language model, is released for public use.

Stay tuned in the coming weeks for more about machine learning's applications and how this revolution will affect you!

Using ML in your work? Remember an earlier iteration of it? Have thoughts and opinions on differential equations? Let us know in the comments!