Artificial intelligence (AI) and machine learning (ML) are now considered a must for enhancing solutions in every market segment, thanks to their ability to continuously learn and improve both the experience and the output. As these solutions become widespread, there is an increasing need for secure learning and customisation. However, the cost of training AI for application-specific tasks is the primary challenge across the market.

What keeps AI from scaling further in the market is simply the cost of doing AI. If you look at GPT-3, a single training session takes weeks and costs $6 million! Every time a new model or a change is introduced, the count starts again from zero and the current model has to be retrained, which is quite expensive. A variety of things affect the training of these models, such as drift, but reducing the need to retrain for customisation would certainly help in managing retraining cost.

Consider the manufacturing industry, which is projecting losses of about $50 billion per year because of preventable maintenance issues. There is a downtime cost because the maintenance wasn’t done in time. Another staggering statistic is the loss of productivity in the US from people not coming to work because of preventable chronic diseases: $1.1 trillion. That’s just the productivity loss, not the cost of healthcare.

This could have been significantly reduced by more capable and cost-effective monitoring via digital health. There is therefore a real need for real-time AI close to the device to help learn, predict, and correct issues, and to proactively schedule maintenance.

AI is fueled by data, so one needs to consider the amount of data being generated by all these devices. Let us consider just one car. A single car generates over a terabyte of data per day. Imagine the cost of actually learning from it. Imagine how many cars are out there generating that kind of data. What does it do to the network? While not all of that data goes to the cloud, a lot of it is being stored somewhere.
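To put the terabyte-per-day figure in perspective, here is a rough back-of-the-envelope calculation. It assumes a decimal terabyte, data spread evenly across the day, and a purely hypothetical fleet of one million cars; none of these assumptions come from the interview.

```python
# Back-of-the-envelope estimate: what does 1 TB per car per day mean as a data rate?
# Assumptions (not from the article): decimal terabyte, evenly spread traffic,
# and a hypothetical fleet size chosen only for illustration.

BYTES_PER_TB = 10**12
SECONDS_PER_DAY = 24 * 60 * 60


def sustained_rate_mbit_s(bytes_per_day: float) -> float:
    """Average bit rate (Mbit/s) needed to move `bytes_per_day` within one day."""
    return bytes_per_day * 8 / SECONDS_PER_DAY / 1e6


def fleet_volume_pb_per_day(cars: int, bytes_per_car_per_day: float) -> float:
    """Total daily data volume for a fleet, in petabytes."""
    return cars * bytes_per_car_per_day / 10**15


FLEET_SIZE = 1_000_000  # hypothetical

per_car_rate = sustained_rate_mbit_s(BYTES_PER_TB)
fleet_volume = fleet_volume_pb_per_day(FLEET_SIZE, BYTES_PER_TB)

print(f"One car: about {per_car_rate:.1f} Mbit/s sustained")
print(f"{FLEET_SIZE:,} cars: about {fleet_volume:,.0f} PB per day")
```

Under these assumptions a single car would need a sustained link of roughly 93 Mbit/s just to ship its raw data, which is why processing close to the device becomes attractive.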

These are just a few of the opportunities, or problems, that AI can solve, but also of the challenges that are preventing AI from scaling to deliver those solutions. The real questions can be framed as follows:

• How do we reduce the latency and therefore improve the responsiveness of the system?

• How do we actually make these devices scale? How do we make them cost-effective?

• How do we ensure privacy and security?

• How do we tackle network congestion caused by the large amount of data generated?

The solution

A lot of factors affect the latency of a system, for example hardware capacity, network latency, and the use of large amounts of data. Devices that embed AI also have self-learning abilities, based on innovative time-series data handling for predictive analysis. Predictive maintenance is an important factor in any manufacturing industry, as most industries are now turning to robotic assembly lines. In-cabin experience, for example, utilises a similar AI-enabled environment, even for automated operation of autonomous vehicles (AVs). Sensing vital signs, and predicting and analysing medical data, is an important health and wellness application example. Security and surveillance, too, are now turning towards AI-enabled security cameras for continuous surveillance.

“The more intelligent devices you make, the greater the growth of the overall intelligence.”

The solution to latency lies in a distributed AI computing model. A strong component of that model is the ability to embed edge AI devices with enough performance to run the necessary AI processing. More importantly, we need the ability to learn on the device to allow for secure customisation, which can be achieved by making these systems event-based.

This reduces the amount of data, and eliminates sensitive data, being sent to the cloud, thereby reducing network congestion and cloud computation and improving security. It also provides real-time responses, which makes timely action possible. Such devices become compelling enough for people to buy, because it’s not only about the performance but also about how cost-effective you make it.
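The data reduction that event-based operation brings can be illustrated with a simple send-on-change filter. This is a simplified stand-in for the idea, not a description of how any particular neural processor implements it; the function name `to_events`, the threshold, and the sample readings are all hypothetical.

```python
from typing import Iterable, List, Tuple


def to_events(samples: Iterable[float], threshold: float) -> List[Tuple[int, float]]:
    """Keep a sample only if it differs from the last reported value by more than `threshold`."""
    events: List[Tuple[int, float]] = []
    last_sent = None
    for index, value in enumerate(samples):
        if last_sent is None or abs(value - last_sent) > threshold:
            events.append((index, value))
            last_sent = value
    return events


# Hypothetical temperature trace: mostly steady, with one short excursion.
readings = [20.0, 20.1, 20.0, 20.1, 25.3, 25.4, 25.3, 20.2, 20.1, 20.0]

events = to_events(readings, threshold=1.0)
reduction = 1 - len(events) / len(readings)

print(f"Events to transmit: {events}")
print(f"Samples suppressed: {reduction:.0%}")
```

Only the first reading and the two significant changes leave the device in this sketch; the steady-state samples stay local, which is where the savings in bandwidth and cloud computation would come from.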

Neural devices are generally trained in one of two ways: either using machine-fed data or using spikes that mimic the functionality of spiking neural networks. This puts a heavy load on the system.

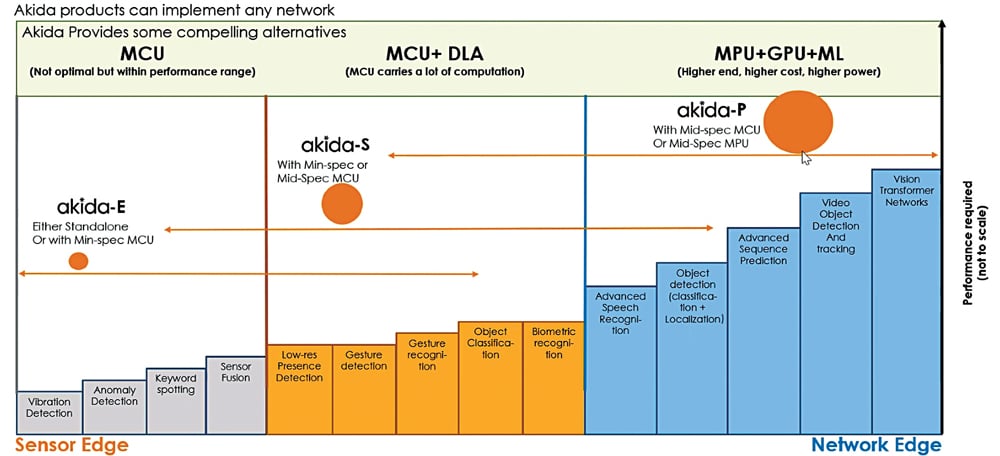

A neural system, on the other hand, requires an architecture that can accelerate all types of neural networks, be it a convolutional neural network (CNN), a deep neural network (DNN), a spiking neural network (SNN), or even vision transformers or sequence prediction.

Therefore, using a stateless architecture in distributed systems can help reduce the load on servers and the time it takes for the system to store and retrieve data. A stateless architecture enables applications to remain responsive and scalable with a minimal number of servers, as it doesn’t require applications to keep a record of sessions.
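A minimal sketch of what stateless means in practice: every request carries all the context the handler needs, so no session record has to be stored or looked up. The `Request` fields, the `handle` function, and the device name are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Request:
    """All the context needed to answer travels with the request itself."""
    device_id: str
    window: List[float]  # recent sensor readings supplied by the caller
    limit: float         # alert threshold supplied by the caller


def handle(request: Request) -> dict:
    """Stateless handler: the result depends only on the request contents."""
    mean = sum(request.window) / len(request.window)
    return {
        "device_id": request.device_id,
        "mean": mean,
        "alert": mean > request.limit,
    }


req = Request(device_id="pump-7", window=[0.5, 1.5, 2.5], limit=1.0)

first = handle(req)
second = handle(req)  # could run on a different server with the same result

print(first)
print(first == second)
```

Because the handler keeps nothing between calls, any server instance can answer any request, which is what lets the system scale with few servers.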

Using direct memory access (DMA) controllers can boost responsiveness as well. This hardware device allows input-output devices to access the memory directly, with less participation from the processor. It’s like dividing the work among people to boost the overall speed, and that’s exactly what happens here.

Intel Movidius Myriad X, for example, is a vision processing unit with a dedicated neural compute engine that interfaces directly with a high-throughput intelligent memory fabric to avoid any memory bottleneck when transferring data. The Akida IP Platform by BrainChip also utilises DMA in a similar manner. It also has a runtime manager that manages all operations of the neural processor with full transparency and can be accessed via a simple API.

Other features of these platforms include multi-pass processing, which means processing multiple layers at a time, making the processing very efficient. Another capability is smaller-footprint integration: using fewer nodes in a configuration and moving from parallel execution to sequential execution. This adds latency.

But a huge amount of that latency comes from the fact that the CPU gets involved every time a layer comes in. As the device processes multiple layers at a time, and the DMA manages all activity itself, latencies are significantly reduced.
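The effect of CPU involvement on latency can be sketched with a toy model in which each pass through the accelerator costs one fixed CPU intervention. The layer count, per-layer compute time, and overhead below are invented numbers for illustration only, not measurements of any real device.

```python
import math


def inference_latency_us(
    num_layers: int,
    compute_us_per_layer: float,
    cpu_overhead_us: float,
    layers_per_pass: int,
) -> float:
    """Total latency when the CPU intervenes once per pass of `layers_per_pass` layers."""
    passes = math.ceil(num_layers / layers_per_pass)
    return num_layers * compute_us_per_layer + passes * cpu_overhead_us


# Hypothetical network: 20 layers, 50 us of compute each, 100 us per CPU intervention.
layer_by_layer = inference_latency_us(20, 50, 100, layers_per_pass=1)
multi_layer = inference_latency_us(20, 50, 100, layers_per_pass=5)

print(f"CPU involved at every layer: {layer_by_layer:.0f} us")
print(f"Five layers per pass:        {multi_layer:.0f} us")
```

In this toy model the compute time is identical in both cases; the whole difference comes from how often the CPU has to step in.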

Consider an AV, which requires data from various sensors to be processed at the same time. The TDA4VM from Texas Instruments Inc. comes with a dedicated on-chip deep-learning accelerator that allows the vehicle to perceive its environment by collecting data from around four to six cameras, a radar, a lidar, and even an ultrasonic sensor. Similarly, the Akida IP Platform can run larger networks on a smaller configuration simultaneously.

A glimpse into the market

These devices have enormous scope in the market. Because they are event-based devices, each has its own application sector. For example, Google’s Tensor processing units (TPUs), which are application-specific integrated circuits (ASICs), are designed to accelerate deep learning workloads in Google’s cloud platform. TPUs deliver up to 180 teraflops of performance, and have been used to train large-scale machine learning models like Google’s AlphaGo AI.

Similarly, Intel’s Nervana neural network processor is an AI accelerator designed to deliver high performance for deep learning workloads. The processor features a custom ASIC architecture optimised for neural networks, and has been adopted by companies like Facebook, Alibaba, and Tencent.

Qualcomm’s Snapdragon neural processing engine AI accelerator is designed for use in mobile devices and other edge computing applications. It features a custom hardware design optimised for machine learning workloads, and can deliver up to 3 teraflops of performance, making it suitable for on-device AI inference tasks.

Several other companies have already invested heavily in designing and producing neural processors, which are being adopted in the market across a wide range of industries. As AI, neural networks, and machine learning become more mainstream, the market for neural processors is expected to continue to grow.

In conclusion, the future of neural processors in the market is promising, although many factors may affect their growth and evolution, including new technological developments, government regulations, and even customer preferences.

This article, based on an interview with Nandan Nayampally, Chief Marketing Officer, BrainChip, has been transcribed and curated by Jay Soni, an electronics enthusiast at EFY.

Nandan Nayampally is Chief Marketing Officer at BrainChip