Executives are right to be concerned about the accuracy of the AI models they put into production and about tamping down on hallucinating ML models. But they should be spending as much time, if not more, addressing questions over the ethics of AI, particularly around data privacy, consent, transparency, and risk.

The sudden popularity of large language models (LLMs) has added rocket fuel to artificial intelligence, which was already moving forward at an accelerated pace. But even before the ChatGPT revolution, companies were struggling to come to grips with the need to build and deploy AI applications in an ethical manner.

While awareness is building over the need for AI ethics, there’s still an enormous amount of work to do by AI practitioners and companies that want to adopt AI. Looming regulation in Europe, via the EU AI Act, only adds to the pressure on executives to get ethics right.

The level of awareness of AI ethics issues is not where it needs to be. For example, a recent survey by Conversica, which builds custom conversational AI solutions, found that 20% of business leaders at companies that use AI “have limited or no knowledge” about their company’s policies for AI in terms of security, transparency, accuracy, and ethics, the company says. “Even more alarming is that 36% claim to be only ‘somewhat familiar’ with these issues,” it says.

Comedian, actor, and author Sarah Silverman is suing OpenAI (Featureflash Photo Agency/Shutterstock)

There was some good news last week from Thomson Reuters and its “Future of Professionals” report. It found, not surprisingly, that many professionals (45%) are eager to leverage AI to increase productivity, boost internal efficiencies, and improve client services. But it also found that 30% of survey respondents said their biggest concerns with AI were around data security (15%) and ethics (15%). Some folks are paying attention to the ethics question, which is good.

However questions round consent present no indicators of being resolved any time quickly. LLMs, like all machine studying fashions, are educated on knowledge. The query is: who’s knowledge?

The Internet has traditionally been a fairly open place, with a laissez-faire approach to content ownership. However, with the advent of LLMs that are trained on huge swaths of data scraped off the Internet, questions about ownership have become more acute.

The comedian Sarah Silverman has generated her share of eyerolls during her standup routines, but OpenAI wasn’t laughing after Silverman sued it for copyright infringement last month. The lawsuit hinges on ChatGPT’s capability to recite large swaths of Silverman’s 2010 book “The Bedwetter,” which Silverman alleges could only be possible if OpenAI trained its AI on the contents of the book. She did not give consent for her copyrighted work to be used that way.

Google and OpenAI have also been sued by Clarkson, a “public interest” law firm with offices in California, Michigan, and New York. The firm filed a class-action lawsuit in June against Google after the Web giant made a privacy policy change that, according to an article in Gizmodo, explicitly gives itself “the right to scrape just about everything you post online to build its AI tools.” The same month, it filed a similar suit against OpenAI.

The lawsuits are part of what the law firm calls “Together on AI.” “To build the most transformative technology the world has ever known, an almost inconceivable amount of data was captured,” states Ryan J. Clarkson, the firm’s managing partner, in a June 26 blog post. “The vast majority of this information was scraped without permission from the personal data of essentially everyone who has ever used the internet, including children of all ages.”

Google Cloud says it doesn’t use its enterprise customers’ data to train its AI models, but its parent company does use consumer data to train its models (Michael Vi/Shutterstock)

Clarkson wants the AI giants to pause AI research until rules can be hammered out, which doesn’t appear to be forthcoming. If anything, the pace of R&D on AI is accelerating, as Google’s cloud division this week rolled out a host of enhancements to its AI offerings. As it helps enable companies to build AI, Google Cloud is also eager to help its enterprise customers address ethics challenges, said June Yang, Google Cloud’s vice president of Cloud AI and industry solutions.

“When meeting with customers about generative AI, we’re increasingly asked questions about data governance and privacy, security and compliance, reliability and sustainability, safety and accountability,” Yang said in a press conference last week. “These pillars are really our cornerstone of our approach to enterprise readiness.

“When it comes to data governance and privacy, we start with the premise that your data is your data,” she continued. “Your data includes input prompt, model output, and training data, and more. We do not use customers’ data to train our own models. And so our customers can use our services with confidence knowing their data and their IP [intellectual property] are protected.”

Consumers have traditionally been the loudest when it comes to complaining about abuse of their data. But enterprise customers are also starting to sound the alarm over the data consent issue, since it was discovered that Zoom had been collecting audio, video, and call transcript data from its video-hosting customers and using it to train its AI models.

Without regulations, larger companies will be free to continue to collect vast amounts of data and monetize it however they like, says Shiva Nathan, the CEO and founder of Onymos, a provider of a privacy-enhanced Web application framework.

“The larger SaaS providers will have the power dynamic to say, you know what, if you want to use my service because I’m the number one provider in this particular space, I will use your data and your customer’s data as well,” Nathan told Datanami in a recent interview. “So you either take it or leave it.”

Data regulations, such as the EU’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), have helped to level the playing field when it comes to consumer data. Almost every website now asks for consent from users to collect data, and gives users the option to reject sharing their data. Now with the EU AI Act, Europe is moving forward with regulation on the use of AI.

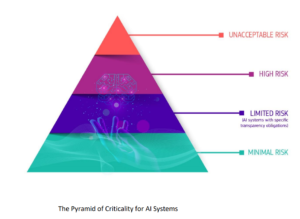

The EU AI Act, which is still being hammered out and could possibly become law in 2024, would implement several changes, including restricting how companies can use AI in their products; requiring AI to be implemented in a safe, legal, ethical, and transparent manner; forcing companies to get prior approval for certain AI use cases; and requiring companies to monitor their AI products.

While the EU AI Act has some drawbacks in terms of addressing cybercrime and LLMs, it’s overall an important step forward, says Paul Hopton, the CTO of Scoutbee, a German developer of an AI-based knowledge platform. Moreover, we shouldn’t fear regulation, he said.

“AI regulation will keep coming,” Hopton told Datanami. “Anxieties around addressing misinformation and mal-information related to AI aren’t going away any time soon. We expect regulations to rise regarding data transparency and consumers’ ‘right to information’ on an organization’s tech records.”

Businesses should take a proactive role in building trust and transparency in their AI models, Hopton says. In particular, emerging ISO standards, such as ISO 23053 and ISO 42001 or ones similar to ISO 27001, will help guide businesses in terms of building AI, assessing the risks, and talking to users about how the AI models are developed.

“Use these standards as a starting place and set your own company policies that build off those standards on how you want to use AI, how you will build it, how you will be transparent in your processes, and your approach to quality control,” Hopton said. “Make these policies public. Regulations tend to focus on reducing carelessness. If you identify and set clear guidelines and safeguards and give the market confidence in your approach to AI now, you don’t need to be afraid of regulation and will be in a much better place from a compliance standpoint when legislation becomes more stringent.”

Companies that take positive steps to address questions of AI ethics will not only gain more trust from customers but could also help forestall government regulation that’s potentially more stringent, he says.

“As scrutiny over AI grows, voluntary certifications of organizations following clear and accepted AI practices and risk management will provide more confidence in AI systems than rigid legislation,” Hopton said. “The technology is still evolving.”
