The opportunity for machine learning and AI in manufacturing is immense. From better alignment of production with consumer demand to improved process control, yield prediction, defect detection, predictive maintenance, shipping optimization, and much more, ML/AI is poised to transform how manufacturers run their businesses, making these technologies a principal area of focus in Industry 4.0 initiatives.

But realizing this potential is not without its challenges. While there are many ways for organizations to miss the mark when it comes to machine learning and AI, one critical way is failing to anticipate how a model will be integrated into operational processes.

Edge Devices Are Essential for Shop Floor Predictions

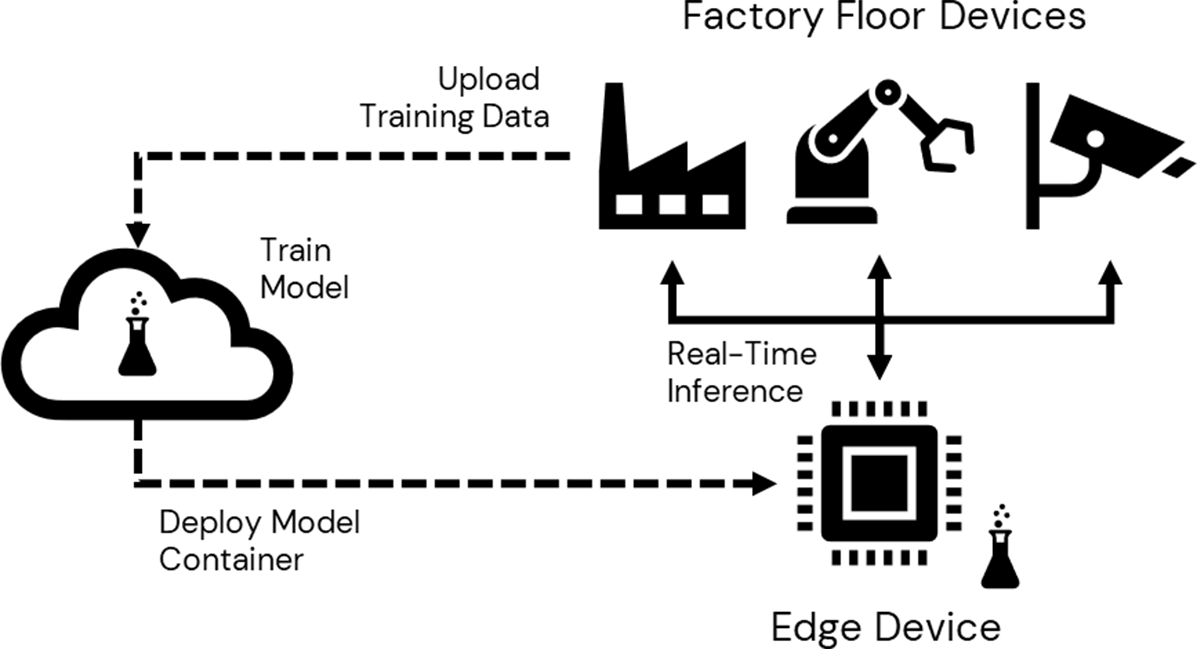

In manufacturing, many of the opportunities for immediately exploiting ML/AI are found in and around the shop floor. Signals from machines, devices and sensors are sent to models, which convert this information into predictions that help govern operations or trigger alerts. The ability of these models to steer these actions depends on reliable, secure and low-latency connectivity between the shop floor devices and the model, something that cannot always be guaranteed when the two are separated by large-scale networks.

But large-scale networks are exactly what is needed to collect the volumes of data required to train a model. During model training, algorithms are repeatedly exposed to the various values collected from these real or simulated devices so that they can home in on relevant patterns in the data. This is a computationally intensive and time-consuming process, one that is best managed in the cloud.

While training requires access to such resources, the resulting model is often surprisingly lightweight. Information learned from terabytes of data may be condensed into megabytes or even kilobytes of metadata within a trained model. This means that a typical model previously trained in the cloud can generate fast, accurate predictions with the support of a very modest computational device. This opens up the opportunity for us to deploy the model on or near the shop floor on a lightweight device. Such an edge deployment reduces our dependency on a large, high-speed network to support integration scenarios. (Figure 1)
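To make the size difference concrete, here is a minimal sketch using only the Python standard library. It fits a simple linear model to synthetic readings and compares the serialized size of the training data with that of the fitted model. The sensor names, the data-generating formula and the choice of a linear model are all assumptions made for illustration; real shop floor models will be larger, but the same asymmetry usually holds.

```python
import pickle
import random


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return {"slope": slope, "intercept": mean_y - slope * mean_x}


def predict(model, x):
    """Score a single reading with the fitted model."""
    return model["slope"] * x + model["intercept"]


# Synthetic stand-in for collected sensor data (hypothetical values):
# a temperature reading and a noisy quality metric that depends on it.
rng = random.Random(0)
temps = [rng.uniform(20.0, 90.0) for _ in range(100_000)]
quality = [0.5 * t + 3.0 + rng.gauss(0.0, 1.0) for t in temps]

model = fit_line(temps, quality)

# Compare what must be stored for training with what must be shipped to the edge.
training_bytes = len(pickle.dumps((temps, quality)))
model_bytes = len(pickle.dumps(model))
print(f"training data: {training_bytes:,} bytes")
print(f"trained model: {model_bytes:,} bytes")
```

Only the two fitted parameters need to travel to the edge device; the raw readings stay in the cloud.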

Databricks and Azure DevOps Enable Edge Device Deployment

Putting all the pieces together, we need an initial collection of data, pushed to the cloud, and a platform on which to train a model. As a unified, cloud-based data analytics platform, Databricks provides an environment in which a wide range of ML/AI models can be trained in a fast and scalable manner.

We then need to deploy the trained model to a container image. This container needs not only to host the model and all its dependencies but also to present the model in a manner that is accessible to a wide variety of external applications. Today, REST APIs are the gold standard for presenting applications in such a manner. And while the mechanics of defining a robust REST API can be a bit daunting, the good news is that Databricks, through its integration of the MLflow model management and deployment platform, allows you to do this with a single line of code.
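One way this packaging step can look is the MLflow command-line call `mlflow models build-docker`, which builds a Docker image that serves a model over REST. The sketch below only assembles and prints that command; the model URI and image name are hypothetical placeholders, and whether this is the exact call the Solution Accelerator uses is an assumption.

```python
import shlex


def build_docker_command(model_uri: str, image_name: str) -> list[str]:
    """Assemble the MLflow CLI call that packages a model as a Docker image."""
    return [
        "mlflow", "models", "build-docker",
        "--model-uri", model_uri,  # which logged or registered model to package
        "--name", image_name,      # name of the resulting Docker image
    ]


# Hypothetical values: substitute your own registered model and image name.
command = build_docker_command("models:/defect-detector/1", "defect-detector-edge")
print(shlex.join(command))
```

To run the build rather than print it, pass `command` to `subprocess.run(command, check=True)` on a machine where both MLflow and Docker are installed.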

With the container defined, it must now be deployed to an edge device. A typical device will provide a 64-bit processor, a few gigabytes of RAM, WiFi or Ethernet connectivity, and will run a lightweight operating system such as Linux that is capable of running a container image. Tools such as Azure DevOps, built to manage the deployment of containers to a wide range of devices, can then be used to push the model container from the cloud to the device and launch the local predictive service on it.
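Once the predictive service is running on the device, a shop floor application can request predictions over the local network. The sketch below assumes the container exposes an MLflow 2.x scoring server, which accepts JSON at an `/invocations` endpoint in the `dataframe_split` format. The host, port and feature names are hypothetical and depend on how the container is launched and what the model expects.

```python
import json
import urllib.request


def build_request(url, columns, rows):
    """Build a POST request in the MLflow 2.x `dataframe_split` input format."""
    payload = {"dataframe_split": {"columns": columns, "data": rows}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def score(url, columns, rows, timeout=2.0):
    """Send readings to the local scoring service and return the parsed reply."""
    request = build_request(url, columns, rows)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical endpoint and features: adjust to your port mapping and model schema.
SCORING_URL = "http://localhost:8080/invocations"
request = build_request(SCORING_URL, ["temperature", "vibration"], [[71.3, 0.02]])
print(request.get_method(), request.full_url)
```

Calling `score(SCORING_URL, ["temperature", "vibration"], [[71.3, 0.02]])` sends the request. The short timeout reflects the low-latency expectation of an edge deployment, and the caller should handle the case where the service is unreachable.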

To help our customers explore this process of model training, packaging and edge deployment, we have built a Solution Accelerator with pre-built resources that document in detail each step of the journey from Databricks to the edge. It is our hope that these assets will help demystify the edge deployment process, allowing organizations to focus on how predictive capabilities can improve shop floor operations, not on the mechanics of the deployment process.

Explore the Solution Accelerator here and contact us to discuss your edge deployments.