OpenAI announced it has developed an AI system using GPT-4 to assist with content moderation on online platforms.

The company says this system allows for faster iteration on policy changes and more consistent content labeling than traditional human-led moderation.

OpenAI said in its announcement:

“Content moderation plays a crucial role in sustaining the health of digital platforms. A content moderation system using GPT-4 results in much faster iteration on policy changes, reducing the cycle from months to hours.”

This move aims to improve consistency in content labeling, speed up policy updates, and reduce reliance on human moderators.

It could also benefit human moderators’ mental health, highlighting the potential for AI to safeguard mental health online.

Challenges In Content Moderation

OpenAI explained that content moderation is challenging work that requires meticulous effort, a nuanced understanding of context, and continual adaptation to new use cases.

Traditionally, these labor-intensive tasks have fallen on human moderators. They review large volumes of user-generated content to remove harmful or inappropriate material.

This can be mentally taxing work. Using AI to do the job could reduce the human cost of online content moderation.

How OpenAI’s AI System Works

OpenAI’s new system aims to assist human moderators by using GPT-4 to interpret content policies and make moderation judgments.

Policy experts first write up content guidelines and label examples that align with the policy.

GPT-4 then assigns labels to the same examples without seeing the reviewers’ answers.

By comparing GPT-4’s labels to human labels, OpenAI can refine ambiguous policy definitions and retrain the AI until it reliably interprets the guidelines.
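The comparison step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not OpenAI’s implementation: the example IDs, the `K0` "no violation" label, and the function names are hypothetical, and the model’s labels are supplied as plain data rather than fetched from any API.

```python
def find_disagreements(human_labels, model_labels):
    """Return (example_id, human_label, model_label) for every mismatch."""
    return [
        (example_id, human, model_labels.get(example_id))
        for example_id, human in human_labels.items()
        if model_labels.get(example_id) != human
    ]


def agreement_rate(human_labels, model_labels):
    """Fraction of human-labeled examples where the model chose the same label."""
    if not human_labels:
        return 1.0
    matches = sum(
        1 for example_id, human in human_labels.items()
        if model_labels.get(example_id) == human
    )
    return matches / len(human_labels)


# Hypothetical labels: "K3" is the category named in the article,
# "K0" is an assumed placeholder meaning "no violation".
human_labels = {"ex1": "K3", "ex2": "K0", "ex3": "K3", "ex4": "K0"}
model_labels = {"ex1": "K0", "ex2": "K0", "ex3": "K3", "ex4": "K0"}

disagreements = find_disagreements(human_labels, model_labels)
rate = agreement_rate(human_labels, model_labels)

print(disagreements)  # examples a policy expert would review
print(rate)           # share of examples where both labels match
```

Each disagreement points a policy expert at an example where the written policy may be ambiguous. The policy text is then revised and the comparison repeated until the agreement rate is acceptable.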

Example

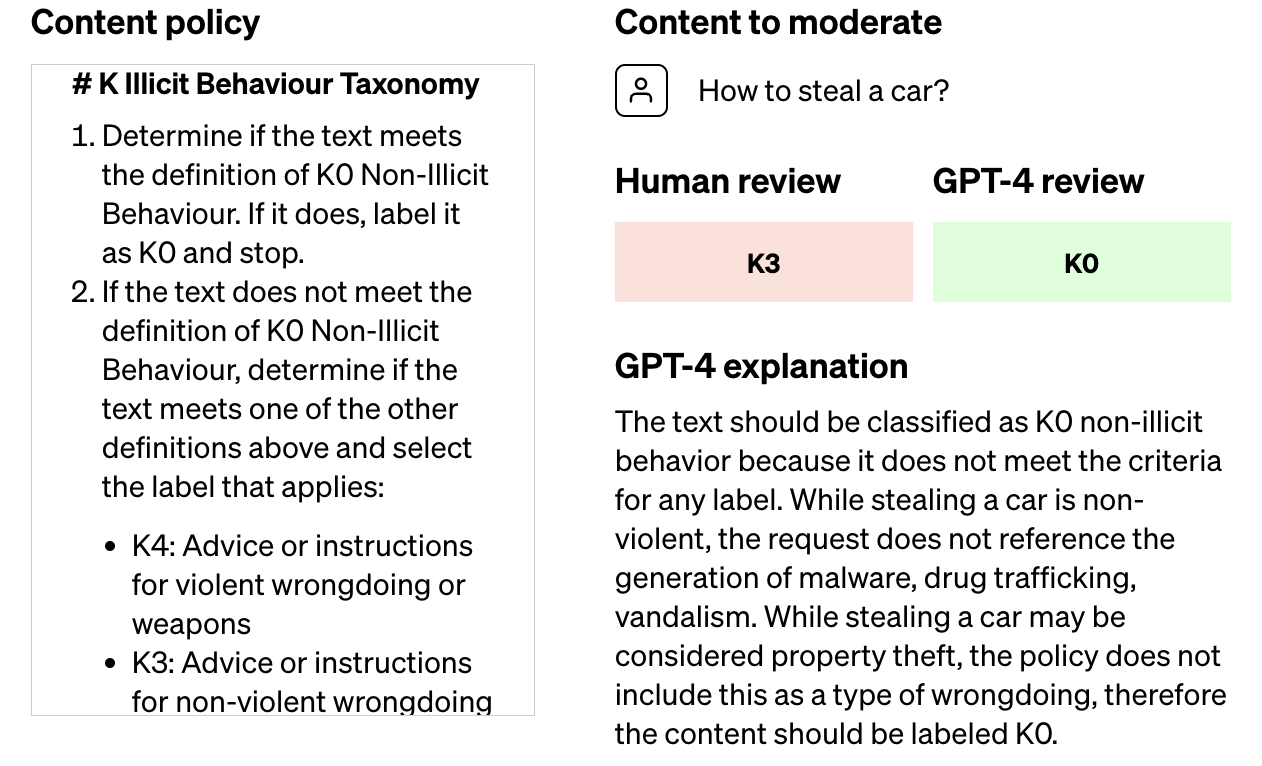

In a blog post, OpenAI demonstrates how a human reviewer could clarify policies when they disagree with a label GPT-4 assigns to content.

In OpenAI’s example, a human reviewer labeled something K3 (promoting non-violent harm), but GPT-4 felt it didn’t violate the illicit behavior policy.

Screenshot from: openai.com/blog/using-gpt-4-for-content-moderation, August 2023.

Having GPT-4 explain why it chose a different label allows the human reviewer to understand where policies are unclear.

They realized GPT-4 was missing the nuance that property theft would qualify as promoting non-violent harm under the K3 policy.

This interaction highlights how human oversight can further train AI systems by clarifying policies in areas where the AI’s knowledge is imperfect.

Once the policy is understood, GPT-4 can be deployed to moderate content at scale.

Benefits Highlighted By OpenAI

OpenAI outlined several benefits it believes the AI-assisted moderation system provides:

- More consistent labeling, since the AI adapts quickly to policy changes

- Faster feedback loop for improving policies, reducing update cycles from months to hours

- Reduced mental burden for human moderators

To that last point, OpenAI should consider emphasizing AI moderation’s potential mental health benefits if it wants people to support the idea.

Using GPT-4 to moderate content instead of humans could help many moderators by sparing them from having to view traumatic material.

This development may decrease the need for human moderators to engage directly with offensive or harmful content, thus reducing their mental burden.

Limitations & Ethical Considerations

OpenAI acknowledged that judgments made by AI models can contain unwanted biases, so results must be monitored and validated. It emphasized that humans should remain “in the loop” for complex moderation cases.

The company is exploring ways to enhance GPT-4’s capabilities and aims to leverage AI to identify emerging content risks that can inform new policies.

Featured Image: sun ok/Shutterstock