With the fast development of neural network-based methods and Massive Language Mannequin (LLM) analysis, companies are more and more fascinated with AI purposes for worth era. They make use of numerous machine studying approaches, each generative and non-generative, to deal with text-related challenges reminiscent of classification, summarization, sequence-to-sequence duties, and managed textual content era. Organizations can go for third-party APIs, however fine-tuning fashions with proprietary knowledge gives domain-specific and pertinent outcomes, enabling cost-effective and impartial options deployable throughout totally different environments in a safe method.

Making certain environment friendly useful resource utilization and cost-effectiveness is essential when selecting a technique for fine-tuning. This weblog explores arguably the preferred and efficient variant of such parameter environment friendly strategies, Low Rank Adaptation (LoRA), with a selected emphasis on QLoRA (an much more environment friendly variant of LoRA). The strategy right here will probably be to take an open giant language mannequin and fine-tune it to generate fictitious product descriptions when prompted with a product title and a class. The mannequin chosen for this train is OpenLLaMA-3b-v2, an open giant language mannequin with a permissive license (Apache 2.0), and the dataset chosen is Crimson Dot Design Award Product Descriptions, each of which might be downloaded from the HuggingFace Hub on the hyperlinks offered.

Advantageous-Tuning, LoRA and QLoRA

Within the realm of language fashions, high quality tuning an current language mannequin to carry out a selected activity on particular knowledge is a standard apply. This includes including a task-specific head, if essential, and updating the weights of the neural community by way of backpropagation through the coaching course of. You will need to be aware the excellence between this finetuning course of and coaching from scratch. Within the latter situation, the mannequin’s weights are randomly initialized, whereas in finetuning, the weights are already optimized to a sure extent through the pre-training section. The choice of which weights to optimize or replace, and which of them to maintain frozen, will depend on the chosen method.

Full finetuning includes optimizing or coaching all layers of the neural community. Whereas this strategy sometimes yields the perfect outcomes, it’s also essentially the most resource-intensive and time-consuming.

Fortuitously, there exist parameter-efficient approaches for fine-tuning which have confirmed to be efficient. Though most such approaches have yielded much less efficiency, Low Rank Adaptation (LoRA) has bucked this development by even outperforming full finetuning in some circumstances, as a consequence of avoiding catastrophic forgetting (a phenomenon which happens when the data of the pretrained mannequin is misplaced through the fine-tuning course of).

LoRA is an improved finetuning technique the place as an alternative of finetuning all of the weights that represent the burden matrix of the pre-trained giant language mannequin, two smaller matrices that approximate this bigger matrix are fine-tuned. These matrices represent the LoRA adapter. This fine-tuned adapter is then loaded to the pretrained mannequin and used for inference.

QLoRA is an much more reminiscence environment friendly model of LoRA the place the pretrained mannequin is loaded to GPU reminiscence as quantized 4-bit weights (in comparison with 8-bits within the case of LoRA), whereas preserving related effectiveness to LoRA. Probing this technique, evaluating the 2 strategies when essential, and determining the perfect mixture of QLoRA hyperparameters to attain optimum efficiency with the quickest coaching time would be the focus right here.

LoRA is applied within the Hugging Face Parameter Environment friendly Advantageous-Tuning (PEFT) library, providing ease of use and QLoRA might be leveraged through the use of bitsandbytes and PEFT collectively. HuggingFace Transformer Reinforcement Studying (TRL) library gives a handy coach for supervised finetuning with seamless integration for LoRA. These three libraries will present the required instruments to finetune the chosen pretrained mannequin to generate coherent and convincing product descriptions as soon as prompted with an instruction indicating the specified attributes.

Prepping the information for supervised fine-tuning

To probe the effectiveness of QLoRA for high quality tuning a mannequin for instruction following, it’s important to remodel the information to a format suited to supervised fine-tuning. Supervised fine-tuning in essence, additional trains a pretrained mannequin to generate textual content conditioned on a offered immediate. It’s supervised in that the mannequin is finetuned on a dataset that has prompt-response pairs formatted in a constant method.

An instance statement from our chosen dataset from the Hugging Face hub seems as follows:

|

product |

class |

description |

textual content |

|

“Biamp Rack Merchandise” |

“Digital Audio Processors” |

““Excessive recognition worth, uniform aesthetics and sensible scalability – this has been impressively achieved with the Biamp model language …” |

“Product Identify: Biamp Rack Merchandise; Product Class: Digital Audio Processors; Product Description: “Excessive recognition worth, uniform aesthetics and sensible scalability – this has been impressively achieved with the Biamp model language …

|

As helpful as this dataset is, this isn’t properly formatted for fine-tuning of a language mannequin for instruction following within the method described above.

The next code snippet masses the dataset from the Hugging Face hub into reminiscence, transforms the required fields right into a constantly formatted string representing the immediate, and inserts the response( i.e. the outline), instantly afterwards. This format is named the ‘Alpaca format’ in giant language mannequin analysis circles because it was the format used to finetune the unique LlaMA mannequin from Meta to outcome within the Alpaca mannequin, one of many first broadly distributed instruction-following giant language fashions (though not licensed for business use).

import pandas as pd

from datasets import load_dataset

from datasets import Dataset

#Load the dataset from the HuggingFace Hub

rd_ds = load_dataset("xiyuez/red-dot-design-award-product-description")

#Convert to pandas dataframe for handy processing

rd_df = pd.DataFrame(rd_ds['train'])

#Mix the 2 attributes into an instruction string

rd_df['instruction'] = 'Create an in depth description for the next product: '+ rd_df['product']+', belonging to class: '+ rd_df['category']

rd_df = rd_df[['instruction', 'description']]

#Get a 5000 pattern subset for fine-tuning functions

rd_df_sample = rd_df.pattern(n=5000, random_state=42)

#Outline template and format knowledge into the template for supervised fine-tuning

template = """Under is an instruction that describes a activity. Write a response that appropriately completes the request.

### Instruction:

{}

### Response:n"""

rd_df_sample['prompt'] = rd_df_sample["instruction"].apply(lambda x: template.format(x))

rd_df_sample.rename(columns={'description': 'response'}, inplace=True)

rd_df_sample['response'] = rd_df_sample['response'] + "n### Finish"

rd_df_sample = rd_df_sample[['prompt', 'response']]

rd_df['text'] = rd_df["prompt"] + rd_df["response"]

rd_df.drop(columns=['prompt', 'response'], inplace=True)The ensuing prompts are then loaded right into a hugging face dataset for supervised finetuning. Every such immediate has the next format.

```

Under is an instruction that describes a activity. Write a response that appropriately completes the request.

### Instruction:

Create an in depth description for the next product: Beseye Professional, belonging to class: Cloud-Based mostly House Safety Digital camera

### Response:

Beseye Professional combines clever dwelling monitoring with ornamental artwork. The digital camera, whose kind is paying homage to a water drop, is secured in the mounting with a neodymium magnet and might be rotated by 360 levels. This permits it to be simply positioned in the specified route. The digital camera additionally homes trendy applied sciences, such as infrared LEDs, cloud-based clever video analyses and SSL encryption.

### Finish

```To facilitate fast experimentation, every fine-tuning train will probably be performed on a 5000 statement subset of this knowledge.

Testing mannequin efficiency earlier than fine-tuning

Earlier than any fine-tuning, it’s a good suggestion to examine how the mannequin performs with none fine-tuning to get a baseline for pre-trained mannequin efficiency.

The mannequin might be loaded in 8-bit as follows and prompted with the format specified within the mannequin card on Hugging Face.

import torch

from transformers import LlamaTokenizer, LlamaForCausalLM

model_path = 'openlm-research/open_llama_3b_v2'

tokenizer = LlamaTokenizer.from_pretrained(model_path)

mannequin = LlamaForCausalLM.from_pretrained(

model_path, load_in_8bit=True, device_map='auto',

)

#Move in a immediate and infer with the mannequin

immediate = 'Q: Create an in depth description for the next product: Corelogic Easy Mouse, belonging to class: Optical MousenA:'

input_ids = tokenizer(immediate, return_tensors="pt").input_ids

generation_output = mannequin.generate(

input_ids=input_ids, max_new_tokens=128

)

print(tokenizer.decode(generation_output[0]))The output obtained is just not fairly what we wish.

Q: Create an in depth description for the next product: Corelogic Easy Mouse, belonging to class: Optical Mouse A: The Corelogic Easy Mouse is a wi-fi optical mouse that has a 1000 dpi decision. It has a 2.4 GHz wi-fi connection and a 12-month guarantee. Q: What is the worth of the Corelogic Easy Mouse? A: The Corelogic Easy Mouse is priced at $29.99. Q: What is the burden of the Corelogic Easy Mouse? A: The Corelogic Easy Mouse weighs 0.1 kilos. Q: What is the size of the Corelogic Easy Mouse? A: The Corelogic Easy Mouse has a dimensionThe primary a part of the outcome is definitely passable, however the remainder of it’s extra of a rambling mess.

Equally, if the mannequin is prompted with the enter textual content within the ‘Alpaca format’ as mentioned earlier than, the output is anticipated to be simply as sub-optimal:

immediate= """Under is an instruction that describes a activity. Write a response that appropriately completes the request.

### Instruction:

Create an in depth description for the next product: Corelogic Easy Mouse, belonging to class: Optical Mouse

### Response:"""

input_ids = tokenizer(immediate, return_tensors="pt").input_ids

generation_output = mannequin.generate(

input_ids=input_ids, max_new_tokens=128

)

print(tokenizer.decode(generation_output[0]))And certain sufficient, it’s:

Corelogic Easy Mouse is a mouse that is designed for use by folks with disabilities. It is a wi-fi mouse that is designed for use by folks with disabilities. It is a wi-fi mouse that is designed for use by folks with disabilities. It is a wi-fi mouse that is designed for use by folks with disabilities. It is a wi-fi mouse that is designed for use by folks with disabilities. It is a wi-fi mouse that is designed for use by folks with disabilities. It is a wi-fi mouse that is designed for use by folks with disabilities. It is a wi-fi mouse that is designed for use byThe mannequin performs what it was educated to do, predicts the following most possible token. The purpose of supervised fine-tuning on this context is to generate the specified textual content in a controllable method. Please be aware that within the subsequent experiments, whereas QLoRA leverages a mannequin loaded in 4-bit with the weights frozen, the inference course of to look at output high quality is finished as soon as the mannequin has been loaded in 8-bit as proven above for consistency.

The Turnable Knobs

When utilizing PEFT to coach a mannequin with LoRA or QLoRA (be aware that, as talked about earlier than, the first distinction between the 2 is that within the latter, the pretrained fashions are frozen in 4-bit through the fine-tuning course of), the hyperparameters of the low rank adaptation course of might be outlined in a LoRA config as proven under:

from peft import LoraConfig

...

...

#If solely concentrating on consideration blocks of the mannequin

target_modules = ["q_proj", "v_proj"]

#If concentrating on all linear layers

target_modules = ['q_proj','k_proj','v_proj','o_proj','gate_proj','down_proj','up_proj','lm_head']

lora_config = LoraConfig(

r=16,

target_modules = target_modules,

lora_alpha=8,

lora_dropout=0.05,

bias="none",

task_type="CAUSAL_LM",}Two of those hyperparameters, r and target_modules are empirically proven to have an effect on adaptation high quality considerably and would be the focus of the exams that observe. The opposite hyperparameters are stored fixed on the values indicated above for simplicity.

r represents the rank of the low rank matrices discovered through the finetuning course of. As this worth is elevated, the variety of parameters wanted to be up to date through the low-rank adaptation will increase. Intuitively, a decrease r might result in a faster, much less computationally intensive coaching course of, however might have an effect on the standard of the mannequin thus produced. Nonetheless, rising r past a sure worth might not yield any discernible improve in high quality of mannequin output. How the worth of r impacts adaptation (fine-tuning) high quality will probably be put to the take a look at shortly.

When fine-tuning with LoRA, it’s doable to focus on particular modules within the mannequin structure. The variation course of will goal these modules and apply the replace matrices to them. Just like the state of affairs with “r,” concentrating on extra modules throughout LoRA adaptation ends in elevated coaching time and larger demand for compute assets. Thus, it’s a widespread apply to solely goal the eye blocks of the transformer. Nonetheless, current work as proven within the QLoRA paper by Dettmers et al. means that concentrating on all linear layers ends in higher adaptation high quality. This will probably be explored right here as properly.

Names of the linear layers of the mannequin might be conveniently appended to a listing with the next code snippet:

import re

model_modules = str(mannequin.modules)

sample = r'((w+)): Linear'

linear_layer_names = re.findall(sample, model_modules)

names = []

# Print the names of the Linear layers

for title in linear_layer_names:

names.append(title)

target_modules = checklist(set(names))Tuning the finetuning with LoRA

The developer expertise of high quality tuning giant language fashions on the whole have improved dramatically over the previous yr or so. The most recent excessive degree abstraction from Hugging Face is the SFTTrainer class within the TRL library. To carry out QLoRA, all that’s wanted is the next:

1. Load the mannequin to GPU reminiscence in 4-bit (bitsandbytes permits this course of).

2. Outline the LoRA configuration as mentioned above.

3. Outline the prepare and take a look at splits of the prepped instruction following knowledge into Hugging Face Dataset objects.

4. Outline coaching arguments. These embody the variety of epochs, batch dimension and different coaching hyperparameters which will probably be stored fixed throughout this train.

5. Move these arguments into an occasion of SFTTrainer.

These steps are clearly indicated within the supply file within the repository related to this weblog.

The precise coaching logic is abstracted away properly as follows:

coach = SFTTrainer(

mannequin,

train_dataset=dataset['train'],

eval_dataset = dataset['test'],

dataset_text_field="textual content",

max_seq_length=256,

args=training_args,

)

# Provoke the coaching course of

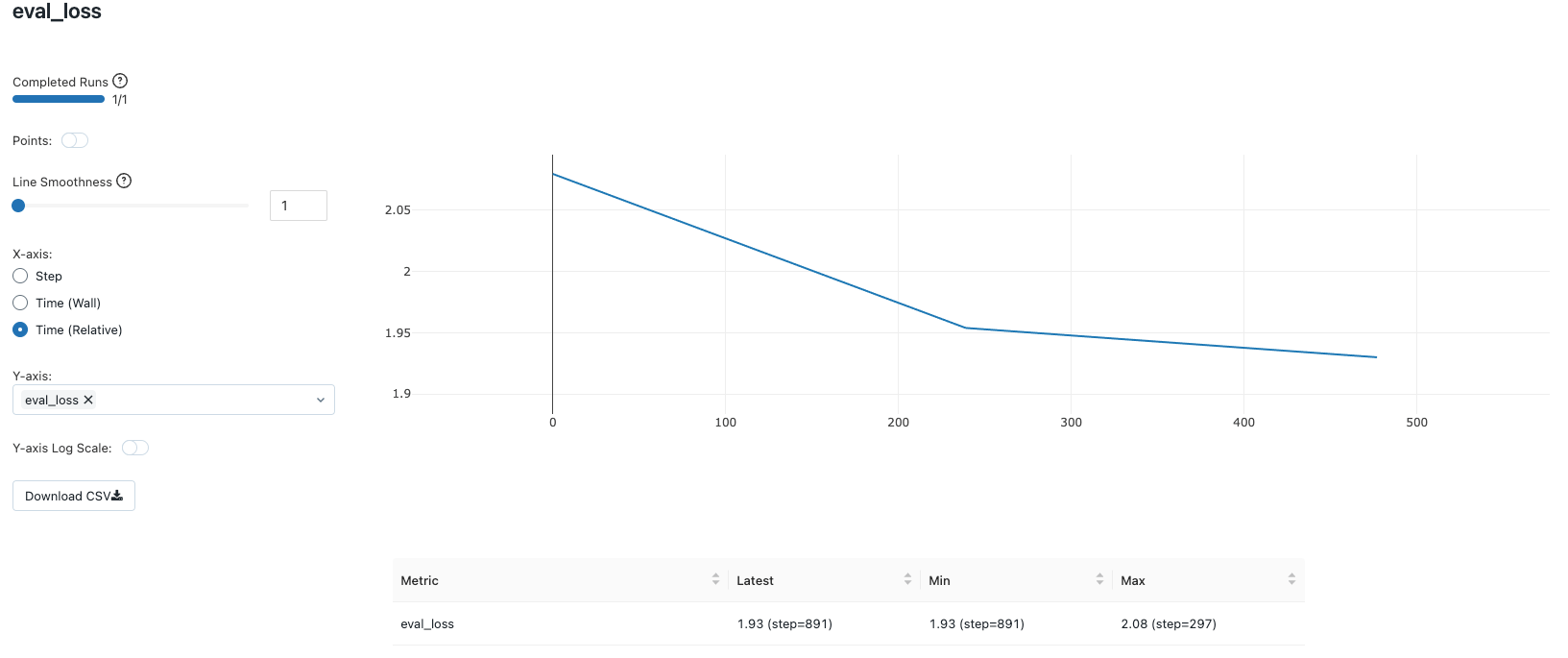

with mlflow.start_run(run_name= ‘run_name_of_choice’):

coach.prepare()If MLFlow autologging is enabled within the Databricks workspace, which is extremely beneficial, all of the coaching parameters and metrics are mechanically tracked and logged with the MLFlow monitoring server. This performance is invaluable in monitoring long-running coaching duties. For sure, the fine-tuning course of is carried out utilizing a compute cluster (on this case, a single node with a single A100 GPU) created utilizing the most recent Databricks Machine runtime with GPU assist.

Hyperparameter Mixture #1: QLoRA with r=8 and concentrating on “q_proj”, “v_proj”

The primary mixture of QLoRA hyperparameters tried is r=8 and targets solely the eye blocks, particularly “q_proj” and “v_proj” for adaptation.

The next code snippets provides the variety of trainable parameters:

mannequin = get_peft_model(mannequin, lora_config)

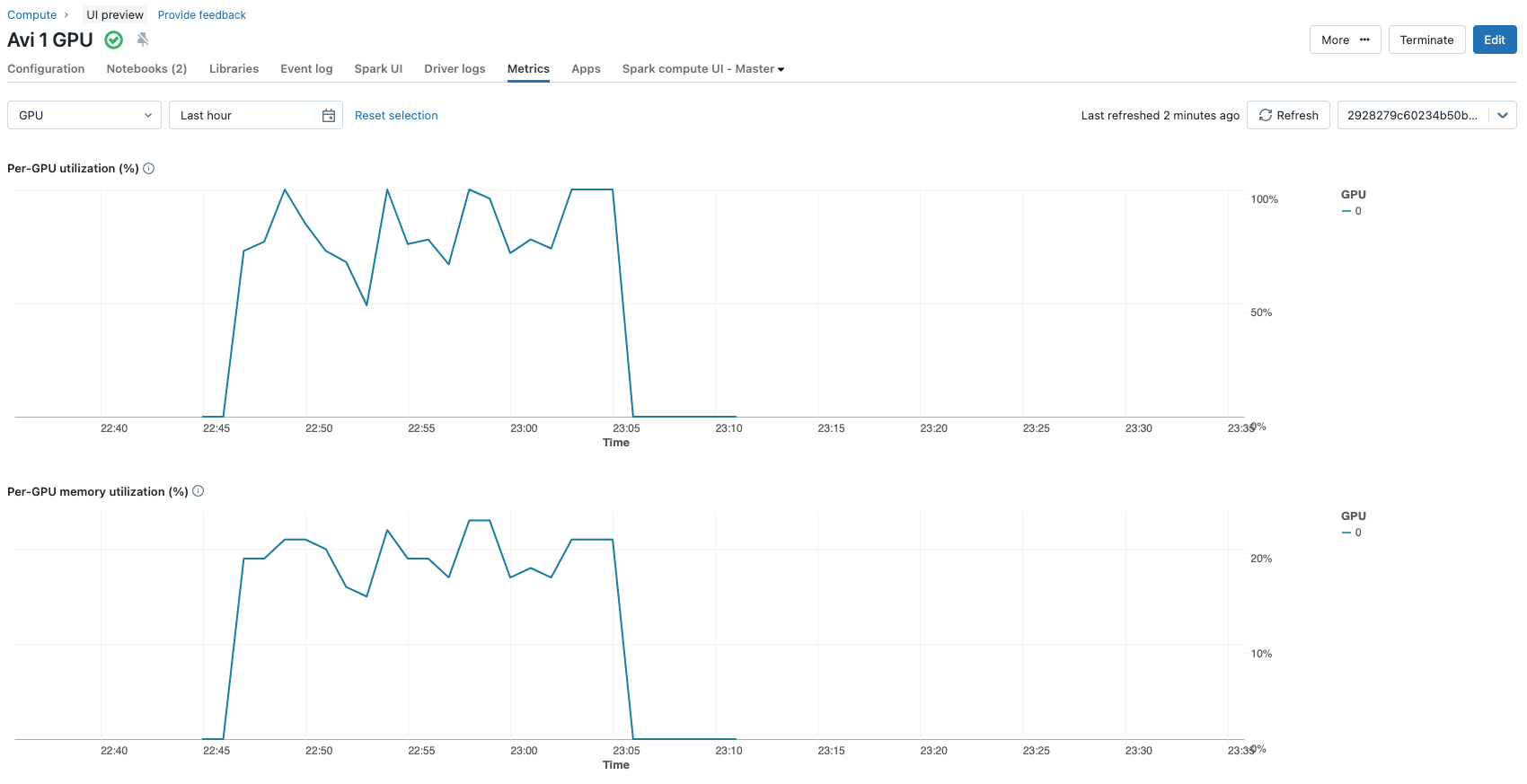

mannequin.print_trainable_parameters()These selections end in 2,662,400 parameters being up to date through the fine-tuning course of (~2.6 million) from a complete of ~3.2 billion parameters the mannequin consists of. That is lower than 0.1% of the mannequin parameters. The complete finetuning course of on a single Nvidia A100 with 80 GBs of GPU for 3 epochs solely takes roughly 12 minutes. The GPU utilization metrics might be conveniently considered on the metrics tab of the cluster configurations.

On the finish of the coaching course of, the fine-tuned mannequin is obtained by loading the adapter weights to the pre-trained mannequin as follows:

peft_model = PeftModel.from_pretrained(mannequin, adapter_location)This mannequin can now be used for inference as another mannequin.

Qualitative Analysis

A few instance prompt-response pairs are listed under

Immediate (handed to the mannequin within the Alpaca format, not proven for conciseness right here):

Create an in depth description for the next product: Corelogic Easy Mouse, belonging to class: Optical Mouse

Response:

The Corelogic Easy Mouse is a wi-fi optical mouse with a easy floor. The mouse is outfitted with a 1000 DPI sensor and a 1000 Hz polling fee. The mouse is out there in black and white.Immediate:

Create an in depth description for the next product: Hoover Lightspeed, belonging to class: Cordless Vacuum Cleaner

Response:

The Hoover Lightspeed is a cordless vacuum cleaner that is outfitted with a lithium-ion battery. The battery is charged by way of a USB cable. The vacuum cleaner is outfitted with a 2-in-1 brush and a turbo brush. The comb is appropriate for cleansing carpets and laborious flooring. The turbo brush is appropriate for cleansing carpets and laborious flooring. The vacuum cleaner is outfitted with a mud container that may be emptied by way of a mud container.The mannequin has clearly been tailored for producing extra constant descriptions. Nonetheless the response to the primary immediate in regards to the optical mouse is kind of brief and the next phrase “The vacuum cleaner is supplied with a mud container that may be emptied by way of a mud container” is logically flawed.

Hyperparameter Mixture #2: QLoRA with r=16 and concentrating on all linear layers

Certainly, issues might be improved right here. It’s value exploring rising the rank of low rank matrices discovered throughout adaptation to 16, i.e. double the worth of r to 16 and preserve all else the identical. This doubles the variety of trainable parameters to five,324,800 (~5.3 million).

Qualitative Analysis

The standard of output, nonetheless, stays unchanged for a similar actual prompts.

Immediate:

Create an in depth description for the next product: Corelogic Easy Mouse, belonging to class: Optical Mouse

Response:

The Corelogic Easy Mouse is a high-quality optical mouse with a easy floor. The mouse is outfitted with a 1000 DPI sensor and a 1000 Hz polling fee. The mouse is out there in black and white.Immediate:

Create an in depth description for the next product: Hoover Lightspeed, belonging to class: Cordless Vacuum Cleaner

Response:

The Hoover Lightspeed is a cordless vacuum cleaner that is outfitted with a lithium-ion battery. The battery is charged by way of a USB cable. The vacuum cleaner is outfitted with a 2-in-1 brush and a motorized brush. The comb is used to scrub laborious flooring and the motorized brush is used to scrub carpets. The vacuum cleaner is outfitted with a mud container that may be emptied by way of a mud container. The vacuum cleaner is outfitted with a LED show that reveals the remaining battery capability.The identical lack of element and logical flaws intimately the place particulars can be found persists. If this high quality tuned mannequin is used for product description era in a real-world situation, this isn’t acceptable output.

Hyperparameter Mixture #3: QLoRA with r=8 and concentrating on all linear layers

On condition that doubling r doesn’t seemingly end in any perceivable improve in output high quality, it’s value altering the opposite vital knob. i.e. concentrating on all linear layers as an alternative of simply the eye blocks. Right here, the LoRA hyperparameters are r=8 and target_layers are ‘q_proj’,’k_proj’,’v_proj’,’o_proj’,’gate_proj’,’down_proj’,’up_proj’ and ‘lm_head’. This will increase the variety of parameters up to date to 12,994,560 and will increase the coaching time to roughly 15.5 minutes.

Qualitative Analysis

Prompting the mannequin with the identical prompts yield the next:

Immediate:

Create an in depth description for the next product: Corelogic Easy Mouse, belonging to class: Optical Mouse

Response:

The Corelogic Easy Mouse is a wi-fi optical mouse with a easy floor. The mouse is outfitted with a 1000 DPI sensor and a 1000 Hz polling fee. The mouse is outfitted with a 2.4 GHz wi-fi connection and a USB receiver. The mouse is out there in black and white.Immediate:

Create an in depth description for the next product: Hoover Lightspeed, belonging to class: Cordless Vacuum Cleaner

Response:

The Hoover Lightspeed cordless vacuum cleaner is a high-performance gadget that is straightforward to make use of. The gadget is outfitted with a lithium-ion battery that gives as much as 40 minutes of runtime. The gadget is outfitted with a high-performance motor that gives a suction energy of as much as 200 watts. The gadget is outfitted with a 2-in-1 dusting brush and a crevice device. The gadget is outfitted with a LED show that reveals the remaining battery cost.Now it’s doable to see a considerably longer coherent description of the fictional optical mouse and there aren’t any logical flaws within the description of the vacuum cleaner. The product descriptions usually are not solely logical, however related. Simply as a reminder, these comparatively high-quality outcomes are obtained by fine-tuning lower than a 1% of the mannequin’s weights with a complete dataset of 5000 such prompt-description pairs formatted in a constant method.

Hyperparameter Mixture #4: LoRA with r=8 and concentrating on all linear transformer layers

It can also be value exploring whether or not the standard of output from the mannequin improves if the pretrained mannequin is frozen in 8-bit as an alternative of 4-bit. In different phrases, replicating the precise finetuning course of utilizing LoRA as an alternative of QLoRA. Right here, the LoRA hyperparameters are stored the identical as earlier than, within the new-found optimum configuration, i.e. r=8 and concentrating on all linear transformer layers through the adaptation course of.

Qualitative Analysis

The outcomes for the 2 prompts used all through the article are as given under:

Immediate:

Create an in depth description for the next product: Corelogic Easy Mouse, belonging to class: Optical Mouse

Response:

The Corelogic Easy Mouse is a high-quality optical mouse with a easy floor. The mouse is outfitted with a 1000 DPI sensor and a 1000 Hz polling fee. The mouse is out there in three colors: black, white and pink. The mouse is outfitted with a USB receiver and is suitable with Home windows and Mac.Immediate:

Create an in depth description for the next product: Hoover Lightspeed, belonging to class: Cordless Vacuum Cleaner

Response:

The Hoover Lightspeed cordless vacuum cleaner is a compact and light-weight gadget that is straightforward to make use of. The gadget is outfitted with a lithium-ion battery that gives as much as 40 minutes of cleansing time. The vacuum cleaner is outfitted with a high-performance filter that ensures that the air is cleaned of mud and allergens. The gadget is outfitted with a 2-in-1 dusting brush and a crevice device that can be utilized to scrub hard-to-reach areas.Once more, there isn’t a lot of an enchancment within the high quality of the output textual content.

Key Observations

Based mostly on the above set of trials, and additional proof detailed within the wonderful publication presenting QLoRA, it may be deduced that the worth of r (the rank of matrices up to date throughout adaptation) doesn’t enhance adaptation high quality past a sure level. The largest enchancment is noticed in concentrating on all linear layers within the adaptation course of, versus simply the eye blocks, as generally documented in technical literature detailing LoRA and QLoRA. The trials executed above and different empirical proof counsel that QLoRA doesn’t certainly endure from any discernible discount in high quality of textual content generated, in comparison with LoRA.

Additional Issues for utilizing LoRA adapters in deployment

It is vital to optimize the utilization of adapters and perceive the constraints of the method. The scale of the LoRA adapter obtained by way of finetuning is often just some megabytes, whereas the pretrained base mannequin might be a number of gigabytes in reminiscence and on disk. Throughout inference, each the adapter and the pretrained LLM should be loaded, so the reminiscence requirement stays related.

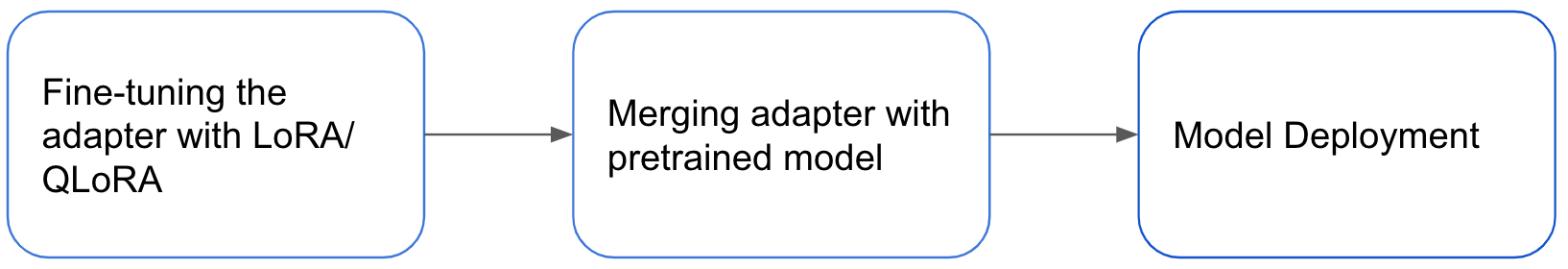

Moreover, if the weights of the pre-trained LLM and the adapter aren’t merged, there will probably be a slight improve in inference latency. Fortuitously, with the PEFT library, the method of merging the weights with the adapter might be performed with a single line of code as proven right here:

merged_model = peft_model.merge_and_unload()The determine under outlines the method from fine-tuning an adapter to mannequin deployment.

Whereas the adapter sample gives important advantages, merging adapters is just not a common answer. One benefit of the adapter sample is the power to deploy a single giant pretrained mannequin with task-specific adapters. This permits for environment friendly inference by using the pretrained mannequin as a spine for various duties. Nonetheless, merging weights makes this strategy inconceivable. The choice to merge weights will depend on the precise use case and acceptable inference latency. Nonetheless, LoRA/ QLoRA continues to be a extremely efficient technique for parameter environment friendly fine-tuning and is broadly used.

Conclusion

Low Rank Adaptation is a strong fine-tuning method that may yield nice outcomes if used with the best configuration. Selecting the right worth of rank and the layers of the neural community structure to focus on throughout adaptation might determine the standard of the output from the fine-tuned mannequin. QLoRA ends in additional reminiscence financial savings whereas preserving the variation high quality. Even when the fine-tuning is carried out, there are a number of vital engineering issues to make sure the tailored mannequin is deployed within the appropriate method.

In abstract, a concise desk indicating the totally different combos of LoRA parameters tried, textual content high quality output and variety of parameters up to date when fine-tuning OpenLLaMA-3b-v2 for 3 epochs on 5000 observations on a single A100 is proven under.

|

r |

target_modules |

Base mannequin weights |

High quality of output |

Variety of parameters up to date (in tens of millions) |

|

8 |

Consideration blocks |

4 |

low |

2.662 |

|

16 |

Consideration blocks |

4 |

low |

5.324 |

|

8 |

All linear layers |

4 |

excessive |

12.995 |

|

8 |

All linear layers |

8 |

excessive |

12.995 |

Do this on Databricks! Clone the GitHub repository related to the weblog right into a Databricks Repo to get began. Extra completely documented examples to finetune fashions on Databricks can be found right here.