(Maxim Apryatin/Shutterstock)

Companies are flocking to GenAI technologies to help automate business functions, such as reading and writing emails, generating Java and SQL code, and executing marketing campaigns. At the same time, cybercriminals are also finding tools like WormGPT and FraudGPT useful for automating nefarious deeds, such as writing malware, distributing ransomware, and automating the exploitation of computer vulnerabilities around the Internet. With the pending release of API access to a language model dubbed DarkBERT into the criminal underground, the GenAI capabilities available to cybercriminals could increase significantly.

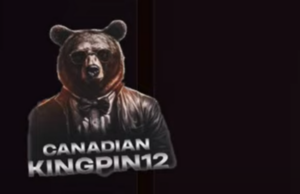

On July 13, researchers with SlashNext reported the emergence of WormGPT, an AI-powered tool that’s being actively used by cybercriminals. About two weeks later, the company let the world know about another digital creation from the criminal underground, dubbed FraudGPT. FraudGPT is being promoted by its creator, who goes by the name “CanadianKingpin12,” as an “exclusive bot” designed for fraudsters, hackers, and spammers, SlashNext says in a blog post this week.

FraudGPT is replete with a number of advanced GenAI capabilities, according to an ad posted on a cybercrime forum discovered by SlashNext, including the ability to:

- Write malicious code;

- Create undetectable malware;

- Create phishing pages;

- Create hacking tools;

- Write scam pages / letters;

- Find leaks and vulnerabilities;

- Find “cardable” sites;

- “And much more | sky is the limit.”

Image from a video produced by cybercriminals and shared by SlashNext

When SlashNext contacted the malware’s author, the author insisted that FraudGPT was superior to WormGPT, a comparison that was SlashNext’s main goal in the conversation. The malware author then went on to say that he or she had two more malicious GenAI products in development, DarkBART and DarkBERT, and that they’d be integrated with Google Lens, which gives the tools the capability to send text accompanied by images.

This perked up the ears of the security researchers at SlashNext, a Pleasanton, California company that provides protection against phishing and human hacking. DarkBERT is a large language model (LLM) created by a South Korean security research firm and trained on a large corpus of data culled from the Dark Web to fight cybercrime. It has not been publicly released, but CanadianKingpin12 claimed to have access to it (although it was not clear whether they actually did).

DarkBERT could potentially provide cybercriminals with a leg up in their malicious schemes. In his blog post, SlashNext’s Daniel Kelley, who identifies as “a reformed black hat computer hacker,” shares some of the potential ways that CanadianKingpin12 envisions the tool being used. They include:

- “Assisting in executing advanced social engineering attacks to manipulate individuals;”

- “Exploiting vulnerabilities in computer systems, including critical infrastructure;”

- “Enabling the creation and distribution of malware, including ransomware;”

- “The development of sophisticated phishing campaigns for stealing personal information;” and

- “Providing information on zero-day vulnerabilities to end-users.”

“While it’s difficult to accurately gauge the true impact of these capabilities, it’s reasonable to expect that they will lower the barriers for aspiring cybercriminals,” Kelley writes. “Moreover, the rapid progression from WormGPT to FraudGPT and now ‘DarkBERT’ in under a month, underscores the significant influence of malicious AI on the cybersecurity and cybercrime landscape.”

What’s more, just as OpenAI has enabled thousands of companies to leverage powerful GenAI capabilities through the power of APIs, so too will the cybercriminal underground leverage APIs.

“This advancement will greatly simplify the process of integrating these tools into cybercriminals’ workflows and code,” Kelley writes. “Such progress raises significant concerns about potential consequences, as the use cases for this type of technology will likely become increasingly intricate.”

The GenAI criminal activity recently caught the eye of Cybersixgill, an Israeli security firm. According to Delilah Schwartz, who works in threat intel at Cybersixgill, all three products are being advertised for sale.

“Cybersixgill observed threat actors advertising FraudGPT and DarkBARD on cybercrime forums and Telegram, in addition to chatter about the tools,” Schwartz says. “Malicious versions of deep language learning models are currently a hot commodity on the underground, producing malicious code, creating phishing content, and facilitating other illegal activities. While threat actors abuse legitimate artificial intelligence (AI) platforms with workarounds that evade safety restrictions, malicious AI tools go a step further and are specifically designed to facilitate criminal activities.”

The company has noted ads promoting FraudGPT, FraudBot, and DarkBARD as “Swiss Army Knife hacking tools.”

“One ad explicitly stated the tools are designed for ‘fraudsters, hackers, spammers, [and] like-minded individuals,’” Schwartz says. “If the tools perform as advertised, they would certainly enhance a variety of attack chains. With that being said, there appears to be a dearth of actual reviews from users championing the products’ capabilities, despite the abundance of advertisements.”
