The Internet is an ever-evolving virtual universe with over 1.1 billion websites.

Do you think that Google can crawl every website in the world?

Even with all the resources, money, and data centers that Google has, it cannot crawl the entire web, nor does it want to.

What Is Crawl Budget, And Is It Important?

Crawl budget refers to the amount of time and resources that Googlebot spends on crawling the web pages of a site.

It is important to optimize your site so that Google finds and indexes your content faster, which can help your site get better visibility and traffic.

If you have a large site with millions of web pages, it is particularly important to manage your crawl budget to help Google crawl your most important pages and get a better understanding of your content.

Google states that:

If your site does not have a large number of pages that change rapidly, or if your pages seem to be crawled the same day that they are published, keeping your sitemap up to date and checking your index coverage regularly is enough. Google also states that, after a page has been crawled, it must be reviewed, consolidated, and assessed to determine whether it will be indexed.

Crawl budget is determined by two main elements: crawl capacity limit and crawl demand.

Crawl demand is how much Google wants to crawl on your website. More popular pages (e.g., a popular story from CNN) and pages that undergo significant changes will be crawled more.

Googlebot wants to crawl your site without overwhelming your servers. To prevent this, Googlebot calculates a crawl capacity limit, which is the maximum number of simultaneous parallel connections that Googlebot can use to crawl a site, as well as the time delay between fetches.

Taking crawl capacity and crawl demand together, Google defines a site's crawl budget as the set of URLs that Googlebot can and wants to crawl. Even if the crawl capacity limit is not reached, if crawl demand is low, Googlebot will crawl your site less.

Here are the top 12 tips to manage crawl budget for medium to large sites with 10k to millions of URLs.

1. Determine What Pages Are Important And What Should Not Be Crawled

Determine which pages are important and which pages are not that important to crawl (and which Google therefore visits less frequently).

Once you have determined that through analysis, you can see which pages of your site are worth crawling and which are not, and exclude the latter from being crawled.

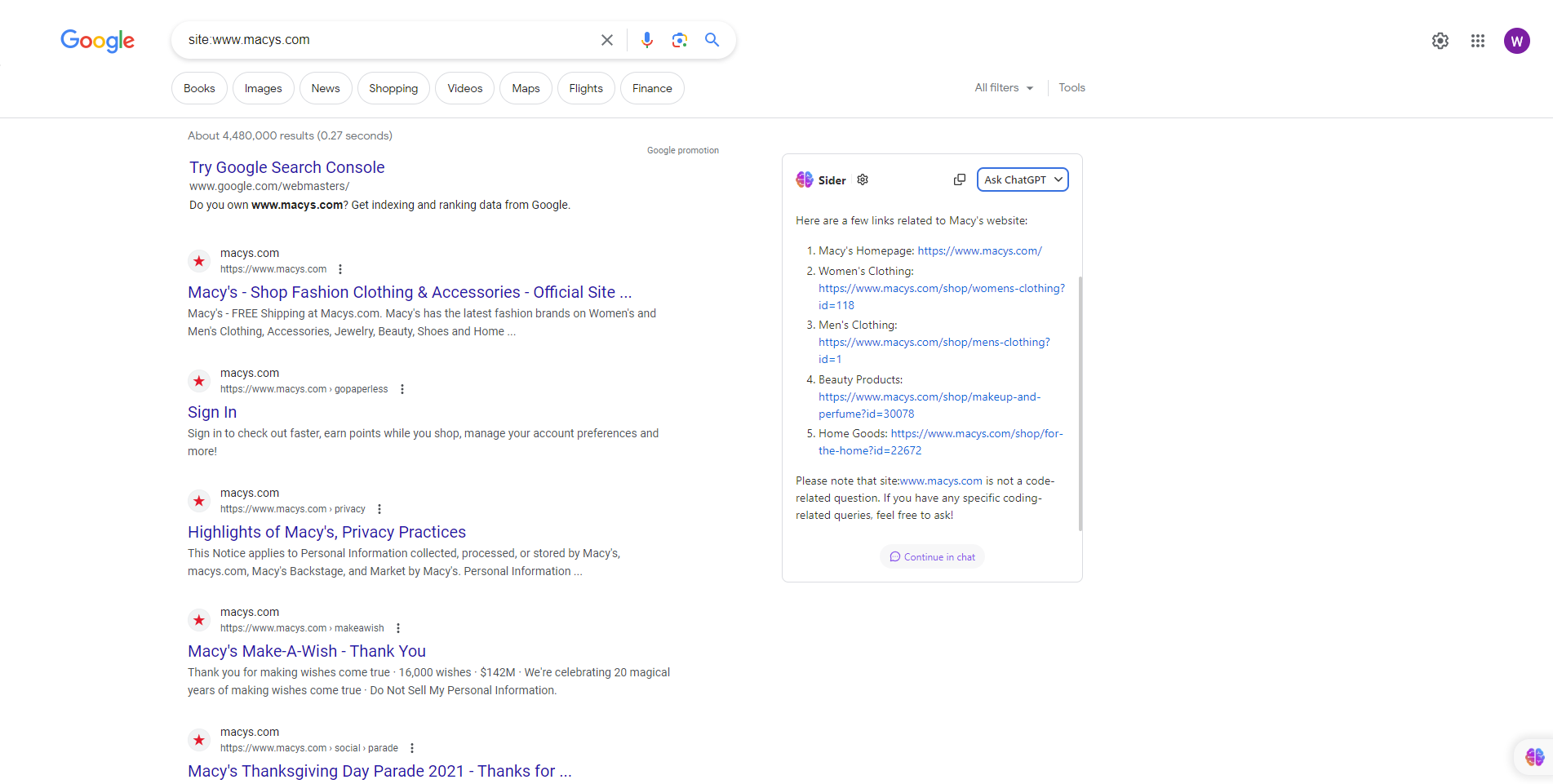

For example, Macys.com has over 2 million pages that are indexed.

Screenshot from seek for [site: macys.com], Google, June 2023

It manages its crawl budget by telling Google not to crawl certain pages on the site: it restricts Googlebot from crawling certain URLs in its robots.txt file.

If much of what Googlebot finds on your site is not worth crawling, it may decide it is not worth its time to look at the rest of your site or to increase your crawl budget. Make sure that faceted navigation and session identifiers are blocked via robots.txt.

2. Manage Duplicate Content

While Google does not issue a penalty for having duplicate content, you want to provide Googlebot with original and unique information that satisfies the end user's information needs and is relevant and useful. Make sure that you are using the robots.txt file.

Google advises against using noindex for this purpose, because Googlebot will still request the page and only then drop it.

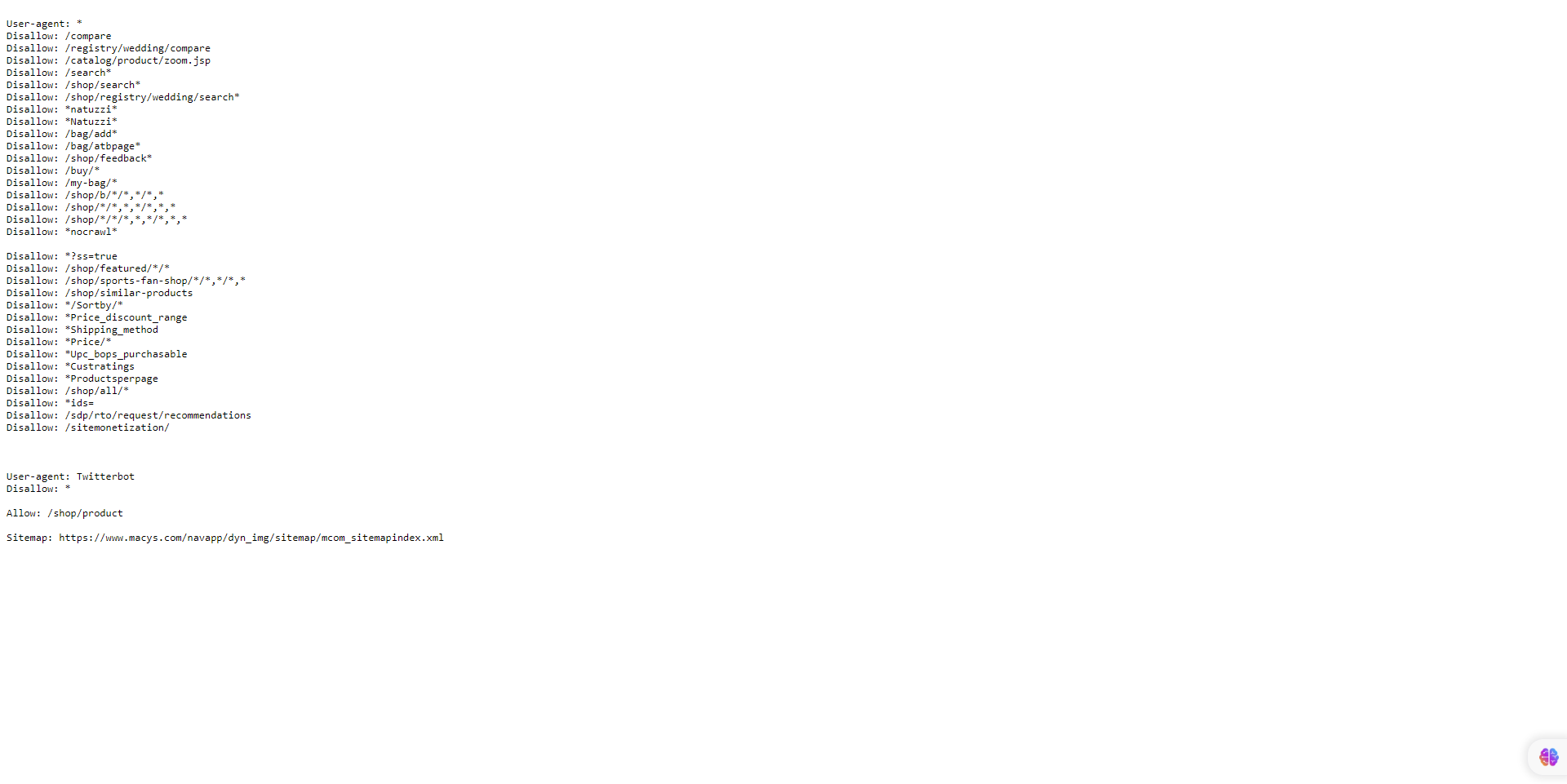

3. Block Crawling Of Unimportant URLs Using Robots.txt And Tell Google What Pages It Can Crawl

For an enterprise-level site with millions of pages, Google recommends blocking the crawling of unimportant URLs using robots.txt.

Also, you want to ensure that your important pages, the directories that hold your golden content, and your money pages are allowed to be crawled by Googlebot and other search engines.

Screenshot from author, June 2023
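As a rough way to sanity-check which URLs a set of robots.txt rules would block, the sketch below uses Python's standard `urllib.robotparser`. The rules and the example.com URLs are hypothetical and chosen only for illustration. Note that Python's parser applies the first matching rule and does not understand wildcards, so it only approximates how Googlebot interprets a robots.txt file; patterns for session identifiers in query strings usually need wildcards and cannot be tested this way.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only; not taken from any real site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
Allow: /
"""


def crawlable(urls, robots_txt=ROBOTS_TXT, agent="Googlebot"):
    """Return {url: True/False} according to the given robots.txt text."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}


if __name__ == "__main__":
    sample = [
        "https://example.com/search?q=shoes",  # internal search results
        "https://example.com/cart/view",       # cart pages
        "https://example.com/shop/dresses",    # a page that should stay crawlable
    ]
    for url, allowed in crawlable(sample).items():
        print("allowed" if allowed else "blocked", url)
```

Running a check like this against a list of your important URLs before deploying a robots.txt change can help catch a rule that blocks more than intended.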

4. Long Redirect Chains

Keep the number of redirects as small as you can. Having too many redirects or redirect loops can confuse Google and reduce your crawl limit.

Google states that long redirect chains can have a negative effect on crawling.
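One way to find long chains and loops is to walk a redirect map exported from a site crawl. The sketch below assumes such a map is available as a plain dictionary of source path to target path; the function name, the `max_hops` cutoff, and the sample paths are all hypothetical.

```python
def redirect_chain(start, redirects, max_hops=10):
    """Follow `start` through the `redirects` mapping.

    Returns (chain, is_loop), where chain lists every URL visited in order.
    """
    chain = [start]
    seen = {start}
    current = start
    # Stop when the URL no longer redirects or the hop limit is reached.
    while current in redirects and len(chain) <= max_hops:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, True  # we came back to a URL already visited
        seen.add(current)
    return chain, False


if __name__ == "__main__":
    # Hypothetical redirect map: source path -> target path.
    redirects = {
        "/old": "/older",
        "/older": "/new",
        "/x": "/y",
        "/y": "/x",
    }
    for url in ("/old", "/x", "/new"):
        chain, is_loop = redirect_chain(url, redirects)
        hops = len(chain) - 1
        print(url, "->", " -> ".join(chain[1:]) or "(no redirect)", f"[{hops} hops, loop={is_loop}]")
```

Any chain with more than one hop is a candidate for pointing the first URL directly at the final destination.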

5. Use HTML

Using HTML increases the odds that a crawler from any search engine can process your website.

While Googlebot has improved when it comes to crawling and indexing JavaScript, other search engine crawlers are not as sophisticated and may have issues with anything other than HTML.

6. Make Sure Your Web Pages Load Quickly And Offer A Good User Experience

Make sure your site is optimized for Core Web Vitals.

The quicker your content loads (ideally in under three seconds), the quicker Google can provide information to end users. If users like it, Google will keep indexing your content because your site will demonstrate good crawl health, which can increase your crawl limit.

7. Have Useful Content

According to Google, content is rated by quality, regardless of age. Create and update your content as necessary, but there is no additional value in making pages artificially appear fresh by making trivial changes and updating the page date.

If your content satisfies the needs of end users, i.e., it is helpful and relevant, whether it is old or new does not matter.

If users do not find your content helpful and relevant, then I recommend that you update and refresh it to be fresh, relevant, and useful, and promote it via social media.

Also, link your important pages directly from the home page; pages linked there may be seen as more important and crawled more often.

8. Watch Out For Crawl Errors

If you have deleted some pages on your site, ensure the URL returns a 404 or 410 status for permanently removed pages. A 404 status code is a strong signal not to crawl that URL again.

Blocked URLs, however, will stay part of your crawl queue much longer and will be recrawled when the block is removed.

Also, Google recommends removing any soft 404 pages, which will continue to be crawled and waste your crawl budget. To test this, go into Google Search Console (GSC) and review your Index Coverage report for soft 404 errors.

If your site returns many 5xx HTTP response status codes (server errors) or connection timeouts, crawling slows down. Google recommends paying attention to the Crawl Stats report in Search Console and keeping the number of server errors to a minimum.
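To get a quick picture of how a batch of responses breaks down, status codes from a crawl export or server log can be grouped along the lines described above. This is a minimal sketch: the category names and the sample list are hypothetical, and soft 404s cannot be detected this way because they return a 200 status.

```python
from collections import Counter


def classify(status):
    """Map an HTTP status code to a coarse crawl-related category."""
    if status in (404, 410):
        return "gone"          # removed pages
    if 500 <= status <= 599:
        return "server_error"  # 5xx responses slow crawling down
    if 300 <= status <= 399:
        return "redirect"
    if 200 <= status <= 299:
        return "ok"
    return "other"


def summarize(statuses):
    """Return (counts per category, share of server errors)."""
    counts = Counter(classify(s) for s in statuses)
    total = len(statuses)
    server_error_share = counts["server_error"] / total if total else 0.0
    return counts, server_error_share


if __name__ == "__main__":
    # Hypothetical status codes, e.g. pulled from a server log.
    sample = [200, 200, 404, 500, 503, 301, 410, 200]
    counts, share = summarize(sample)
    print(dict(counts))
    print(f"server errors: {share:.0%}")
```

A rising share of server errors over time is the kind of trend worth comparing against the Crawl Stats report.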

By the way, Google does not respect or adhere to the non-standard "crawl-delay" robots.txt rule.

Even if you use the nofollow attribute, the page can still be crawled and waste crawl budget if another page on your site, or any page on the web, does not label the link as nofollow.

9. Keep Sitemaps Up To Date

XML sitemaps are important for helping Google find your content and can speed things up.

It is extremely important to keep your sitemap URLs up to date, use the <lastmod> tag for updated content, and follow SEO best practices, including but not limited to the following.

- Only include URLs you want to have indexed by search engines.

- Only include URLs that return a 200 status code.

- Make sure a single sitemap file is smaller than 50MB and contains no more than 50,000 URLs, and if you decide to use multiple sitemaps, create an index sitemap that lists all of them.

- Make sure your sitemap is UTF-8 encoded.

- Include links to localized version(s) of each URL. (See documentation by Google.)

- Keep your sitemap up to date, i.e., update your sitemap every time there is a new URL or an old URL has been updated or deleted.
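Several of these points (UTF-8 encoding, a <lastmod> value per URL, and the 50,000-URL cap) can be handled when the sitemap files are generated. The sketch below uses Python's standard `xml.etree.ElementTree` and requires Python 3.8 or later; the function name and the example.com URLs are hypothetical. It does not check the 50MB size limit and does not build the index sitemap.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit mentioned above


def build_sitemaps(pages, max_urls=MAX_URLS):
    """Build one or more sitemap documents.

    pages: list of (url, lastmod) tuples, with lastmod as an ISO date string.
    Returns a list of UTF-8 encoded XML documents (bytes), one per file.
    """
    documents = []
    for start in range(0, len(pages), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for loc, lastmod in pages[start:start + max_urls]:
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = loc
            ET.SubElement(url, "lastmod").text = lastmod
        documents.append(
            ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
        )
    return documents


if __name__ == "__main__":
    # Hypothetical pages; in practice only include indexable URLs that return 200.
    pages = [(f"https://example.com/p/{i}", "2023-06-01") for i in range(5)]
    for number, doc in enumerate(build_sitemaps(pages, max_urls=2), start=1):
        print(f"sitemap-{number}.xml: {len(doc)} bytes")
```

Splitting by a fixed count keeps each file under the URL cap; a real generator would also track file size and write an index sitemap that references every file.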

10. Build A Good Site Structure

Having a good site structure is important for your SEO performance, both for indexing and for user experience.

Site structure can affect search engine results page (SERP) results in a number of ways, including crawlability, click-through rate, and user experience.

A clear and linear site structure uses your crawl budget efficiently, which helps Googlebot find any new or updated content.

Always remember the three-click rule, i.e., any user should be able to get from any page of your site to another with a maximum of three clicks.
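Click depth can be estimated with a breadth-first search over the site's internal link graph. The sketch below is a simplification of the rule as stated: it measures depth from a single start page (typically the home page) rather than between every pair of pages, and the link graph shown is hypothetical.

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search: minimum number of clicks from `start` to each page.

    links: dict mapping a page to the list of pages it links to.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, start, max_clicks=3):
    """Return the reachable pages that need more than `max_clicks` clicks."""
    depths = click_depths(links, start)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


if __name__ == "__main__":
    # Hypothetical internal link graph.
    links = {
        "/": ["/women", "/men"],
        "/women": ["/women/dresses"],
        "/women/dresses": ["/women/dresses/summer"],
        "/women/dresses/summer": ["/women/dresses/summer/item-1"],
    }
    print(too_deep(links, "/"))
```

Pages that never appear in the result of `click_depths` are not reachable through internal links at all, which is a separate problem worth checking.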

11. Internal Linking

The easier you make it for search engines to crawl and navigate through your site, the easier it is for crawlers to identify your structure, context, and important content.

Having internal links pointing to a web page can tell Google that this page is important, help establish an information hierarchy for the website, and help spread link equity throughout your site.

12. Always Monitor Crawl Stats

Always review and monitor GSC to see if your site has any issues during crawling, and look for ways to make your crawling more efficient.

You can use the Crawl Stats report to see if Googlebot has any issues crawling your site.

If availability errors or warnings are reported in GSC for your site, look for instances in the host availability graphs where Googlebot requests exceeded the red limit line, click into the graph to see which URLs were failing, and try to correlate those with issues on your site.

Also, you can use the URL Inspection Tool to test a few URLs on your site.

If the URL Inspection Tool returns host load warnings, that means that Googlebot cannot crawl as many URLs from your site as it discovered.

Wrapping Up

Crawl budget optimization is crucial for large sites due to their extensive size and complexity.

With numerous pages and dynamic content, search engine crawlers face challenges in efficiently and effectively crawling and indexing the site's content.

By optimizing crawl budget, site owners can prioritize the crawling and indexing of important and updated pages, ensuring that search engines spend their resources wisely and effectively.

This optimization process involves techniques such as improving site architecture, managing URL parameters, setting crawl priorities, and eliminating duplicate content, leading to better search engine visibility, improved user experience, and increased organic traffic for large websites.


Featured Image: BestForBest/Shutterstock