Introduction

We’re thrilled to unveil the English SDK for Apache Spark, a transformative tool designed to enrich your Spark experience. Apache Spark™, celebrated globally with over a billion annual downloads from 208 countries and regions, has significantly advanced large-scale data analytics. With the innovative application of Generative AI, our English SDK seeks to expand this vibrant community by making Spark more user-friendly and approachable than ever!

Motivation

GitHub Copilot has revolutionized the field of AI-assisted code development. While it is powerful, it expects users to understand the generated code before committing it, and reviewers need to understand the code as well in order to review it. This could be a limiting factor for its broader adoption. It also occasionally struggles with context, especially when dealing with Spark tables and DataFrames. The attached GIF illustrates this point, with Copilot proposing a window specification and referencing a non-existent ‘dept_id’ column, which requires some expertise to comprehend.

Instead of treating AI as the copilot, shall we make AI the chauffeur and take the luxury backseat? This is where the English SDK comes in. We find that state-of-the-art large language models know Spark really well, thanks to the great Spark community, which over the past ten years has contributed tons of open, high-quality content such as API documentation, open source projects, questions and answers, tutorials, and books. Now we bake Generative AI’s expert knowledge about Spark into the English SDK. Instead of having to understand the complex generated code, you can get the result with a simple instruction in English that many understand:

transformed_df = df.ai.transform('get 4 week moving average sales by dept')

The English SDK, with its understanding of Spark tables and DataFrames, handles the complexity, returning a DataFrame directly and correctly!
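To make concrete what that one-line instruction asks for, here is a minimal sketch in plain Python (no Spark involved) of a 4-week moving average of sales per department. The `(dept, week, sales)` row layout and the function name `moving_average_by_dept` are assumptions made for illustration and are not part of the English SDK; the SDK would instead generate equivalent PySpark or SQL against your DataFrame's actual schema.

```python
from collections import defaultdict, deque


def moving_average_by_dept(rows, window=4):
    """Return (dept, week, avg) tuples, averaging each row's sales with up to
    `window - 1` preceding weeks of the same department.

    `rows` is an iterable of (dept, week, sales) tuples; this layout is a
    hypothetical stand-in for a Spark DataFrame.
    """
    # Keep only the most recent `window` sales values for each department.
    recent = defaultdict(lambda: deque(maxlen=window))
    result = []
    # Sort by department, then week, so each window sees weeks in order.
    for dept, week, sales in sorted(rows, key=lambda r: (r[0], r[1])):
        recent[dept].append(sales)
        result.append((dept, week, sum(recent[dept]) / len(recent[dept])))
    return result


rows = [
    ("toys", 1, 100.0), ("toys", 2, 200.0), ("toys", 3, 300.0),
    ("toys", 4, 400.0), ("toys", 5, 500.0), ("books", 1, 50.0),
]
averages = moving_average_by_dept(rows)
```

Writing this by hand in PySpark typically means partitioning a window by department and ordering it by week, which is exactly the kind of detail the English instruction is meant to hide.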

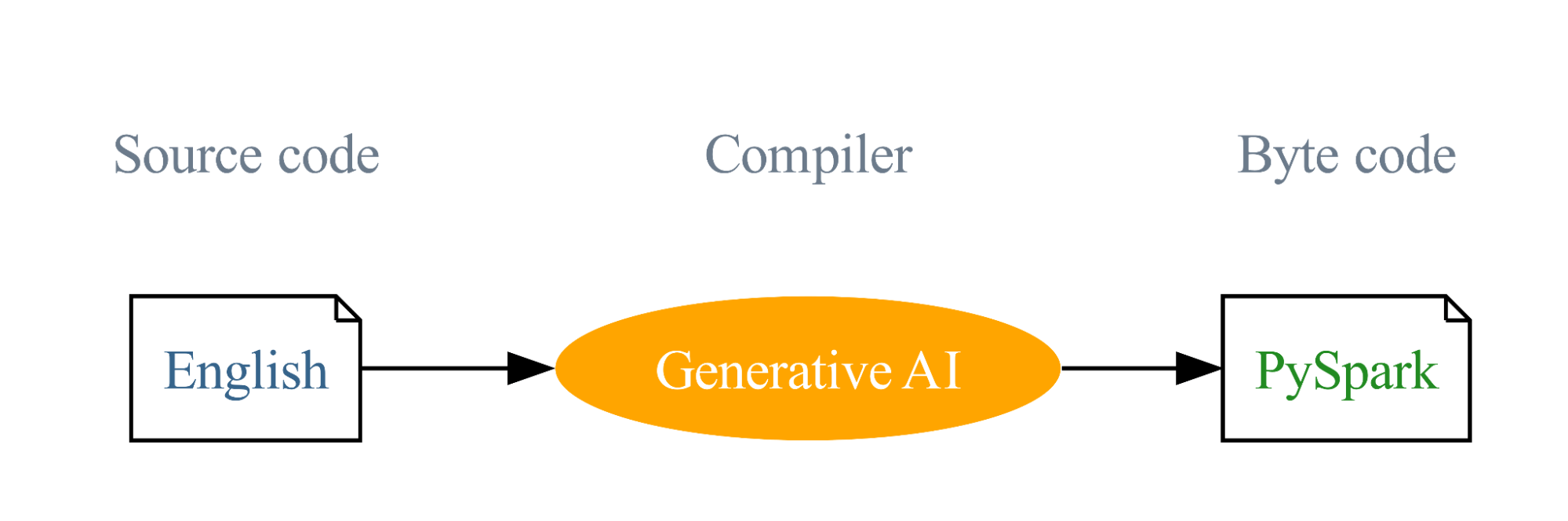

Our journey began with the vision of using English as a programming language, with Generative AI compiling these English instructions into PySpark and SQL code. This innovative approach is designed to lower the barriers to programming and simplify the learning curve. This vision is the driving force behind the English SDK, and our goal is to broaden the reach of Spark, making this very successful project even more successful.

Features of the English SDK

The English SDK simplifies the Spark development process by offering the following key features:

- Data Ingestion: The SDK can perform a web search using your provided description, use the LLM to determine the most appropriate result, and then smoothly incorporate the chosen web data into Spark, all in a single step.

- DataFrame Operations: The SDK provides functionality on a given DataFrame for transformation, plotting, and explanation based on your English description. These features significantly enhance the readability and efficiency of your code, making operations on DataFrames straightforward and intuitive.

- User-Defined Functions (UDFs): The SDK supports a streamlined process for creating UDFs. With a simple decorator, you only need to provide a docstring, and the AI handles the code completion. This feature simplifies UDF creation, letting you focus on the function definition while the AI takes care of the rest.

- Caching: The SDK incorporates caching to boost execution speed, make results reproducible, and save cost.
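As a rough illustration of why caching helps with speed, reproducibility, and cost, the sketch below stores the generated text for each prompt so that a repeated prompt never triggers a second model call. This is a conceptual toy, not the SDK's actual cache: the `PromptCache` class and the `fake_llm` stand-in are hypothetical names introduced only for this example.

```python
import hashlib


class PromptCache:
    """Toy in-memory cache mapping a prompt to the text generated for it."""

    def __init__(self, generate):
        self._generate = generate  # callable: prompt -> generated text (hypothetical)
        self._store = {}
        self.misses = 0  # number of times the generator was actually called

    def get(self, prompt):
        # Hash the prompt so keys have a fixed size regardless of prompt length.
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._generate(prompt)
        return self._store[key]


def fake_llm(prompt):
    # Stand-in for a real (slow, billed, possibly non-deterministic) model call.
    return f"-- code generated for: {prompt}"


cache = PromptCache(fake_llm)
first = cache.get("top brand with the highest growth")
second = cache.get("top brand with the highest growth")
```

The second lookup returns the stored text, so the same instruction yields the same code on every run and the model is billed only once.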

Examples

To illustrate how the English SDK can be used, let’s look at a few examples:

Data Ingestion

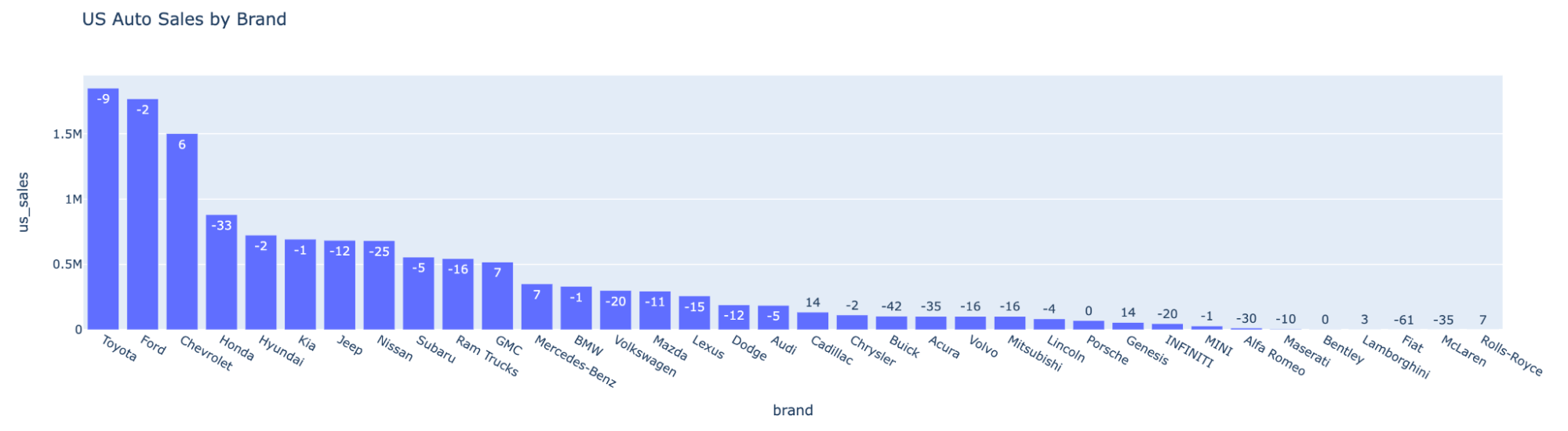

If you’re a data scientist who needs to ingest 2022 USA national auto sales, you can do this with just two lines of code:

spark_ai = SparkAI()

auto_df = spark_ai.create_df("2022 USA national auto sales by brand")

DataFrame Operations

Given a DataFrame df, the SDK allows you to run methods starting with df.ai. This includes transformations, plotting, DataFrame explanation, and so on.

To activate the partial functions for PySpark DataFrames:

spark_ai.activate()

To get an overview of `auto_df`:

auto_df.ai.plot()

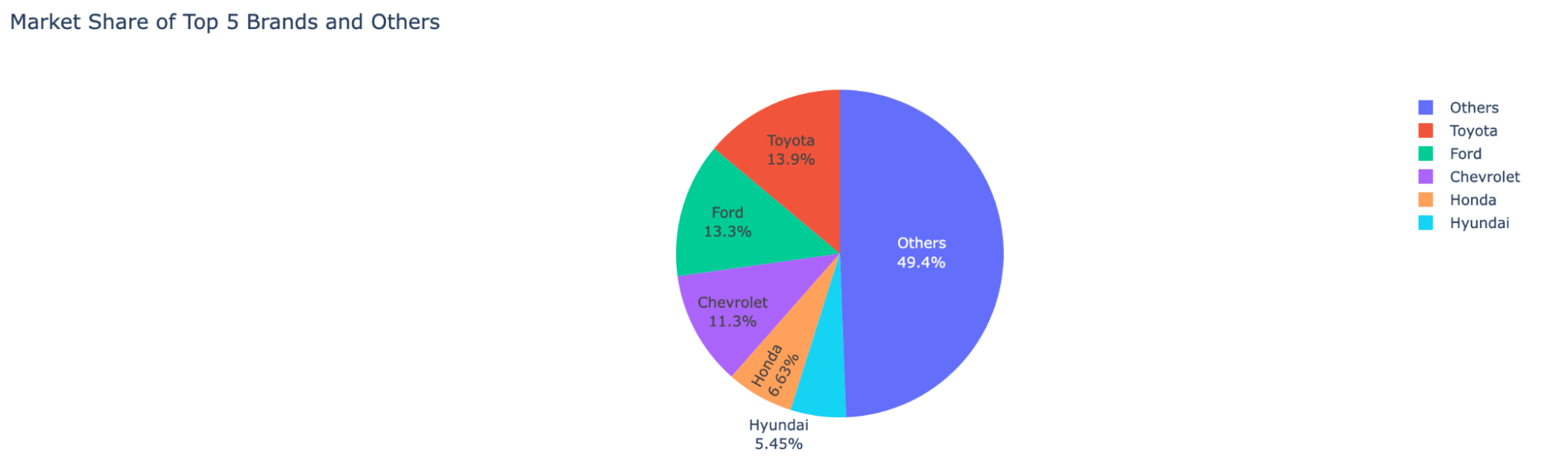

To view the market share distribution across car companies:

auto_df.ai.plot("pie chart for US sales market shares, show the top 5 brands and the sum of others")

To get the brand with the highest growth:

auto_top_growth_df = auto_df.ai.transform("top brand with the highest growth")

auto_top_growth_df.show()

| brand | us_sales_2022 | sales_change_vs_2021 |
| --- | --- | --- |
| Cadillac | 134726 | 14 |

To get the explanation of a DataFrame:

auto_top_growth_df.ai.explain()

In summary, this DataFrame is retrieving the brand with the highest sales change in 2022 compared to 2021. It presents the results sorted by sales change in descending order and only returns the top result.

User-Defined Functions (UDFs)

The SDK supports a simple and neat UDF creation process. With the @spark_ai.udf decorator, you only need to declare a function with a docstring, and the SDK will automatically generate the code behind the scenes:

@spark_ai.udf
def convert_grades(grade_percent: float) -> str:
    """Convert the grade percent to a letter grade using standard cutoffs"""
    ...

Now you can use the UDF in SQL queries or DataFrames:

SELECT student_id, convert_grades(grade_percent) FROM grade
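For intuition, the following is a hand-written sketch of the kind of function body the SDK might fill in for that docstring. It is not actual SDK output: the 90/80/70/60 cutoffs are one common reading of "standard cutoffs" and are an assumption here, and the code an LLM generates may differ.

```python
def convert_grades(grade_percent: float) -> str:
    """Convert the grade percent to a letter grade using standard cutoffs"""
    # Assumed cutoffs (90/80/70/60); generated code may choose differently.
    if grade_percent >= 90:
        return "A"
    if grade_percent >= 80:
        return "B"
    if grade_percent >= 70:
        return "C"
    if grade_percent >= 60:
        return "D"
    return "F"


letters = [convert_grades(p) for p in (95.0, 85.0, 70.0, 59.9)]
```

Because the docstring is the only specification the model sees, it is worth stating the exact cutoffs there if they matter to you.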

Conclusion

The English SDK for Apache Spark is an extremely simple yet powerful tool that can significantly enhance your development process. It’s designed to simplify complex tasks, reduce the amount of code required, and allow you to focus more on deriving insights from your data.

While the English SDK is in the early stages of development, we’re very excited about its potential. We encourage you to explore this innovative tool, experience the benefits firsthand, and consider contributing to the project. Don’t just observe the revolution; be part of it. Explore and harness the power of the English SDK at pyspark.ai today. Your insights and participation will be invaluable in refining the English SDK and expanding the accessibility of Apache Spark.