Introduction

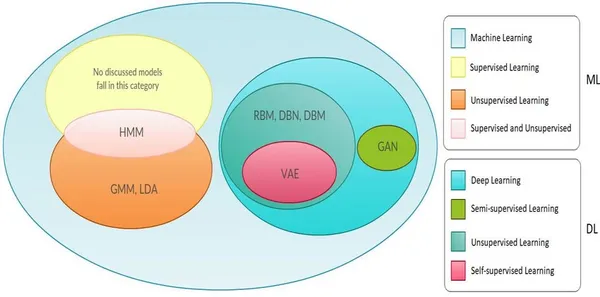

In the dynamic world of machine learning, one constant challenge is harnessing the full potential of limited labeled data. Enter semi-supervised learning: an ingenious approach that combines a small batch of labeled data with a trove of unlabeled data. In this article, we explore a game-changing technique: leveraging generative models, specifically Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). By the end of this journey, you’ll understand how these generative models can substantially improve the performance of semi-supervised learning algorithms, like a masterful twist in a gripping narrative.

Learning Objectives

- We’ll start by diving into semi-supervised learning, understanding why it matters, and seeing how it’s used in real-life machine learning scenarios.

- Next, we’ll introduce you to the fascinating world of generative models, focusing on VAEs and GANs, and learn how they supercharge semi-supervised learning.

- Get ready to roll up your sleeves as we guide you through the practical side. You’ll learn to integrate these generative models into real-world machine learning projects, from data prep to model training.

- We’ll highlight the perks, like improved model generalization and cost savings. Plus, we’ll showcase how this approach applies across different fields.

- Every journey has its challenges, and we’ll navigate these. We will also look at the important ethical considerations, so you’re well-equipped to use generative models responsibly in semi-supervised learning.

This article was published as a part of the Data Science Blogathon.

Introduction to Semi-Supervised Learning

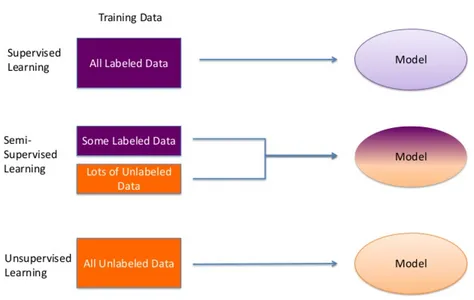

In the vast landscape of machine learning, acquiring labeled data can be daunting. It often involves time-consuming and costly annotation efforts, which can limit the scalability of supervised learning. Enter semi-supervised learning, a clever approach that bridges the gap between labeled and unlabeled data. It recognizes that while labeled data is crucial, vast pools of unlabeled data often lie dormant, ready to be harnessed.

Imagine you’re tasked with teaching a computer to recognize various animals in images, but labeling each one is a Herculean effort. That’s where semi-supervised learning comes in. It suggests mixing a small batch of labeled images with a large pool of unlabeled ones when training machine learning models. This approach lets the model tap into the potential of unlabeled data, improving its performance and adaptability. It’s like having a handful of guiding stars to navigate through a galaxy of information.
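To make the idea concrete, here is a minimal NumPy sketch of self-training (pseudo-labeling), one of the simplest semi-supervised techniques. It is not the generative approach this article focuses on. It only shows how unlabeled points can contribute to training: a model fit on the labeled points labels the unlabeled points it is confident about, then refits on the enlarged set. Every detail is an assumption chosen for illustration: the nearest-centroid classifier, the 0.9 confidence threshold, the toy two-blob data, and the helper names (`fit_centroids`, `predict_proba`, `self_train`), which are hypothetical. The sketch also assumes every class has at least one labeled example.

```python
import numpy as np

def fit_centroids(X, y, n_classes):
    # Hypothetical helper: one centroid (mean vector) per class
    return np.stack([X[y == c].mean(axis=0) for c in range(n_classes)])

def predict_proba(centroids, X):
    # Softmax over negative squared distances to each class centroid
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    logits = -d2 - (-d2).max(axis=1, keepdims=True)  # shift for numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)

def self_train(X_lab, y_lab, X_unlab, n_classes, threshold=0.9, max_rounds=10):
    """Hypothetical self-training loop: adopt confident predictions as pseudo-labels."""
    X_train, y_train = X_lab.copy(), y_lab.copy()
    remaining = X_unlab.copy()
    for _ in range(max_rounds):
        if len(remaining) == 0:
            break
        centroids = fit_centroids(X_train, y_train, n_classes)
        proba = predict_proba(centroids, remaining)
        confident = proba.max(axis=1) >= threshold
        if not confident.any():
            break
        # Move confidently predicted points (with their pseudo-labels) into the training set
        X_train = np.vstack([X_train, remaining[confident]])
        y_train = np.concatenate([y_train, proba[confident].argmax(axis=1)])
        remaining = remaining[~confident]
    return fit_centroids(X_train, y_train, n_classes), len(y_train)

# Toy data: two Gaussian blobs, only 2 labeled points per class, 200 unlabeled points
rng = np.random.default_rng(0)
X_unlab = np.vstack([rng.normal(-2, 1, (100, 2)), rng.normal(2, 1, (100, 2))])
X_lab = np.array([[-2.0, -2.0], [-1.5, -2.5], [2.0, 2.0], [2.5, 1.5]])
y_lab = np.array([0, 0, 1, 1])

centroids, n_used = self_train(X_lab, y_lab, X_unlab, n_classes=2)
print(n_used, "training points after self-training")
```

scikit-learn ships a general-purpose version of this loop as `sklearn.semi_supervised.SelfTrainingClassifier`, which marks unlabeled samples with the label `-1`.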

In our journey through semi-supervised learning, we’ll explore its significance, fundamental principles, and innovative techniques, with a particular focus on how generative models like VAEs and GANs can amplify its capabilities. Let’s unlock the power of semi-supervised learning, hand in hand with generative models.

Generative Models: Enhancing Semi-Supervised Learning

In the captivating world of machine learning, generative models emerge as real game-changers, breathing new life into semi-supervised learning. These models have a distinctive talent: they can not only learn the intricacies of data but also generate new data that mirrors what they have learned. Among the best performers in this arena are Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). Let’s embark on a journey to learn how these generative models become catalysts that push the boundaries of semi-supervised learning.

VAEs excel at capturing the essence of data distributions. They do so by mapping input data to a probability distribution over a hidden (latent) space, sampling from it, and then reconstructing the input. This ability plays a central role in semi-supervised learning, where VAEs encourage models to distill meaningful and concise data representations. Because these representations are learned from unlabeled data, they hold the key to better generalization even when labeled examples are limited. On the other side of the stage, GANs engage in an intriguing adversarial dance. Here, a generator strives to craft data virtually indistinguishable from real data, while a discriminator plays the role of a vigilant critic. This dynamic duet enables data augmentation and paves the way for generating entirely new data samples. Through these captivating performances, VAEs and GANs take the spotlight, ushering in a new era of semi-supervised learning.
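Both objectives can be written down compactly. The NumPy sketch below shows the VAE’s reparameterization trick and loss: reconstruction error plus the KL divergence between the encoder’s diagonal Gaussian q(z|x) and a standard normal prior. It then shows the standard GAN discriminator loss and the commonly used non-saturating generator loss. Several things are assumptions. The sketch assumes a Gaussian decoder with fixed variance, so reconstruction is measured with squared error (up to constants), and the function names are hypothetical. A real model would compute these losses inside an autodiff framework so that gradients can flow through them.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    # z = mu + sigma * eps with eps ~ N(0, I); in an autodiff framework this keeps
    # the sampling step differentiable with respect to mu and log_var
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def vae_loss(x, x_recon, mu, log_var):
    # Negative ELBO per sample: squared reconstruction error + KL(q(z|x) || N(0, I))
    recon = ((x - x_recon) ** 2).sum(axis=1)
    kl = -0.5 * (1 + log_var - mu ** 2 - np.exp(log_var)).sum(axis=1)
    return (recon + kl).mean()  # averaged over the batch

def gan_losses(d_real, d_fake, eps=1e-12):
    # d_real, d_fake: discriminator's probability that each sample is real
    d_loss = -(np.log(d_real + eps) + np.log(1 - d_fake + eps)).mean()
    # Non-saturating generator loss: the generator wants D(fake) close to 1
    g_loss = -np.log(d_fake + eps).mean()
    return d_loss, g_loss

rng = np.random.default_rng(0)
mu, log_var = np.zeros((4, 2)), np.zeros((4, 2))
z = reparameterize(mu, log_var, rng)
x = rng.standard_normal((4, 3))
# Perfect reconstruction and a posterior equal to the prior give zero loss
loss = vae_loss(x, x, mu, log_var)
# A discriminator that outputs 0.5 everywhere cannot tell real from fake
d_loss, g_loss = gan_losses(np.full(8, 0.5), np.full(8, 0.5))
print(loss, d_loss, g_loss)
```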

Practical Implementation Steps

Now that we’ve explored the theoretical aspects, it’s time to roll up our sleeves and delve into the practical implementation of semi-supervised learning with generative models. This is where the magic happens and ideas become real-world solutions. Here are the steps needed to bring this synergy to life:

Step 1: Data Preparation – Setting the Stage

Like any well-executed production, we need a solid foundation. Start by gathering your data. You should have a small set of labeled data and a substantial reservoir of unlabeled data. Make sure your data is clean, well-organized, and ready for the limelight.
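When experimenting, a common way to get this setup from a fully labeled dataset is to hide most of the labels. The sketch below keeps a fixed number of labels per class and marks the rest as `-1`, which is the convention scikit-learn’s `sklearn.semi_supervised` estimators use for unlabeled samples. `make_ssl_split` and the per-class budget are hypothetical choices, and the sketch assumes integer class labels.

```python
import numpy as np

def make_ssl_split(y, n_labeled_per_class, seed=0):
    """Hypothetical helper: hide all but n labels per class by replacing them with -1."""
    rng = np.random.default_rng(seed)
    y_ssl = np.full_like(y, -1)  # start with every sample unlabeled
    for c in np.unique(y):
        idx = np.flatnonzero(y == c)
        keep = rng.choice(idx, size=min(n_labeled_per_class, len(idx)), replace=False)
        y_ssl[keep] = c  # reveal the true label only for the kept samples
    return y_ssl

# Example: 150 samples in 3 classes, keeping 5 labels per class
y = np.repeat([0, 1, 2], 50)
y_ssl = make_ssl_split(y, n_labeled_per_class=5)
print((y_ssl != -1).sum(), "labeled,", (y_ssl == -1).sum(), "unlabeled")  # 15 labeled, 135 unlabeled
```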

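```python
# Example code for data loading and preprocessing
import pandas as pd
from sklearn.model_selection import train_test_split

feature_cols = ['feature1', 'feature2']  # placeholder feature names used throughout

def preprocess_data(df):
    """Hypothetical helper: fill missing feature values with the column median."""
    df = df.copy()
    df[feature_cols] = df[feature_cols].fillna(df[feature_cols].median())
    return df

# Load labeled data
labeled_data = pd.read_csv('labeled_data.csv')

# Load unlabeled data
unlabeled_data = pd.read_csv('unlabeled_data.csv')

# Preprocess data (here: missing-value imputation; add scaling as needed)
labeled_data = preprocess_data(labeled_data)
unlabeled_data = preprocess_data(unlabeled_data)

# Split labeled data into train and validation sets (stratified to keep class ratios)
train_data, validation_data = train_test_split(
    labeled_data, test_size=0.2, random_state=42, stratify=labeled_data['label']
)
```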

Step 2: Incorporating Generative Models – The Special Effects

Generative models, our stars of the show, take center stage. Integrate Variational Autoencoders (VAEs) or Generative Adversarial Networks (GANs) into your semi-supervised learning pipeline. You can train a generative model on your unlabeled data to learn useful representations, or use it for data augmentation. These models add the special effects that make your semi-supervised learning shine.
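One well-known way to put a GAN directly into a semi-supervised pipeline is the semi-supervised GAN described by Salimans et al. (2016) in "Improved Techniques for Training GANs". In this setup, the discriminator doubles as a classifier over the K real classes plus an extra "fake" class. The NumPy sketch below computes that discriminator loss from logits. It fixes the fake-class logit at 0, so D(x) = Z(x) / (Z(x) + 1) with Z(x) = sum_k exp(l_k). This is a loss-only illustration with hypothetical function names. The networks that produce the logits and the generator update are omitted.

```python
import numpy as np

def logsumexp(a):
    # Numerically stable log(sum(exp(a))) over the last axis
    m = a.max(axis=-1, keepdims=True)
    return (m + np.log(np.exp(a - m).sum(axis=-1, keepdims=True))).squeeze(-1)

def softplus(a):
    # log(1 + exp(a)), computed stably
    return np.logaddexp(0.0, a)

def sgan_discriminator_loss(logits_lab, y_lab, logits_unlab, logits_fake):
    """Hypothetical K+1-class discriminator loss; inputs are (n, K) logits for real classes."""
    # Supervised term: cross-entropy over the K real classes on labeled samples
    lse_lab = logsumexp(logits_lab)
    sup = (lse_lab - logits_lab[np.arange(len(y_lab)), y_lab]).mean()
    # Unsupervised term on real unlabeled data: -log D(x) = log(1 + Z) - log Z
    lse_unlab = logsumexp(logits_unlab)
    unsup_real = (softplus(lse_unlab) - lse_unlab).mean()
    # Unsupervised term on generated data: -log(1 - D(G(z))) = log(1 + Z)
    unsup_fake = softplus(logsumexp(logits_fake)).mean()
    return sup + unsup_real + unsup_fake

# With all-zero logits and K = 3: log 3 + log(4/3) + log 4 = log 16
K = 3
loss = sgan_discriminator_loss(np.zeros((2, K)), np.array([0, 2]),
                               np.zeros((5, K)), np.zeros((4, K)))
print(loss)
```

At prediction time, the class is simply `logits.argmax(axis=1)`. The implicit fake class never appears among the K logits, so it is never predicted.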

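```python
# Example code: pretraining a small VAE on unlabeled data (for representations or augmentation)
import numpy as np
import tensorflow as tf
from tensorflow.keras import Model, layers

x_unlabeled = unlabeled_data[['feature1', 'feature2']].to_numpy(dtype='float32')
input_dim, latent_dim = x_unlabeled.shape[1], 2  # latent size is an arbitrary choice

class Sampling(layers.Layer):
    """Reparameterization trick (z = mu + sigma * eps) that also adds the KL term."""
    def call(self, inputs):
        z_mean, z_log_var = inputs
        kl = -0.5 * tf.reduce_sum(1 + z_log_var - tf.square(z_mean) - tf.exp(z_log_var), axis=1)
        self.add_loss(tf.reduce_mean(kl))
        eps = tf.random.normal(tf.shape(z_mean))
        return z_mean + tf.exp(0.5 * z_log_var) * eps

# Encoder: input -> parameters of q(z|x) -> sampled z
input_layer = layers.Input(shape=(input_dim,))
h = layers.Dense(32, activation='relu')(input_layer)
z_mean = layers.Dense(latent_dim)(h)
z_log_var = layers.Dense(latent_dim)(h)
z = Sampling()([z_mean, z_log_var])

# Decoder: z -> reconstruction (a separate model so we can sample from it later)
latent_input = layers.Input(shape=(latent_dim,))
dec_h = layers.Dense(32, activation='relu')(latent_input)
decoder = Model(latent_input, layers.Dense(input_dim)(dec_h))
decoded = decoder(z)

# Create and compile the VAE: MSE reconstruction loss plus the KL term from Sampling
# (the relative weighting of the two terms is a tunable choice)
vae = Model(inputs=input_layer, outputs=decoded)
vae.compile(optimizer='adam', loss='mse')

# Pretrain the VAE on unlabeled data
vae.fit(x_unlabeled, x_unlabeled, epochs=10, batch_size=64)

# Data augmentation: decode samples drawn from the N(0, I) prior
synthetic = decoder.predict(np.random.normal(size=(1000, latent_dim)).astype('float32'))
```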

Step 3: Semi-Supervised Training – Rehearsing the Ensemble

Now it’s time to train your semi-supervised learning model. Combine the labeled data with the augmented data generated by the generative models. This ensemble cast of data helps your model extract important features and generalize effectively, just like a seasoned actor nailing their role.
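In practice, "combining" usually means stacking the real and generated samples into one training set. The NumPy sketch below does this and gives generated samples a lower per-sample weight so that they cannot outweigh the real labels. It assumes the generated samples already carry labels, for example pseudo-labels from a classifier or samples from a class-conditional generator. `combine_with_augmented` and its default weight of 0.5 are hypothetical choices, not a prescribed recipe.

```python
import numpy as np

def combine_with_augmented(x_lab, y_lab, x_aug, y_aug, aug_weight=0.5, seed=0):
    """Hypothetical helper: stack real and generated samples, down-weighting the latter."""
    x = np.concatenate([x_lab, x_aug], axis=0)
    y = np.concatenate([y_lab, y_aug], axis=0)
    # Weight 1.0 for real labeled samples, aug_weight for generated ones
    w = np.concatenate([np.ones(len(y_lab)), np.full(len(y_aug), aug_weight)])
    # Shuffle so that mini-batches mix real and generated examples
    perm = np.random.default_rng(seed).permutation(len(y))
    return x[perm], y[perm], w[perm]

# Example: 10 real labeled samples and 30 generated samples
x_lab, y_lab = np.zeros((10, 2)), np.zeros(10, dtype=int)
x_aug, y_aug = np.ones((30, 2)), np.ones(30, dtype=int)
x, y, w = combine_with_augmented(x_lab, y_lab, x_aug, y_aug)
print(x.shape, w.sum())
```

The returned `w` can be passed to Keras `Model.fit` through its `sample_weight` argument.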

```python
# Example code for semi-supervised training using TensorFlow/Keras
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Input

feature_cols = ['feature1', 'feature2']  # use the relevant features
input_dim = len(feature_cols)
num_classes = labeled_data['label'].nunique()  # assumes integer labels 0..num_classes-1

# Create the classifier (a small feed-forward neural network)
model = Sequential()
model.add(Input(shape=(input_dim,)))
model.add(Dense(128, activation='relu'))
model.add(Dense(64, activation='relu'))
model.add(Dense(num_classes, activation='softmax'))

# Integer labels call for sparse categorical cross-entropy
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# Train on the labeled split; augmented or pseudo-labeled samples would be
# concatenated to x and y here before calling fit()
model.fit(
    x=train_data[feature_cols],
    y=train_data['label'],
    epochs=50,  # adjust as needed
    batch_size=32,
    validation_data=(validation_data[feature_cols], validation_data['label']),
)
```

Step 4: Evaluation and Fine-Tuning – The Dress Rehearsal

Once the model is trained, it’s time for the dress rehearsal. Evaluate its performance on a separate validation dataset and fine-tune your model based on the results. Iterate and refine until you reach the best results you can, just as a director fine-tunes a performance until it’s flawless. Keep a separate test set for the final report, since repeated tuning against the validation set makes its score optimistic.
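With few labels, and with heavily imbalanced classes in settings such as fraud detection, accuracy alone can be misleading. A confusion matrix and macro-averaged F1 (the unweighted mean of per-class F1 scores) give a fuller picture, and the NumPy sketch below computes both. The function names are hypothetical. scikit-learn’s `confusion_matrix` and `f1_score(..., average='macro')` in `sklearn.metrics` compute the same quantities.

```python
import numpy as np

def confusion_matrix_np(y_true, y_pred, n_classes):
    # Rows: true class, columns: predicted class
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

def macro_f1(cm):
    tp = np.diag(cm).astype(float)
    pred_totals, true_totals = cm.sum(axis=0), cm.sum(axis=1)
    # Per-class precision and recall; classes with no predictions or no samples get 0
    precision = np.divide(tp, pred_totals, out=np.zeros_like(tp), where=pred_totals > 0)
    recall = np.divide(tp, true_totals, out=np.zeros_like(tp), where=true_totals > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return f1.mean()  # unweighted mean over classes

y_true = np.array([0, 0, 1, 1])
y_pred = np.array([0, 1, 1, 1])
cm = confusion_matrix_np(y_true, y_pred, n_classes=2)
print(cm)
print(f"macro F1: {macro_f1(cm):.3f}")  # macro F1: 0.733
```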

```python
# Example code for model evaluation and fine-tuning
from sklearn.metrics import accuracy_score

feature_cols = ['feature1', 'feature2']

# Predict class probabilities on the validation set
y_prob = model.predict(validation_data[feature_cols])

# Convert probabilities to class indices and calculate accuracy
accuracy = accuracy_score(validation_data['label'], y_prob.argmax(axis=1))
print(f"Validation accuracy: {accuracy:.3f}")

# Fine-tune hyperparameters or model architecture based on validation results,
# then iterate until performance stops improving
```

In these practical steps, we turned concepts into action, complete with code snippets to guide you. This is where the script comes to life, and your semi-supervised learning model, powered by generative models, takes its place in the spotlight. Next, let’s look at what this approach offers in practice.

Benefits and Real-world Applications

When we combine generative models with semi-supervised learning, the results can be game-changing. Here’s why it matters:

1. Enhanced Generalization: By harnessing unlabeled data, models trained this way can generalize well even from limited labeled examples, much like a talented actor who shines on stage even with minimal rehearsal.

2. Data Augmentation: Generative models like VAEs and GANs provide a rich source of augmented data. This can boost model robustness and help reduce overfitting, like an inventive props department creating endless scene variations.

3. Reduced Annotation Costs: Labeling data can be expensive. Integrating generative models reduces the need for extensive data annotation, optimizing your production budget.

4. Domain Adaptation: This approach can help models adapt to new, unseen domains with minimal labeled data, similar to an actor seamlessly transitioning between different roles.

5. Real-World Applications: The possibilities are vast. In natural language processing, it enhances sentiment analysis, language translation, and text generation. In computer vision, it improves image classification, object detection, and facial recognition. It’s a valuable asset in healthcare for disease diagnosis, in finance for fraud detection, and in autonomous driving for improved perception.

This isn’t just theory. It’s a practical game-changer across diverse industries, promising compelling results and performance, much like a well-executed film that leaves a lasting impact.

Challenges and Ethical Considerations

On our journey through the exciting terrain of semi-supervised learning with generative models, we also need to shed light on the challenges and ethical considerations that accompany this innovative approach.

- Data Quality and Distribution: One of the main challenges lies in ensuring the quality and representativeness of the data used to train generative models and, subsequently, the semi-supervised learner. Biased or noisy data can lead to skewed results, much like a flawed script affecting the entire production.

- Complex Model Training: Integrating generative models can introduce complexity into the training process. It requires expertise not only in traditional machine learning but also in the nuances of generative modeling.

- Data Privacy and Security: As we work with large amounts of data, ensuring data privacy and security becomes paramount. Handling sensitive or personal information requires strict protocols, similar to safeguarding confidential scripts in the entertainment industry.

- Bias and Fairness: Generative models must be used with vigilance to prevent biases from being perpetuated in the generated data or from influencing the model’s decisions.

- Regulatory Compliance: Several industries, such as healthcare and finance, have stringent regulations governing data usage. Adhering to these regulations is mandatory, much like ensuring a production complies with industry standards.

- Ethical AI: There is also the overarching ethical question of AI and machine learning’s impact on society. Ensuring that the benefits of these technologies are accessible and equitable to all is akin to promoting diversity and inclusion in the entertainment world.

As we navigate these challenges and ethical considerations, it’s crucial to approach the integration of generative models into semi-supervised learning with diligence and responsibility. Much like crafting a thought-provoking and socially conscious piece of art, this approach should aim to enrich society while minimizing harm.

Experimental Results and Case Studies

Now, let’s delve into the heart of the matter: experimental results that showcase the tangible impact of combining generative models with semi-supervised learning.

- Improved Image Classification: In computer vision, researchers conducted experiments using generative models to augment limited labeled datasets for image classification. The results were remarkable: models trained with this approach achieved significantly higher accuracy than traditional supervised learning methods.

- Language Translation with Limited Data: In natural language processing, case studies demonstrated the effectiveness of semi-supervised learning with generative models for language translation. With only a small amount of labeled translation data and a large amount of monolingual data, the models achieved impressive translation accuracy.

- Healthcare Diagnostics: Turning our attention to healthcare, experiments showcased the potential of this approach in medical diagnostics. Despite a shortage of labeled medical images, semi-supervised learning boosted by generative models enabled accurate disease detection.

- Fraud Detection in Finance: In the finance industry, case studies showcased the strength of generative models in semi-supervised learning for fraud detection. By augmenting labeled data with generated examples, models achieved high precision in identifying fraudulent transactions.

These semi-supervised learning case studies illustrate how this synergy can lead to remarkable results across diverse domains, much like professionals from different fields coming together to create something great.

Conclusion

In this exploration of generative models and semi-supervised learning, we have uncovered a groundbreaking approach that promises to revolutionize ML. This powerful synergy addresses the perennial challenge of data scarcity, enabling models to thrive in domains where labeled data is scarce. As we conclude, it’s evident that this integration represents a paradigm shift, unlocking new possibilities and redefining the landscape of artificial intelligence.

Key Takeaways

1. Efficiency Through Fusion: Semi-supervised learning with generative models bridges the gap between labeled and unlabeled data, offering a more efficient and cost-effective path to machine learning.

2. Generative Model Stars: Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) play pivotal roles in augmenting the learning process, akin to talented co-stars elevating a performance.

3. Practical Implementation Blueprint: Implementation involves careful data preparation, seamless integration of generative models, rigorous training, iterative refinement, and vigilant ethical considerations, mirroring the meticulous planning of a major production.

4. Versatile Real-World Impact: The benefits extend across diverse domains, from healthcare to finance, showing the adaptability and real-world applicability of this approach, much like a distinctive script that resonates with different audiences.

5. Ethical Responsibility: As with any tool, ethical considerations are at the forefront. Ensuring fairness, privacy, and responsible AI usage is paramount, similar to maintaining ethical standards in the arts and entertainment industry.

Frequently Asked Questions

Q1. What is semi-supervised learning, and why is it important?

A. It’s a machine learning approach that uses a limited set of labeled data together with a larger pool of unlabeled data. Its importance lies in its ability to improve learning in scenarios where little labeled data is available.

Q2. How do VAEs and GANs improve semi-supervised learning?

A. VAEs and GANs improve semi-supervised learning by producing meaningful data representations and augmenting labeled datasets, boosting model performance.

Q3. Can you outline the practical implementation steps?

A. Sure! Implementation involves data preparation, integrating generative models, semi-supervised training, and iterative model refinement, resembling a production process.

Q4. Which domains benefit from this approach?

A. Several domains, such as healthcare, finance, and natural language processing, benefit from improved model generalization, reduced annotation costs, and better performance, much like diverse fields benefiting from different and distinctive scripts.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.