(jittawit21/Shutterstock)

Large language models (LLMs) have dominated the data and AI conversation through the first eight months of 2023, courtesy of the whirlwind that is ChatGPT. Despite the consumer success, few companies have concrete plans to put commercial LLMs into production, with concerns about privacy and ethics leading the way.

A new report released by Predibase this week highlights the surprisingly low adoption rate of commercial LLMs among businesses. For the report, titled “Beyond the Buzz: A Look at Large Language,” Predibase commissioned a survey of 150 executives, data scientists, machine learning engineers, developers, and product managers at large and small companies around the world.

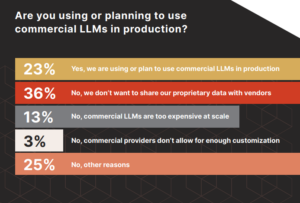

The survey found that, while 58% of businesses have started to work with LLMs, many remain in the experimentation phase, and only 23% of respondents had already deployed commercial LLMs or planned to.

Privacy and concerns about sharing data with vendors were cited as the top reason why businesses weren’t deploying commercial LLMs, followed by expense and a lack of customization.

“This report highlights the need for the industry to focus on the real opportunities and challenges versus blindly following the hype,” says Piero Molino, co-founder and CEO of Predibase, in a press release.

While few companies have plans to use commercial LLMs like Google’s Bard and OpenAI’s GPT-4, more companies are open to using open source LLMs, such as Llama, Alpaca, and Vicuna, the survey found.

Privacy, or the lack thereof, is a growing concern, particularly when it comes to LLMs, which are trained predominantly on human words. Large tech firms, such as Zoom, have come under fire for their training practices.

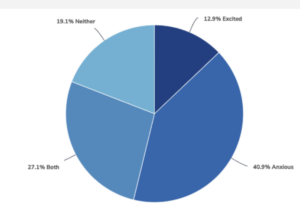

Now a new survey by PrivacyHawk has found that trust in big tech firms is at a nadir. The survey of 1,000 Americans, conducted by Propeller Research, found that nearly half of the U.S. population (45%) are “very or extremely concerned about their personal data being exploited, breached, or exposed,” while about 94% are “generally concerned.”

Only about 6% of the survey respondents are not concerned at all about their personal data risk, the company says in its report. However, nearly 90% said they would like to get a “privacy score,” similar to a credit score, that shows how exposed their data is.

More than three times as many people are worried about AI as are excited, according to PrivacyHawk’s “Consumer Privacy, Personal Data, & AI Sentiment 2023” report.

“The people have spoken: They want privacy; they demand trusted institutions like banks protect their data; they universally want Congress to pass a national privacy law; and they are concerned about how their personal data could be misused by artificial intelligence,” said Aaron Mendes, CEO and co-founder of PrivacyHawk, in a press release. “Our personal data is core to who we are, and it must be protected…”

In addition to privacy, ethical concerns are also providing headwinds to LLM adoption, according to a separate survey released this week by Conversica.

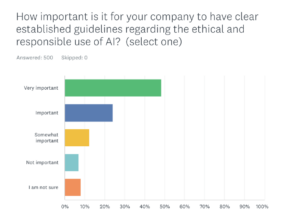

The chatbot developer surveyed 500 business owners, C-suite executives, and senior leadership personnel for its 2023 AI Ethics & Corporate Responsibility Survey. The survey found that while more than 40% of companies had adopted AI-powered services, only 6% have “established clear guidelines for the ethical and responsible use of AI.”

Clearly, there’s a gap between the AI aspirations that companies have and the steps they’ve taken to achieve those aspirations. The good news is that, among companies that are further along in their AI implementations, 13% more state they recognize the need for clear guidelines for ethical and responsible AI compared to the survey population as a whole.

“Those already employing AI have seen firsthand the challenges arising from implementation, increasing their recognition of the urgency of policy creation,” the company says in its report. “However, this alignment with the principle does not necessarily equate to implementation, as many companies [have] yet to formalize their policies.”

One in five respondents that have already deployed AI “admitted to limited or no knowledge about their organization’s AI-related policies,” the company says in its report. “Even more disconcerting, 36% of respondents claimed to be only ‘somewhat familiar’ with these issues. This knowledge gap could hinder informed decision-making and potentially expose businesses to unforeseen risks.”

Related Items:

Zoom Data Debacle Shines Light on SaaS Data Snooping

Digital Assistants Blending Right Into Real World

Anger Builds Over Big Tech’s Big Data Abuses