(Lightspring/Shutterstock)

The potential for AI to go off the rails and hurt people has increased significantly because of the explosion in the use of generative AI technologies, such as ChatGPT. That, in turn, is making it necessary to regulate certain high-risk AI use cases in the United States, the authors of a new Association for Computing Machinery paper said last week.

Using a technology like ChatGPT to write a poem, or to write smooth-sounding language when you know the content well and can error-check the results yourself, is one thing, said Jeanna Matthews, one of the authors of the ACM Technology Policy Council’s new paper, titled “Principles for the Development, Deployment, and Use of Generative AI Technologies.”

“It’s a completely different thing to expect the information you find there to be accurate in a situation where you aren’t capable of error-checking it for yourself,” Matthews said. “Those are two very different use cases. And what we’re saying is there should be limits and guidance on deployment and use. ‘It’s safe to use this for this purpose. It’s not safe to use it for this purpose.’”

The new ACM paper lays out eight principles that it recommends users follow when developing AI systems. The first four principles (transparency; auditability and contestability; limiting environmental impacts; and heightened security and privacy) were borrowed from a previous paper published in October 2022.

Generative AI adoption is accelerating the debate over AI regulation (khunkornStudio/Shutterstock)

OpenAI released ChatGPT the following month, which kicked off one of the most fascinating, and tumultuous, periods in AI’s history. The subsequent surge in generative AI usage and popularity necessitated the addition of four more principles in the just-released paper: limits and guidance on deployment and use; ownership; personal data control; and correctability.

The ACM paper recommends that new laws be put in place to limit the use of generative AI in certain situations. “No high-risk AI system should be allowed to operate without clear and adequate safeguards, including a ‘human in the loop’ and clear consensus among relevant stakeholders that the system’s benefits will substantially outweigh its potential negative impacts,” the ACM paper says.

As the use of generative AI has widened over the past eight months, the need to limit the most harmful and high-risk uses has become clearer, says Matthews, who co-wrote the paper with Ravi Jain and Alejandro Saucedo. The goal of the paper was not only to inform engineers and business leaders about the possible ramifications of using high-risk generative AI, but also to give policymakers in Washington, D.C. a framework to follow as they craft new regulation.

After incubating for a few years, generative AI burst onto the scene in a big way, says Jain. “It’s been in the works for years, but it became really visible and tangible with things like Midjourney and ChatGPT and those kinds of things,” Jain says. “Sometimes you need things to become… really instantiated for people to really appreciate the dangers and the opportunities as well.”

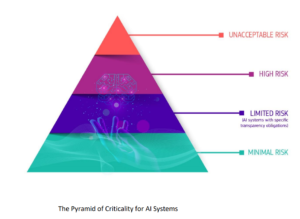

There are some parallels between the new ACM principles and the EU AI Act (on track to possibly become law in 2024), especially around the concept of a hierarchy of risk levels, Matthews says. Some AI use cases are just fine, while others can have detrimental consequences for people’s lives if bad decisions are made.

The US should adopt a risk-based approach like the one the Europeans have proposed in the EU AI Act, which regulates the highest-risk AI use cases, the authors of a new ACM report say

“We see a real gap there,” Matthews tells Datanami. “There’s been an implication that you can use this in scenarios that we think are just not responsible. But there are other scenarios in which it can be great. So keeping those straight is important, and we think the people who are releasing technologies like this could be much more involved in setting those boundaries.”

The tendency of large language models (LLMs) to hallucinate things that aren’t there is a real problem in certain situations, Matthews says.

“It’s really not a tool that should be used primarily for information discovery, because it produces inaccurate answers. It makes stuff up,” she says. “A lot of it is the way it’s presented or marketed to people. If it was marketed to people as ‘this creates plausible language, but very often inaccurate language,’ that would be a completely different phrasing than ‘Oh my gosh, this is a genius. Go ask all your questions.’”

The ACM’s new principles regarding ownership of AI and control over personal data are also byproducts of the LLM and generative AI explosion. Attention should be paid to understanding looming copyright and intellectual property issues, Jain says.

“Who owns an artifact that’s generated by AI?” says Jain, who is the Chair of the ACM Technology Policy Council’s Working Group on Generative AI. “But on the flip side, who has the liability or the accountability for it? Is it the provider of the base model? Is it the provider of the service? Is it the original creator of the content from which the AI-based content was derived? That’s not clear. That’s an area of IP, law, and policy that needs to be figured out, and fairly quickly, in my opinion.”

Issues over personal data are also coming to the fore, pitting the Internet’s historically open underpinnings against the ability of people to profit off data shared openly through generative AI products. Matthews uses an analogy about the expectations one has when inviting a housecleaner into one’s home.

“Yes, they’re going to need to have access to your personal spaces to clean your house,” she says. “And if you found that they were taking pictures of everything in your closet, everything in your bathroom, everything in your bedroom, every piece of paper they encountered, and then doing stuff with it that you had no [idea] they were going to do, you wouldn’t say, oh, that’s a great, innovative business model. You’d say, that’s terrible, get out of my house.”

While the use of AI is not broadly regulated outside of industries that are already heavily regulated, such as healthcare and financial services, there is broad, bipartisan support building for possible regulation, Matthews says. The ACM has been working with the office of Senator Chuck Schumer (D-NY) on possible legislation. Regulation could come sooner than expected, as Sen. Schumer has said that U.S. federal regulation is possibly months away, not years, Matthews says.

There are many issues around generative AI, but the need to rein in the most dangerous, high-risk use cases appears to be top of mind. The ACM recommends following the European model here by creating a hierarchy of risks and focusing on the highest-risk use cases. That would allow AI innovation to continue while stamping out the most dangerous aspects, Matthews says.

“The way to have some regulation without inappropriately harming innovation is to laser-focus regulation on the systems with the highest severity and highest probability of consequences,” Matthews says. “And those systems, I bet most people would agree, deserve some mandatory risk management. And the fact that we don’t have anything currently requiring that seems like a problem, I think, to most people.”

While the ACM has not called for a pause in AI research, as other groups have done, it’s clear that the no-holds-barred approach to American AI innovation can’t continue as is, Matthews says. “I think it’s a case of the Wild West,” she adds.
