We’re thrilled to announce which you could run much more workloads on Databricks’ extremely environment friendly multi-user clusters because of new safety and governance options in Unity Catalog Knowledge groups can now develop and run SQL, Python and Scala workloads securely on shared compute assets. With that, Databricks is the one platform within the trade providing fine-grained entry management on shared compute for Scala, Python and SQL Spark workloads.

Beginning with Databricks Runtime 13.3 LTS, you’ll be able to seamlessly transfer your workloads to shared clusters, because of the next options which are obtainable on shared clusters:

- Cluster libraries and Init scripts: Streamline cluster setup by putting in cluster libraries and executing init scripts on startup, with enhanced safety and governance to outline who can set up what.

- Scala: Securely run multi-user Scala workloads alongside Python and SQL, with full consumer code isolation amongst concurrent customers and implementing Unity Catalog permissions.

- Python and Pandas UDFs. Execute Python and (scalar) Pandas UDFs securely, with full consumer code isolation amongst concurrent customers.

- Single-node Machine Studying: Run scikit-learn, XGBoost, prophet and different standard ML libraries utilizing the Spark driver node,, and use MLflow for managing the end-to-end machine studying lifecycle.

- Structured Streaming: Develop real-time knowledge processing and evaluation options utilizing structured streaming.

Simpler knowledge entry in Unity Catalog

When making a cluster to work with knowledge ruled by Unity Catalog, you’ll be able to select between two entry modes:

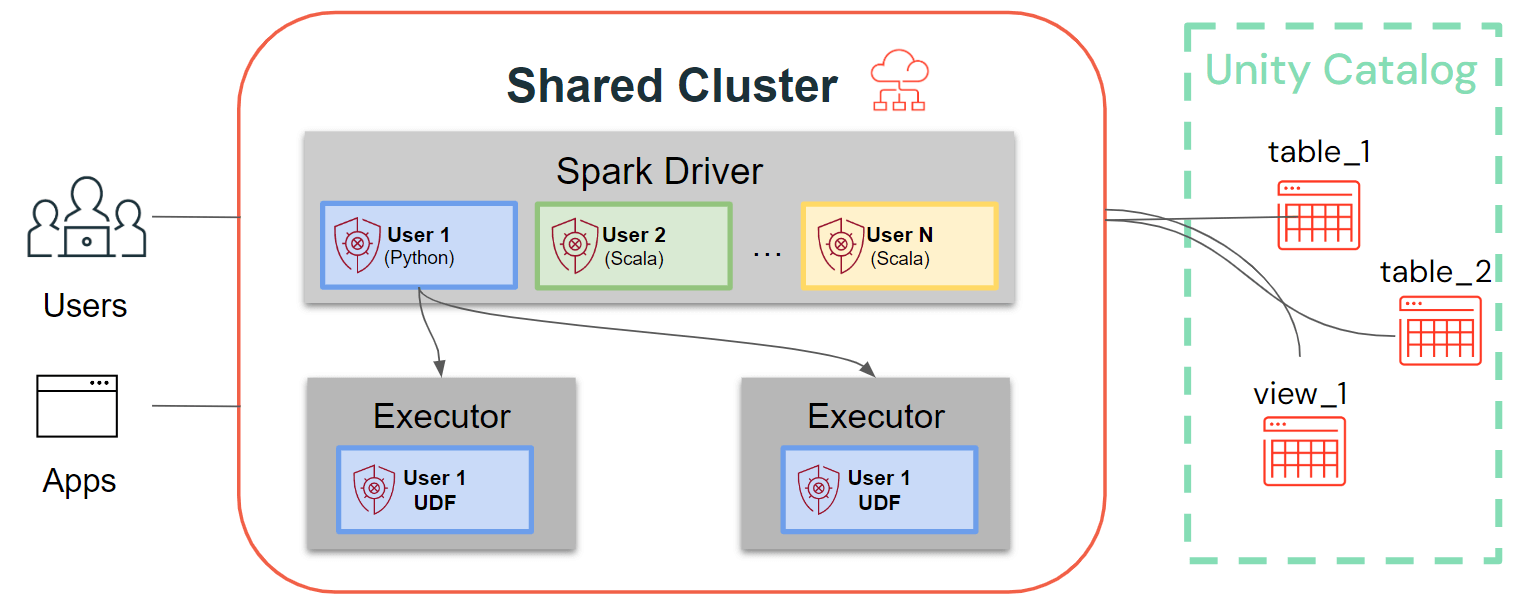

- Clusters in shared entry mode – or simply shared clusters – are the advisable compute choices for many workloads. Shared clusters enable any variety of customers to connect and concurrently execute workloads on the identical compute useful resource, permitting for important price financial savings, simplified cluster administration, and holistic knowledge governance together with fine-grained entry management. That is achieved by Unity Catalog’s consumer workload isolation which runs any SQL, Python and Scala consumer code in full isolation with no entry to lower-level assets.

- Clusters in single-user entry mode are advisable for workloads requiring privileged machine entry or utilizing RDD APIs, distributed ML, GPUs, Databricks Container Service or R.

Whereas single-user clusters comply with the standard Spark structure, the place consumer code runs on Spark with privileged entry to the underlying machine, shared clusters guarantee consumer isolation of that code. The determine under illustrates the structure and isolation primitives distinctive to shared clusters: Any client-side consumer code (Python, Scala) runs absolutely remoted and UDFs working on Spark executors execute in remoted environments. With this structure, we are able to securely multiplex workloads on the identical compute assets and provide a collaborative, cost-efficient and safe resolution on the similar time.

Newest enhancements for Shared Clusters: Cluster Libraries, Init Scripts, Python UDFs, Scala, ML, and Streaming Help

Configure your shared cluster utilizing cluster libraries & init scripts

Cluster libraries assist you to seamlessly share and handle libraries for a cluster and even throughout a number of clusters, guaranteeing constant variations and decreasing the necessity for repetitive installations. Whether or not you should incorporate machine studying frameworks, database connectors, or different important parts into your clusters, cluster libraries present a centralized and easy resolution now obtainable on shared clusters.

Libraries may be put in from Unity Catalog volumes (AWS, Azure, GCP) , Workspace recordsdata (AWS, Azure, GCP), PyPI/Maven and cloud storage places, utilizing the present Cluster UI or API.

Utilizing init scripts, as a cluster administrator you’ll be able to execute customized scripts in the course of the cluster creation course of to automate duties comparable to establishing authentication mechanisms, configuring community settings, or initializing knowledge sources.

Init scripts may be put in on shared clusters, both straight throughout cluster creation or for a fleet of clusters utilizing cluster insurance policies (AWS, Azure, GCP). For max flexibility, you’ll be able to select whether or not to make use of an init script from Unity Catalog volumes (AWS, Azure, GCP) or cloud storage.

As an extra layer of safety, we introduce an allowlist (AWS, Azure, GCP) that governs the set up of cluster libraries (jars) and init scripts. This places directors accountable for managing them on shared clusters. For every metastore, the metastore admin can configure the volumes and cloud storage places from which libraries (jars) and init scripts may be put in, thereby offering a centralized repository of trusted assets and stopping unauthorized installations. This enables for extra granular management over the cluster configurations and helps keep consistency throughout your group’s knowledge workflows.

Carry your Scala workloads

Scala is now supported on shared clusters ruled by Unity Catalog. Knowledge engineers can leverage Scala’s flexibility and efficiency to deal with all kinds of huge knowledge challenges, collaboratively on the identical cluster and profiting from the Unity Catalog governance mannequin.

Integrating Scala into your present Databricks workflow is a breeze. Merely choose Databricks runtime 13.3 LTS or later when making a shared cluster, and you can be prepared to jot down and execute Scala code alongside different supported languages.

Leverage Consumer-Outlined Capabilities (UDFs), Machine Studying & Structured Streaming

That is not all! We’re delighted to unveil extra game-changing developments for shared clusters.

Help for Python and Pandas Consumer Outlined Capabilities (UDFs): Now you can harness the ability of each Python and (scalar) Pandas UDFs additionally on shared clusters. Simply deliver your workloads to shared clusters seamlessly – no code diversifications are wanted. By isolating the execution of UDF consumer code on Spark executors in a sandboxed setting, shared clusters present an extra layer of safety in your knowledge, stopping unauthorized entry and potential breaches.

Help for all standard ML libraries utilizing Spark driver node and MLflow: Whether or not you are working with Scikit-learn, XGBoost, prophet, and different standard ML libraries, now you can seamlessly construct, prepare, and deploy machine studying fashions straight on shared clusters. To put in ML libraries for all customers, you need to use the brand new cluster libraries. With built-in help for MLflow (2.2.0 or later), managing the end-to-end machine studying lifecycle has by no means been simpler.

Structured Streaming is now additionally obtainable on Shared Clusters ruled by Unity Catalog. This transformative addition permits real-time knowledge processing and evaluation, revolutionizing how your knowledge groups deal with streaming workloads collaboratively.

Begin at the moment, extra good issues to come back

Uncover the ability of Scala, Cluster libraries, Python UDFs, single-node ML, and streaming on shared clusters at the moment just by utilizing Databricks Runtime 13.3 LTS or above. Please discuss with the fast begin guides (AWS, Azure, GCP) to be taught extra and begin your journey towards knowledge excellence.

Within the coming weeks and months, we’ll proceed to unify the Unity Catalog’s compute structure and make it even easier to work with Unity Catalog!