For over a year now, Databricks and dbt Labs have been working together to realize the vision of simplified real-time analytics engineering, combining dbt’s highly popular analytics engineering framework with the Databricks Lakehouse Platform, the best place to build and run data pipelines. Together, Databricks and dbt Labs enable data teams to collaborate on the lakehouse, simplifying analytics and turning raw data into insights efficiently and cost-effectively. Many of our customers, such as Conde Nast, Chick-fil-A, and Zurich Insurance, are building solutions with Databricks and dbt Cloud.

The collaboration between Databricks and dbt Labs brings together two industry leaders with complementary strengths. Databricks, the data and AI company, provides a unified environment that seamlessly integrates data engineering, data science, and analytics. dbt Labs helps data practitioners work more like software engineers to produce trusted datasets for reporting, ML modeling, and operational workflows, using SQL and Python. dbt Labs calls this practice analytics engineering.

What’s difficult about analytics today?

Organizations looking for streamlined analytics frequently encounter three significant obstacles:

- Data Silos Hinder Collaboration: Within organizations, multiple teams take different approaches to working with data, resulting in fragmented processes and functional silos. This lack of cohesion leads to inefficiencies, making it difficult for data engineers, analysts, and scientists to collaborate effectively and deliver end-to-end data solutions.

- High Complexity and Costs for Data Transformations: To achieve analytics excellence, organizations often rely on separate ingestion pipelines or decoupled integration tools. Unfortunately, this approach introduces unnecessary costs and complexity. Manually refreshing pipelines when new data becomes available or when changes are made is a time-consuming and resource-intensive process. Incremental changes often require a full recompute, leading to excessive cloud consumption and increased costs.

- Lack of End-to-End Lineage and Access Control: Complex data projects bring numerous dependencies and challenges. Without proper governance, organizations risk using incorrect data or inadvertently breaking critical pipelines during changes. The absence of full visibility into model dependencies makes data lineage hard to understand, compromising data integrity and reliability.

Together, Databricks and dbt Labs aim to solve these problems. Databricks’ simple, unified lakehouse platform provides the optimal environment for running dbt, a widely used data transformation framework. dbt Cloud is the fastest and easiest way to deploy dbt, empowering data teams to build scalable and maintainable data transformation pipelines.

Databricks and dbt Cloud together are a game changer

Databricks and dbt Cloud enable data teams to collaborate on the lakehouse. By simplifying analytics on the lakehouse, data practitioners can turn raw data into insights in the most efficient, cost-effective way. Together, Databricks and dbt Cloud help users break down data silos to collaborate effectively, simplify ingestion and transformation to lower TCO, and unify governance for all their real-time and historical data.

Collaborate on data effectively across your organization

The Databricks Lakehouse Platform is a single, integrated platform for all data, analytics and AI workloads. With support for multiple languages, CI/CD and testing, and unified orchestration across the lakehouse, dbt Cloud on Databricks is the best place for all data practitioners (data engineers, data scientists, analysts, and analytics engineers) to work together easily, building data pipelines and delivering solutions with the languages, frameworks and tools they already know.

Simplify ingestion and transformation to lower TCO

Build and run pipelines automatically using the right set of resources. Simplify ingestion and automate incrementalization within dbt models to increase development agility and eliminate waste, so that you pay only for what’s required and nothing more.

We also recently announced two new capabilities for analytics engineering on Databricks that simplify ingestion and transformation for dbt users to lower TCO: Streaming Tables and Materialized Views.

1. Streaming Tables

Data ingestion from cloud storage and queues in dbt projects

Previously, to ingest data from cloud storage (e.g. AWS S3) or message queues (e.g. Apache Kafka), dbt users had to set up a separate pipeline or use a third-party data integration tool before they could access that data in dbt.

Databricks Streaming Tables enable continuous, scalable ingestion from any data source, including cloud storage, message buses and more.

And now, with dbt Cloud + Streaming Tables on the Databricks Lakehouse Platform, ingestion from these sources is built into dbt projects.
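As a simplified mental model, a streaming table processes each piece of newly arrived input once and appends it to the target, rather than rescanning the whole source on every run. The pure-Python sketch below illustrates only that idea. It assumes files can be modeled as an in-memory dict, and the `ingest_new_files` helper and its checkpoint set are hypothetical. They are not the Databricks Streaming Tables or dbt API, which track this progress for you.

```python
from typing import Dict, List, Set, Tuple

Row = Tuple[str, int]  # hypothetical record shape: (id, value)


def ingest_new_files(
    source: Dict[str, List[Row]],
    processed: Set[str],
    target: List[Row],
) -> int:
    """Append rows from files not yet ingested; return how many rows were appended.

    Hypothetical helper: `processed` stands in for a checkpoint that records
    which files earlier runs already consumed, so reruns skip them.
    """
    appended = 0
    for name in sorted(source):  # deterministic order for repeatable runs
        if name in processed:
            continue  # already ingested on an earlier run
        target.extend(source[name])
        processed.add(name)
        appended += len(source[name])
    return appended


# Usage: two runs over a landing area where one new file arrives in between.
landing = {"2023-06-01.json": [("a", 1), ("b", 2)]}
checkpoint: Set[str] = set()
table: List[Row] = []
ingest_new_files(landing, checkpoint, table)        # ingests both rows
landing["2023-06-02.json"] = [("c", 3)]
ingest_new_files(landing, checkpoint, table)        # ingests only the new file
print(table)
```

The second call touches only the file that arrived after the first run. This is the property that removes the need for a separate pipeline that reloads everything.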

2. Materialized Views

Automatic incrementalization for dbt models

Previously, to make a dbt pipeline refresh in an efficient, incremental manner, analytics engineers had to define incremental models and manually craft specific incremental strategies for various workload types (e.g. handling partitions, joins, and aggregations).

With dbt + Materialized Views on the Databricks Lakehouse Platform, it’s much easier to build efficient pipelines without complex user input. dbt uses Materialized Views within its pipelines, leveraging Databricks’ powerful incremental refresh capabilities to significantly improve runtime and ease of use, so data teams can access insights faster and more efficiently. Users can build and run pipelines backed by Materialized Views and reduce infrastructure costs through efficient, incremental computation.

Although materialized views are not a new concept, the dbt Cloud/Databricks integration is significant because both batch and streaming pipelines are now available in one place to your entire data team, combining the streaming capabilities of Delta Live Tables (DLT) infrastructure with the accessibility of the dbt framework. As a result, data practitioners working in dbt Cloud on the Databricks Lakehouse Platform can simply use SQL to define a dataset that is automatically and incrementally kept up to date. Materialized Views are a game changer for simplifying the user experience with automatic, incremental refreshing of dbt models, saving time and costs.
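To see why incremental refresh saves compute, consider an aggregate such as a per-key sum and count. A full recompute rescans every row. An incremental refresh folds only the new rows into the previously stored result and reaches the same answer. The pure-Python sketch below is a conceptual illustration under the assumption of append-only input. The function names and the `(key, amount)` row shape are hypothetical, and this is not how Databricks implements Materialized Views internally.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Row = Tuple[str, float]             # hypothetical row: (group key, amount)
Agg = Dict[str, Tuple[float, int]]  # group key -> (sum, count)


def full_recompute(rows: Iterable[Row]) -> Agg:
    """Rebuild the aggregate from scratch by scanning every row."""
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for key, amount in rows:
        sums[key] += amount
        counts[key] += 1
    return {k: (sums[k], counts[k]) for k in sums}


def incremental_refresh(state: Agg, new_rows: Iterable[Row]) -> Agg:
    """Fold only newly appended rows into the previously stored aggregate."""
    updated = dict(state)  # leave the previous snapshot untouched
    for key, amount in new_rows:
        total, n = updated.get(key, (0.0, 0))
        updated[key] = (total + amount, n + 1)
    return updated


# Usage: the incremental result matches a full rebuild over all rows.
history = [("claims", 100.0), ("underwriting", 40.0)]
view = full_recompute(history)
batch = [("claims", 25.0), ("risk", 10.0)]
view = incremental_refresh(view, batch)
assert view == full_recompute(history + batch)
```

The append-only sum/count case shown here is the easiest one. Updates, deletes, and joins need more bookkeeping, and that is the kind of hand-written incremental strategy described above that analytics engineers previously had to maintain themselves.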

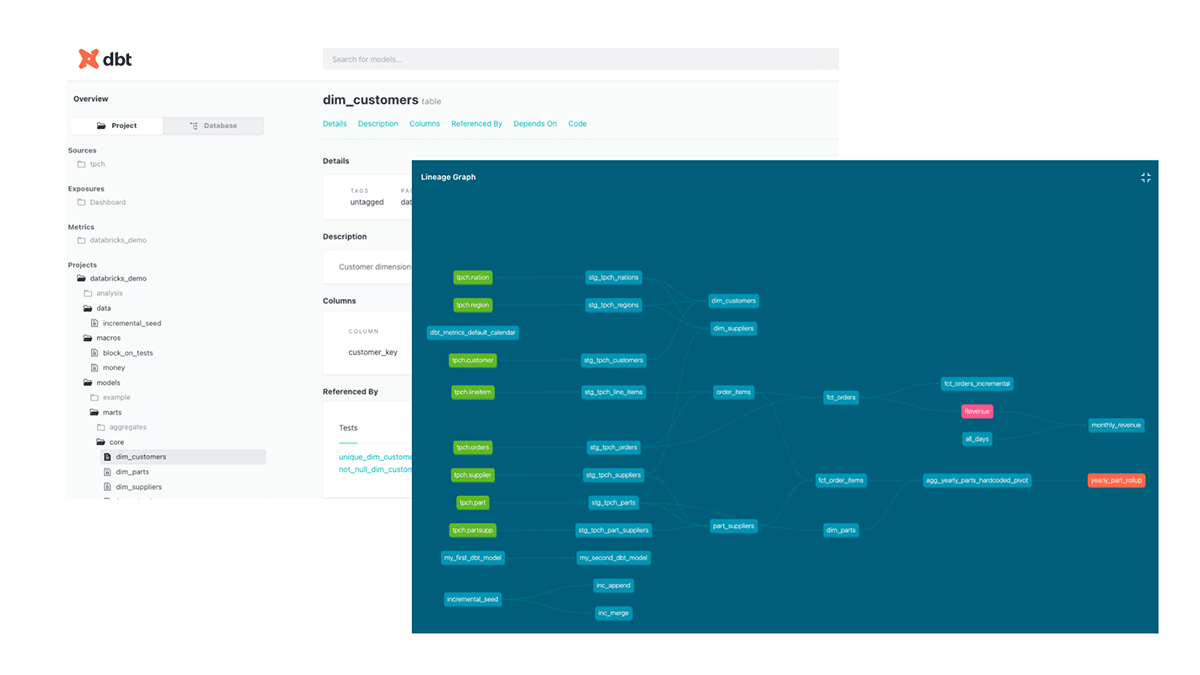

Unify governance for all your real-time and historical data with dbt and Unity Catalog

From data ingestion to transformation, with full visibility into upstream and downstream object dependencies, dbt and Databricks Unity Catalog provide the complete data lineage and governance organizations need to trust their data. Understanding dependencies becomes simple, which mitigates risk and forms a solid foundation for effective decision-making.
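Downstream visibility matters most when you change a model and need to know what could break. dbt builds a dependency graph from `ref()` calls. Given such a graph, "what depends on this model?" becomes a graph traversal. The pure-Python sketch below shows that traversal on a hypothetical project: the model names and the `UPSTREAM` mapping are made up for illustration. This is not how Unity Catalog computes lineage.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical project: each model maps to the upstream models it reads from,
# the kind of edges dbt derives from ref() calls.
UPSTREAM: Dict[str, List[str]] = {
    "raw_claims": [],
    "raw_policies": [],
    "silver_claims": ["raw_claims"],
    "silver_policies": ["raw_policies"],
    "gold_claims_by_policy": ["silver_claims", "silver_policies"],
}


def downstream_of(model: str, upstream: Dict[str, List[str]]) -> Set[str]:
    """Return every model that directly or transitively depends on `model`."""
    # Invert parent edges into child edges so we can walk downstream.
    children: Dict[str, List[str]] = {m: [] for m in upstream}
    for child, parents in upstream.items():
        for parent in parents:
            children.setdefault(parent, []).append(child)
    # Breadth-first search over child edges, visiting each model once.
    seen: Set[str] = set()
    queue = deque([model])
    while queue:
        current = queue.popleft()
        for child in children.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


print(sorted(downstream_of("raw_claims", UPSTREAM)))
# ['gold_claims_by_policy', 'silver_claims']
```

In dbt itself, the `+` graph operator in node selection (for example, `dbt ls --select raw_claims+`) selects a model together with its descendants. It answers the same question against the real project graph.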

Transforming the Insurance Industry to Create Business Value with Advanced Analytics and AI

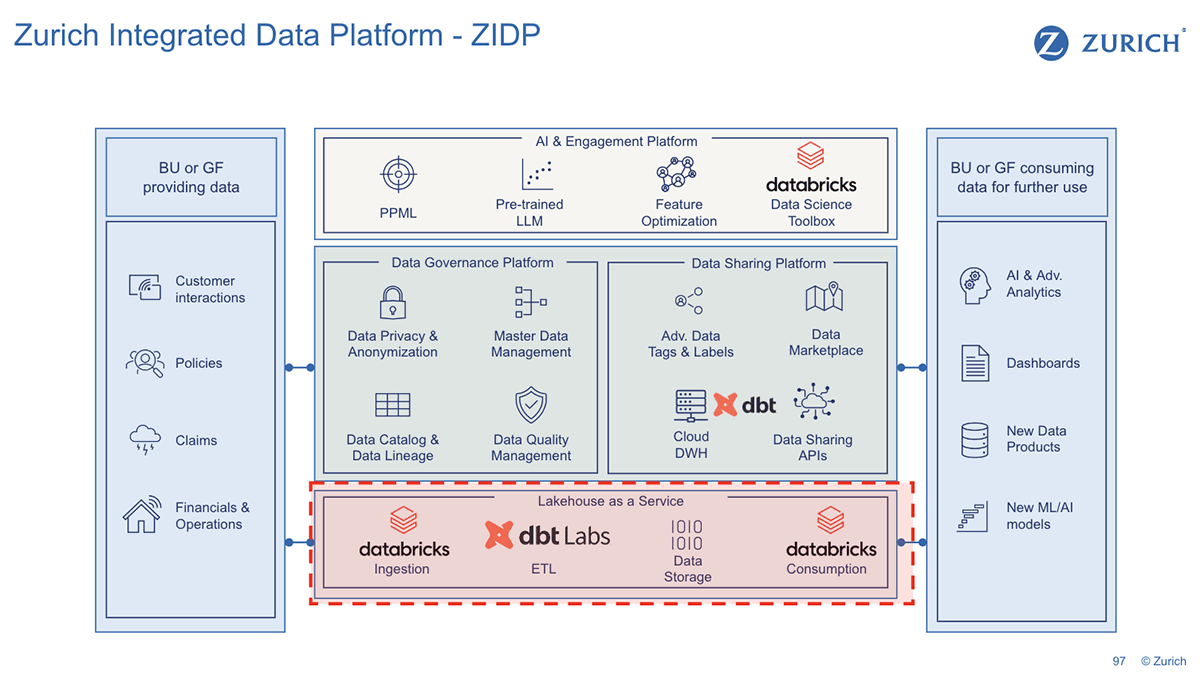

Zurich Insurance is changing the way the insurance industry leverages data and AI. Shifting its focus from traditional internal use cases to the needs of its customers and distribution partners, Zurich has built a business analytics platform that provides insights and recommendations on underwriting, claims and risk engineering to strengthen customers’ business operations and improve servicing for key stakeholder groups.

The Databricks Lakehouse Platform and dbt Cloud are the foundation of Zurich’s Integrated Data Platform for advanced analytics and AI, data governance and data sharing. Databricks and dbt Labs form the ETL layer, Lakehouse as a Service, where data lands from different geographies, organizations, and departments into a multi-cloud lakehouse implementation. The data is then transformed from its raw format to Silver (analytics-ready) and Gold (business-ready) with dbt Cloud. “Zurich’s data users are now able to deliver data science and AI use cases including pre-trained LLM models, scoring and recommendations for its global teams,” said Jose Luis Sanchez Ros, Head of Data Solution Architecture, Zurich Insurance Company Ltd. “Unity Catalog simplifies access management and provides collaborative data exploration with a company-wide view of data that is shared across the organization without any replication.”

Hear from Zurich Insurance at Data + AI Summit: Modernizing the Data Stack: Lessons Learned From the Evolution at Zurich Insurance

Get started with Databricks and dbt Labs

Wherever your data teams want to work, dbt Cloud on the Databricks Lakehouse Platform is a great place to start. Together, dbt Labs and Databricks help your data teams collaborate effectively, run simpler and cheaper data pipelines, and unify data governance.

Talk to your Databricks or dbt Labs rep about best-in-class analytics pipelines with Databricks and dbt, or get started today with Databricks and dbt Cloud. Sign up for The Case for Moving to the Lakehouse virtual event for a deep dive with the Databricks and dbt Labs co-founders, and see it all in action with a dbt Cloud on Databricks product demo.