Introduction

Attention models, also known as attention mechanisms, are input processing techniques used in neural networks. They allow the network to focus on different parts of a complex input one at a time until the entire data set is categorized. The goal is to break down complex tasks into smaller areas of attention that are processed sequentially. This approach is similar to how the human mind solves new problems by breaking them down into simpler tasks and solving them step by step. Attention models can better adapt to specific tasks, optimize their performance, and improve their ability to attend to relevant information.

The attention mechanism in NLP is one of the most valuable developments in deep learning in the last decade. The Transformer architecture and natural language processing (NLP) models such as Google’s BERT have led to a recent surge of progress.

Learning Objectives

- Understand the need for attention mechanisms in deep learning, how they work, and how they can improve model performance.

- Get to know the types of attention mechanisms and examples of their use.

- Explore their applications and the pros and cons of using the attention mechanism.

- Get hands-on experience by following an example of attention implementation.

This article was published as a part of the Data Science Blogathon.

When to Use the Attention Framework?

The attention framework was initially used in encoder-decoder-based neural machine translation systems and computer vision to enhance their performance. Traditional machine translation systems relied on large datasets and complex functions to handle translations, whereas attention mechanisms simplified the process. Instead of translating word by word or compressing the whole input into one fixed-length vector, an attention mechanism lets the decoder weight the encoder’s representations of the input at each output step, resulting in more accurate translations. The attention framework is particularly useful when dealing with the limitations of the encoder-decoder translation model. It enables precise alignment and translation of input words and sentences.

Unlike encoding the entire input sequence into a single fixed-length context vector, the attention mechanism generates a context vector for each output step, which allows each output word to draw on the most relevant parts of the input. It’s important to note that while attention mechanisms improve the accuracy of translations, they may not always achieve linguistic perfection. However, they effectively capture the intent and general sentiment of the original input. In summary, attention frameworks are a valuable tool for overcoming the limitations of traditional machine translation models and achieving more accurate and context-aware translations.

How Do Attention Models Operate?

In broad terms, attention models employ a function that maps a query and a set of key-value pairs to an output. These elements, including the query, keys, values, and final output, are all represented as vectors. The output is calculated by taking a weighted sum of the values, with the weights determined by a compatibility function that evaluates the similarity between the query and the corresponding key.
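For the common scaled dot-product case, the description above can be written compactly. The symbols here are assumptions of this sketch rather than notation from the article: the rows of Q, K, and V hold the queries, keys, and values, and d_k is the dimension of each key vector.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

The dot product plays the role of the compatibility function, and the softmax turns the scores into weights that sum to one for each query.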

In practical terms, attention models enable neural networks to approximate the visual attention mechanism employed by humans. Similar to how humans process a new scene, the model focuses intensely on a specific point in an image, providing a “high-resolution” understanding, while perceiving the surrounding areas with less detail, akin to “low-resolution.” As the network gains a better understanding of the scene, it adjusts the focal point accordingly.

Implementing the General Attention Mechanism with NumPy and SciPy

In this section, we’ll examine the implementation of the general attention mechanism using the Python libraries NumPy and SciPy.

To begin, we define the word embeddings for a sequence of four words. For the sake of simplicity, we’ll manually define the word embeddings, although in practice, they would be generated by an encoder.

    import numpy as np

    # encoder representations of four different words
    word_1 = np.array([1, 0, 0])
    word_2 = np.array([0, 1, 0])
    word_3 = np.array([1, 1, 0])
    word_4 = np.array([0, 0, 1])

Next, we generate the weight matrices that will be multiplied with the word embeddings to obtain the queries, keys, and values. For this example, we randomly generate these weight matrices, but in real scenarios, they would be learned during training.

    # fix the seed so the random weight matrices are reproducible
    np.random.seed(42)
    W_Q = np.random.randint(3, size=(3, 3))
    W_K = np.random.randint(3, size=(3, 3))
    W_V = np.random.randint(3, size=(3, 3))

We then calculate the query, key, and value vectors for each word by performing matrix multiplications between the word embeddings and the corresponding weight matrices.

    query_1 = np.dot(word_1, W_Q)
    key_1 = np.dot(word_1, W_K)
    value_1 = np.dot(word_1, W_V)

    query_2 = np.dot(word_2, W_Q)
    key_2 = np.dot(word_2, W_K)
    value_2 = np.dot(word_2, W_V)

    query_3 = np.dot(word_3, W_Q)
    key_3 = np.dot(word_3, W_K)
    value_3 = np.dot(word_3, W_V)

    query_4 = np.dot(word_4, W_Q)
    key_4 = np.dot(word_4, W_K)
    value_4 = np.dot(word_4, W_V)

Moving on, we score the query vector of the first word against all the key vectors using a dot product operation.

    scores = np.array([
        np.dot(query_1, key_1),
        np.dot(query_1, key_2),
        np.dot(query_1, key_3),
        np.dot(query_1, key_4),
    ])

To generate the weights, we apply the softmax operation to the scores.

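    # NumPy has no softmax function, so we use the one from SciPy
    from scipy.special import softmax

    # scale the scores by the square root of the key dimension, then normalize
    weights = softmax(scores / np.sqrt(key_1.shape[0]))

Finally, we compute the attention output by taking the weighted sum of all the value vectors.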

    attention = (weights[0] * value_1) + (weights[1] * value_2) + (weights[2] * value_3) + (weights[3] * value_4)
    print(attention)

For a faster computation, these calculations can be carried out in matrix form to obtain the attention output for all four words simultaneously. Here’s an example:

    import numpy as np
    from scipy.special import softmax

    # encoder representations of four different words
    word_1 = np.array([1, 0, 0])
    word_2 = np.array([0, 1, 0])
    word_3 = np.array([1, 1, 0])
    word_4 = np.array([0, 0, 1])

    # stack the word embeddings into a single (4, 3) matrix
    words = np.array([word_1, word_2, word_3, word_4])

    # generate the weight matrices
    np.random.seed(42)
    W_Q = np.random.randint(3, size=(3, 3))
    W_K = np.random.randint(3, size=(3, 3))
    W_V = np.random.randint(3, size=(3, 3))

    # generate the queries, keys, and values
    Q = np.dot(words, W_Q)
    K = np.dot(words, W_K)
    V = np.dot(words, W_V)

    # score every query against every key
    scores = np.dot(Q, K.T)

    # compute the weights with a row-wise softmax over the scaled scores
    weights = softmax(scores / np.sqrt(K.shape[1]), axis=1)

    # compute the attention as the weighted sum of the value vectors
    attention = np.dot(weights, V)
    print(attention)

Types of Attention Models

- Global and Local Attention (local-m, local-p)

- Hard and Soft Attention

- Self-Attention

Global Attention Model

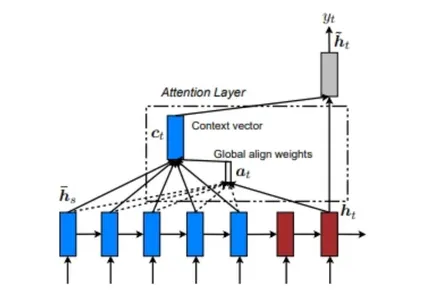

The global attention model considers input from every source state (encoder) and the decoder state prior to the current state to compute the output. It takes into account the relationship between the source and target sequences. The diagram above illustrates the global attention model.

In the global attention model, the alignment weights or attention weights (a<t>) are calculated using every encoder step and the decoder’s previous step (h<t>). The context vector (c<t>) is then calculated by taking the weighted sum of the encoder outputs using the alignment weights. This context vector is fed to the RNN cell to determine the decoder output.
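The computation described above can be sketched for a single decoder step as follows. This is a minimal illustration, not the article's own code: it assumes a plain dot-product score, and the function name `global_attention` and the toy values are hypothetical.

```python
import numpy as np
from scipy.special import softmax


def global_attention(decoder_state, encoder_states):
    """Return (alignment_weights, context_vector) for one decoder step.

    decoder_state:  shape (d,), the decoder hidden state h<t>
    encoder_states: shape (S, d), one hidden state per source position
    """
    # Dot-product score between the decoder state and every encoder state
    # (an assumed score function; other choices are possible).
    scores = encoder_states @ decoder_state
    # Alignment weights a<t>: a probability distribution over all source positions.
    weights = softmax(scores)
    # Context vector c<t>: weighted sum of the encoder states.
    context = weights @ encoder_states
    return weights, context


# Toy values, chosen only for illustration.
encoder_states = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
decoder_state = np.array([1.0, 0.0])
weights, context = global_attention(decoder_state, encoder_states)
print(weights, context)
```

Because every source position receives a weight, the cost of each decoder step grows with the length of the source sequence.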

Local Attention Model

The local attention model differs from the global attention model in that it only considers a subset of positions from the source (encoder) when calculating the alignment weights (a<t>). Below is a diagram illustrating the local attention model.

The local attention model can be understood from the diagram provided. It involves finding a single aligned position (p<t>) and then using a window of words from the source (encoder) layer, together with (h<t>), to calculate the alignment weights and the context vector.

There are two types of local attention: monotonic alignment and predictive alignment. In monotonic alignment, the position (p<t>) is simply set to “t”, whereas in predictive alignment, the position (p<t>) is predicted by a predictive model instead of being assumed to be “t”.
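A minimal sketch of the windowed computation, under stated assumptions: a dot-product score, a hypothetical window half-width `D`, and monotonic alignment (p<t> = t). The function name `local_attention` is illustrative only.

```python
import numpy as np
from scipy.special import softmax


def local_attention(decoder_state, encoder_states, p_t, D=1):
    """Attend only to source positions in the window [p_t - D, p_t + D]."""
    num_positions = encoder_states.shape[0]
    # Clip the window so it stays inside the source sequence.
    lo = max(0, p_t - D)
    hi = min(num_positions, p_t + D + 1)
    window = encoder_states[lo:hi]
    # Alignment weights are computed over the window only.
    scores = window @ decoder_state
    weights = softmax(scores)
    # Context vector: weighted sum of the encoder states inside the window.
    context = weights @ window
    return (lo, hi), weights, context


rng = np.random.default_rng(0)
encoder_states = rng.normal(size=(6, 4))   # 6 source positions, 4-dim states
decoder_state = rng.normal(size=4)

t = 3  # monotonic alignment: the aligned position is the current step
span, weights, context = local_attention(decoder_state, encoder_states, p_t=t, D=1)
print(span, weights, context)
```

For predictive alignment, `p_t` would instead come from a small learned predictor; that part is left out of this sketch.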

Hard and Soft Attention

Soft attention and the global attention model are similar in functionality. Hard attention and the local attention model, however, differ in important ways. The primary distinction is differentiability: the local attention model is differentiable almost everywhere, whereas hard attention is not differentiable because it makes a discrete selection. As a result, the local attention model supports gradient-based optimization throughout the model, whereas hard attention makes optimization harder because of its non-differentiable operations.
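The contrast between soft and hard attention can be sketched in a few lines. The values and weights below are made up for illustration, and sampling one position is only one way hard attention is commonly described.

```python
import numpy as np

rng = np.random.default_rng(0)

# One row per input position (toy values) and an attention distribution over them.
values = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
weights = np.array([0.2, 0.5, 0.3])

# Soft attention: a weighted average of all values, smooth in the weights.
soft_context = weights @ values

# Hard attention: sample a single position and use only its value.
# The sampling step is discrete, so gradients cannot flow through it directly.
index = rng.choice(len(values), p=weights)
hard_context = values[index]

print(soft_context, index, hard_context)
```

Since the hard selection is a discrete sample, training it typically relies on estimators other than plain backpropagation.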

Self-Attention Model

The self-attention model establishes relationships between different positions in the same input sequence. In principle, self-attention can use any of the previously mentioned score functions, but the target sequence is replaced with the same input sequence.

Transformer Network

The transformer network is built entirely on self-attention mechanisms, without using a recurrent network architecture. The transformer uses multi-head self-attention models.
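As a rough sketch of what multi-head self-attention computes, the NumPy code below splits the projected queries, keys, and values into several heads, applies scaled dot-product attention per head, and recombines the results. The function name, the random weights, and the shapes are assumptions for illustration; a real transformer layer also includes learned parameters, masking, residual connections, and normalization, none of which are shown.

```python
import numpy as np
from scipy.special import softmax


def multi_head_self_attention(X, W_Q, W_K, W_V, W_O, num_heads):
    """X: (n, d_model); W_Q, W_K, W_V, W_O: (d_model, d_model)."""
    n, d_model = X.shape
    d_head = d_model // num_heads

    def split_heads(M):
        # (n, d_model) -> (num_heads, n, d_head)
        return M.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    # Queries, keys, and values all come from the same input sequence X.
    Q, K, V = split_heads(X @ W_Q), split_heads(X @ W_K), split_heads(X @ W_V)
    # Scaled dot-product scores per head: (num_heads, n, n)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)
    heads = weights @ V  # (num_heads, n, d_head)
    # Concatenate the heads back to (n, d_model) and apply the output projection.
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)
    return concat @ W_O, weights


rng = np.random.default_rng(0)
n, d_model, num_heads = 4, 8, 2
X = rng.normal(size=(n, d_model))
W_Q, W_K, W_V, W_O = (rng.normal(size=(d_model, d_model)) for _ in range(4))
output, weights = multi_head_self_attention(X, W_Q, W_K, W_V, W_O, num_heads)
print(output.shape, weights.shape)
```

Each head produces its own set of attention weights, so different heads can attend to different positions of the same sequence.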

Advantages and Disadvantages of Attention Mechanisms

Attention mechanisms are a powerful tool for improving the performance of deep learning models and have several key advantages. Some of the main advantages of the attention mechanism are:

- Enhanced Accuracy: Attention mechanisms improve the accuracy of predictions by enabling the model to focus on the most pertinent information.

- Increased Efficiency: By concentrating on the most important data, attention mechanisms can improve the efficiency of the model. This can reduce the computational resources required and improve the scalability of the model.

- Improved Interpretability: The attention weights learned by the model provide valuable insights into the most critical parts of the data. This helps improve the interpretability of the model and aids in understanding its decision-making process.
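As a small illustration of the interpretability point, attention weights can be inspected directly. The tokens and the weight matrix below are hypothetical values, not the output of a trained model.

```python
import numpy as np

tokens = ["the", "cat", "sat", "down"]  # hypothetical input tokens

# Hypothetical attention weights: row i shows how much token i attends to each token.
weights = np.array([
    [0.70, 0.10, 0.10, 0.10],
    [0.05, 0.60, 0.30, 0.05],
    [0.05, 0.50, 0.40, 0.05],
    [0.10, 0.10, 0.30, 0.50],
])

# For each query token, report the input token with the largest weight.
most_attended = [tokens[j] for j in weights.argmax(axis=1)]
for token, target in zip(tokens, most_attended):
    print(f"{token!r} attends most to {target!r}")
```

In practice such weights are often plotted as a heatmap, with the caveat that high attention weight is a hint about model behavior rather than a full explanation.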

However, the attention mechanism also has drawbacks that need to be considered. The major drawbacks are:

- Training Difficulty: Training attention mechanisms can be challenging, particularly for large and complex tasks. Learning the attention weights from data often requires a substantial amount of data and computational resources.

- Overfitting: Attention mechanisms can be susceptible to overfitting. While the model may perform well on the training data, it may struggle to generalize effectively to new data. Regularization techniques can mitigate this problem, but it remains challenging for large and complex tasks.

- Exposure Bias: Sequence models that use attention can suffer from exposure bias. This occurs when the model is trained to generate each output step from the ground-truth previous steps but must rely on its own earlier predictions when generating a sequence at test time. This discrepancy can result in poor performance on test data, as early mistakes can compound across the output sequence.

It is important to acknowledge both the advantages and disadvantages of attention mechanisms in order to make informed decisions about their use in deep learning models.

Tips for Using Attention Frameworks

When implementing an attention framework, consider the following tips to enhance its effectiveness:

- Understand Different Models: Familiarize yourself with the various attention framework models available. Each model has unique features and advantages, so evaluating them will help you choose the most suitable framework for achieving accurate results.

- Provide Consistent Training: Consistent training of the neural network is crucial. Use techniques such as back-propagation and reinforcement learning to improve the effectiveness and accuracy of the attention framework. This helps identify potential errors in the model and refine its performance.

- Apply Attention Mechanisms to Translation Projects: Attention mechanisms are particularly well-suited for language translation. By incorporating them into translation tasks, you can improve the accuracy of the translations. The attention mechanism assigns appropriate weights to different words, capturing their relevance and improving the overall translation quality.

Applications of Attention Mechanisms

Some of the main uses of the attention mechanism are:

- Natural language processing (NLP) tasks, including machine translation, text summarization, and question answering, employ attention mechanisms. These mechanisms play a crucial role in helping models comprehend the meaning of words within a given text and emphasize the most pertinent information.

- Computer vision tasks such as image classification and object recognition also benefit from attention mechanisms. By using attention, models can identify portions of an image and focus their analysis on specific objects.

- Speech recognition tasks involve transcribing recorded sounds and recognizing voice commands. Attention mechanisms prove useful in these tasks by enabling models to focus on segments of the audio signal and accurately recognize spoken words.

- Attention mechanisms are also useful in music production tasks, such as melody generation and chord progressions. By using attention, models can emphasize essential musical elements and generate coherent and expressive compositions.

Conclusion

Attention mechanisms have gained widespread usage across various domains, including computer vision. However, the majority of research and development in attention mechanisms has centered around Neural Machine Translation (NMT). Conventional automated translation systems rely heavily on extensive labeled datasets with complex features that map the statistical properties of each word.

In contrast, attention mechanisms offer a simpler approach for NMT. In this approach, an encoder turns the sentence into vector representations that the decoder uses to generate a translation. Rather than translating word by word, the attention mechanism lets the decoder focus on the parts of the sentence most relevant to each output word while still capturing its high-level meaning. By adopting this learning-driven approach, NMT systems not only achieve significant accuracy improvements but also benefit from easier construction and faster training processes.

Key Takeaways

- The attention mechanism is a neural network layer that integrates into deep learning models.

- It enables the model to focus on specific parts of the input by assigning weights based on their relevance to the task.

- Attention mechanisms have proven to be highly effective in various tasks, including machine translation, image captioning, and speech recognition.

- They are particularly advantageous when dealing with long input sequences, as they allow the model to selectively focus on the most relevant parts.

- Attention mechanisms can improve model interpretability by visually representing the parts of the input the model is attending to.

Frequently Asked Questions

A. The attention mechanism is a layer added to deep learning models that assigns weights to different parts of the data, enabling the model to focus attention on specific parts.

A. Global attention considers all available data, whereas local attention focuses on a specific subset of the overall data.

A. In machine translation, the attention mechanism selectively focuses on relevant parts of the source sentence during the translation process, assigning more weight to important words and phrases.

A. The transformer is a neural network architecture that relies heavily on attention mechanisms. It uses self-attention to capture dependencies between words in input sequences and can model long-range dependencies more effectively than traditional recurrent neural networks.

A. One example is the “Show, Attend and Tell” model used in image captioning tasks. It uses an attention mechanism to dynamically focus on different regions of the image while generating relevant descriptive captions.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.