Within the average large enterprise, sensitive data is being shared to generative AI apps every hour of the working day

Netskope, a leader in Secure Access Service Edge (SASE), today unveiled new research showing that for every 10,000 enterprise users, an enterprise organization experiences approximately 183 incidents per month of sensitive data being posted to ChatGPT. Source code accounts for the largest share of the sensitive data being exposed.

The findings are part of Cloud & Threat Report: AI Apps in the Enterprise, Netskope Threat Labs’ first comprehensive analysis of AI usage in the enterprise and the security risks at play. Based on data from millions of enterprise users globally, Netskope found that generative AI app usage is growing rapidly, up 22.5% over the past two months, which increases the chances of users exposing sensitive data.

Growing AI App Usage

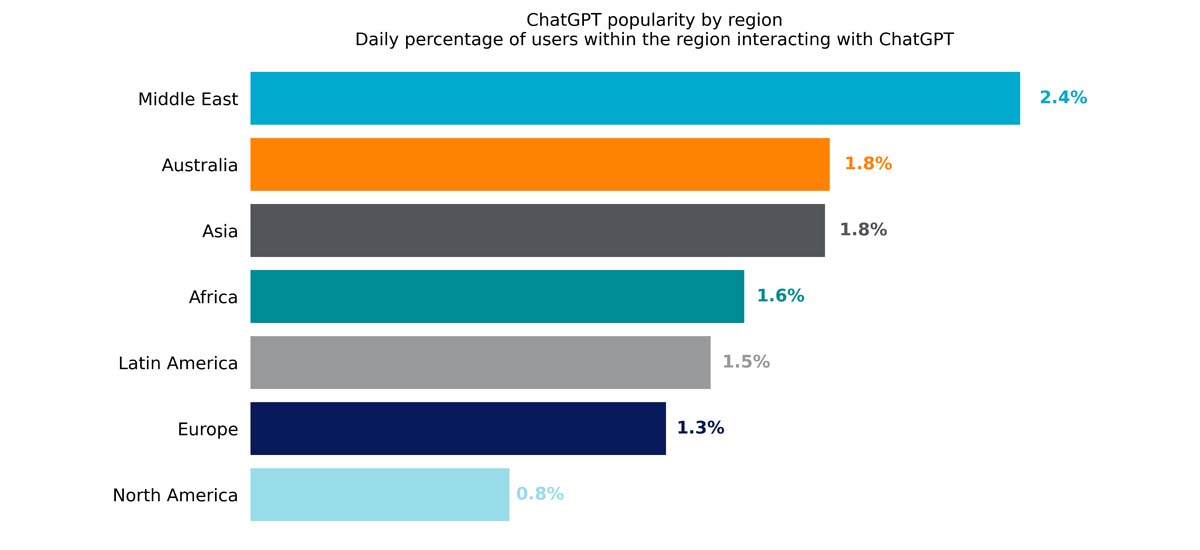

Netskope found that organizations with 10,000 users or more use an average of 5 AI apps daily, with ChatGPT seeing more than 8 times as many daily active users as any other generative AI app. At the current growth rate, the number of users accessing AI apps is expected to double within the next seven months.
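The seven-month projection is consistent with simple compound-growth arithmetic. The sketch below assumes (our assumption; the report's projection method is not described here) that the 22.5% growth over two months compounds at a constant rate, so the doubling time is period × ln 2 / ln(1 + growth). The function name `doubling_time` is illustrative.

```python
import math


def doubling_time(growth: float, period: float) -> float:
    """Time for a quantity to double, in the units of `period`.

    Assumes `growth` (e.g. 0.225 for 22.5%) is the fractional increase over
    one `period` and that it compounds at a constant rate.
    """
    return period * math.log(2) / math.log(1 + growth)


# 22.5% growth over two months, the figure cited in the report.
months = doubling_time(0.225, 2.0)
print(f"{months:.1f} months")  # 6.8 months
```

Plugging in the weekly rates from the next paragraph gives roughly 10 weeks for Google Bard (7.1% per week) and roughly 44 weeks for ChatGPT (1.6% per week), again only if those rates held constant.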

Over the past two months, the fastest-growing AI app was Google Bard, currently adding users at a rate of 7.1% per week, compared to 1.6% for ChatGPT. At current rates, Google Bard is not poised to catch up to ChatGPT for over a year, though the generative AI app space is expected to evolve significantly before then, with many more apps in development.

Users Inputting Sensitive Data into ChatGPT

Netskope found that source code is posted to ChatGPT more than any other type of sensitive data, at a rate of 158 incidents per 10,000 users per month. Other sensitive data being shared in ChatGPT includes regulated data (including financial and healthcare data), personally identifiable information, intellectual property other than source code, and, most concerningly, passwords and keys, often embedded in source code.

“It’s inevitable that some users will upload proprietary source code or text containing sensitive data to AI tools that promise to help with programming or writing,” said Ray Canzanese, Threat Research Director, Netskope Threat Labs. “Therefore, it’s imperative for organizations to place controls around AI to prevent sensitive data leaks. Controls that empower users to reap the benefits of AI, streamlining operations and improving efficiency, while mitigating the risks are the ultimate goal. The most effective controls that we see are a combination of DLP and interactive user coaching.”

Blocking or Granting Access to ChatGPT

Netskope Threat Labs is currently tracking ChatGPT proxies and more than 1,000 malicious URLs and domains from opportunistic attackers seeking to capitalize on the AI hype, including multiple phishing campaigns, malware distribution campaigns, and spam and fraud websites.

Blocking access to AI-related content and AI applications is a short-term way to mitigate risk, but it comes at the expense of the potential benefits AI apps offer for corporate innovation and employee productivity. Netskope’s data shows that in financial services and healthcare, both highly regulated industries, nearly 1 in 5 organizations have implemented a blanket ban on employee use of ChatGPT, while in the technology sector only 1 in 20 organizations have done likewise.

“As security leaders, we cannot simply decide to ban applications without impacting on user experience and productivity,” said James Robinson, Deputy Chief Information Security Officer at Netskope. “Organizations should focus on evolving their workforce awareness and data policies to meet the needs of employees using AI products productively. There is a good path to safe enablement of generative AI with the right tools and the right mindset.”

For organizations to enable the safe adoption of AI apps, they must center their approach on identifying permissible apps and implementing controls that empower users to use those apps to their fullest potential while safeguarding the organization from risk. Such an approach should include domain filtering, URL filtering, and content inspection to protect against attacks. Other steps to safeguard data and use AI tools securely include:

- Block access to apps that do not serve any legitimate business purpose or that pose a disproportionate risk to the organization.

- Employ user coaching to remind users of company policy surrounding the use of AI apps.

- Use modern data loss prevention (DLP) technologies to detect posts containing potentially sensitive information.
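To make the blocking, DLP, and user-coaching steps above more concrete, here is a minimal Python sketch of a policy check applied to a post bound for an AI app. It is not Netskope’s DLP engine: the regex rules, the blocked domain `unsanctioned-ai.example`, and the functions `scan_post` and `decide` are all hypothetical, and production DLP relies on far richer detection than a few regular expressions. The sketch first checks the destination against a blocklist, then scans the text for the kind of passwords and keys the report says are often embedded in source code, and returns a coaching decision rather than silently allowing a risky post.

```python
import re
from dataclasses import dataclass

# Hypothetical blocklist of apps with no legitimate business purpose.
BLOCKED_APP_DOMAINS = {"unsanctioned-ai.example"}

# Illustrative detection rules only; real DLP engines use many more signals.
RULES = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM header of a private key pasted into a prompt.
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # Hard-coded password assignments such as db_password = "hunter2".
    "password_assignment": re.compile(
        r"(?i)(?:password|passwd|pwd)\s*[:=]\s*['\"][^'\"]+['\"]"
    ),
}


@dataclass
class Finding:
    rule: str
    line_no: int


def scan_post(text: str) -> list[Finding]:
    """Return one Finding per (line, rule) pair that matches."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append(Finding(rule, line_no))
    return findings


def decide(app_domain: str, text: str) -> str:
    """Return "block", "coach" or "allow" for a post to an AI app."""
    if app_domain in BLOCKED_APP_DOMAINS:
        return "block"
    if scan_post(text):
        # Interactive coaching: warn the user and let them reconsider the post.
        return "coach"
    return "allow"


if __name__ == "__main__":
    snippet = 'import os\ndb_password = "hunter2"\n'
    print(decide("sanctioned-ai.example", snippet))  # coach
    for f in scan_post(snippet):
        print(f.rule, "on line", f.line_no)  # password_assignment on line 2
```

Returning "coach" instead of "block" for detected secrets mirrors the report’s point that outright bans cost productivity: the user keeps access to the sanctioned app but is prompted before sensitive content leaves the organization.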

In conjunction with the report, Netskope announced new solution offerings from SkopeAI, the Netskope suite of artificial intelligence and machine learning (AI/ML) innovations. SkopeAI uses AI/ML to overcome the limitations of complex legacy tools and to provide protection using AI-speed techniques not found in other SASE products.