I blocked two of our rating pages utilizing robots.txt. We misplaced a place right here or there and all the featured snippets for the pages. I anticipated much more influence, however the world didn’t finish.

Warning

I don’t suggest doing this, and it’s totally potential that your outcomes could also be completely different from ours.

I used to be making an attempt to see the influence on rankings and site visitors that the removing of content material would have. My concept was that if we blocked the pages from being crawled, Google must depend on the hyperlink indicators alone to rank the content material.

Nevertheless, I don’t suppose what I noticed was truly the influence of eradicating the content material. Perhaps it’s, however I can’t say that with 100% certainty, because the influence feels too small. I’ll be working one other check to verify this. My new plan is to delete the content material from the web page and see what occurs.

My working concept is that Google should be utilizing the content material it used to see on the web page to rank it. Google Search Advocate John Mueller has confirmed this habits within the previous.

To date, the check has been working for almost 5 months. At this level, it doesn’t look like Google will cease rating the web page. I believe, after some time, it is going to seemingly cease trusting that the content material that was on the web page continues to be there, however I haven’t seen proof of that occuring.

Maintain studying to see the check setup and influence. The principle takeaway is that unintentionally blocking pages (that Google already ranks) from being crawled utilizing robots.txt most likely isn’t going to have a lot influence in your rankings, and they’ll seemingly nonetheless present within the search outcomes.

I selected the identical pages as used within the “influence of hyperlink” research, aside from the article on web optimization pricing as a result of Joshua Hardwick had simply up to date it. I had seen the influence of eradicating the hyperlinks to those articles and wished to check the influence of eradicating the content material. As I stated within the intro, I’m undecided that’s truly what occurred.

I blocked these two pages on January 30, 2023:

These traces have been added to our robots.txt file:

Disallow: /weblog/top-bing-searches/Disallow: /weblog/top-youtube-searches/

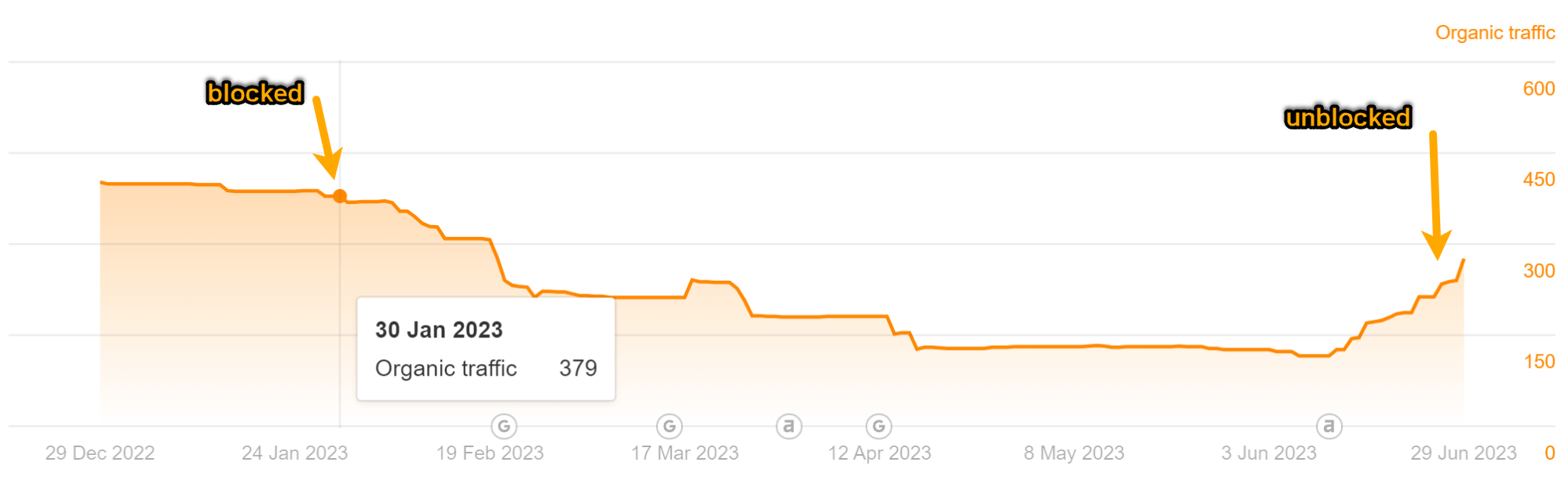

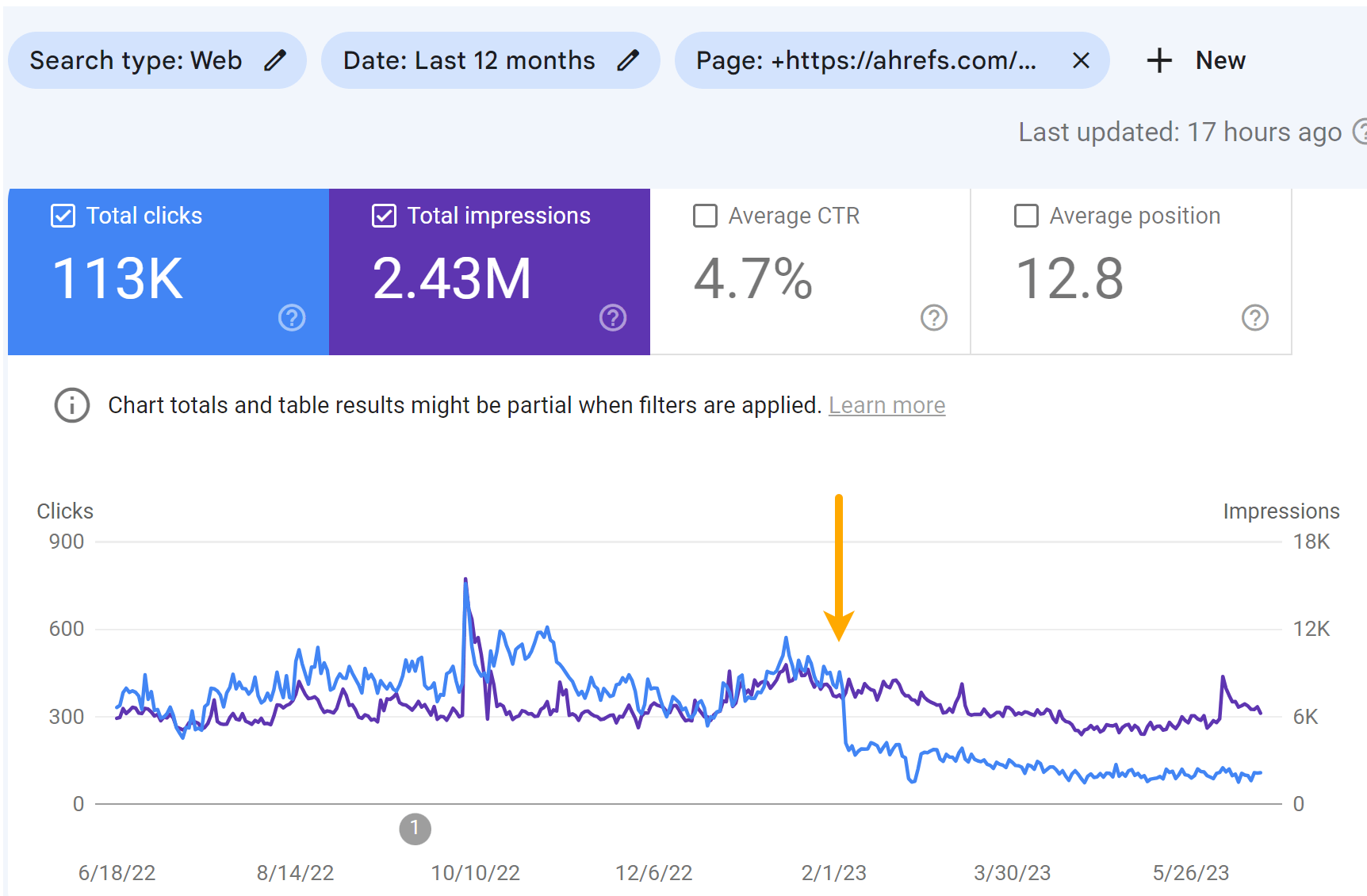

As you possibly can see within the charts beneath, each pages misplaced some site visitors. But it surely didn’t end in a lot change to our site visitors estimate like I used to be anticipating.

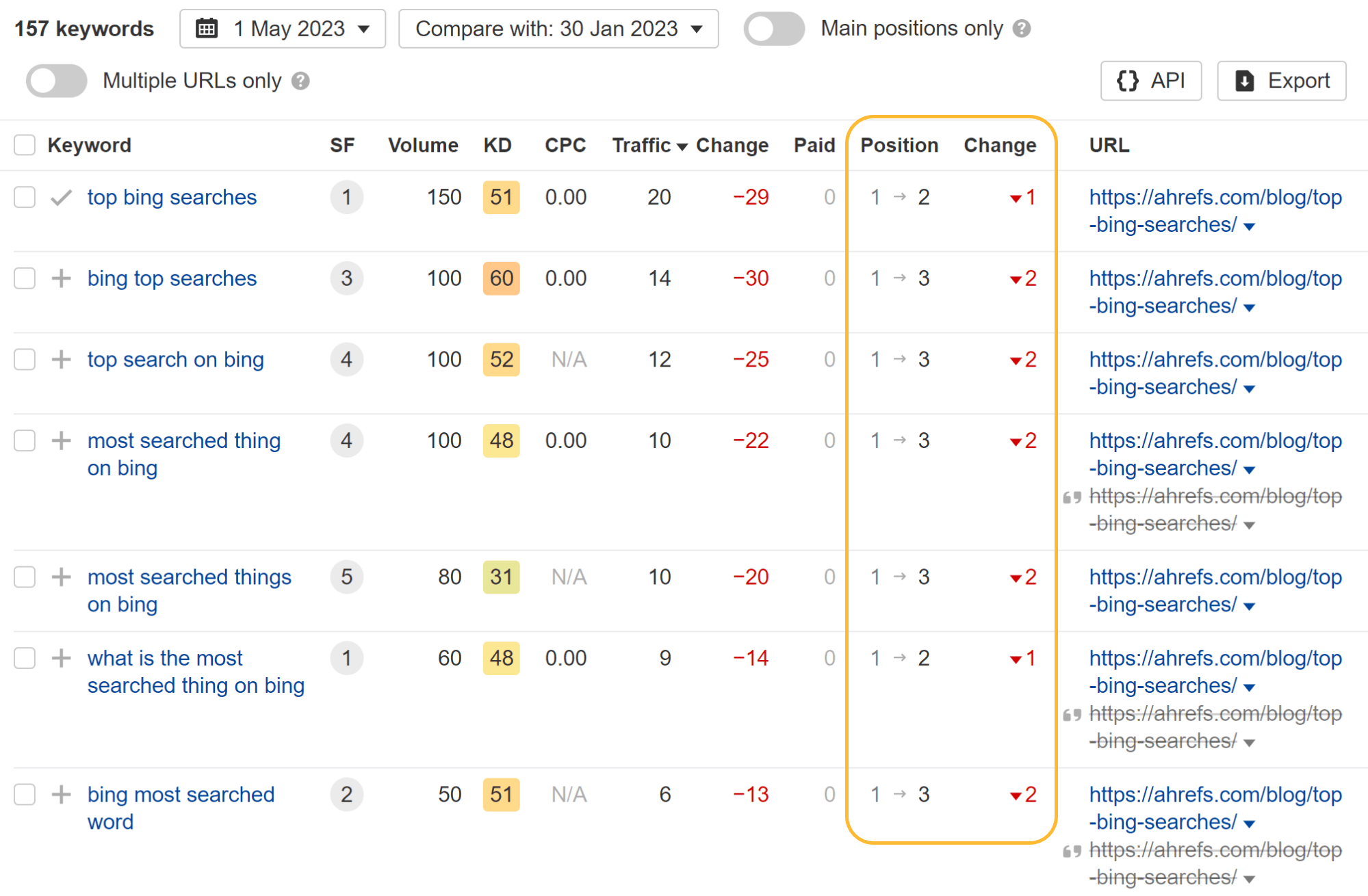

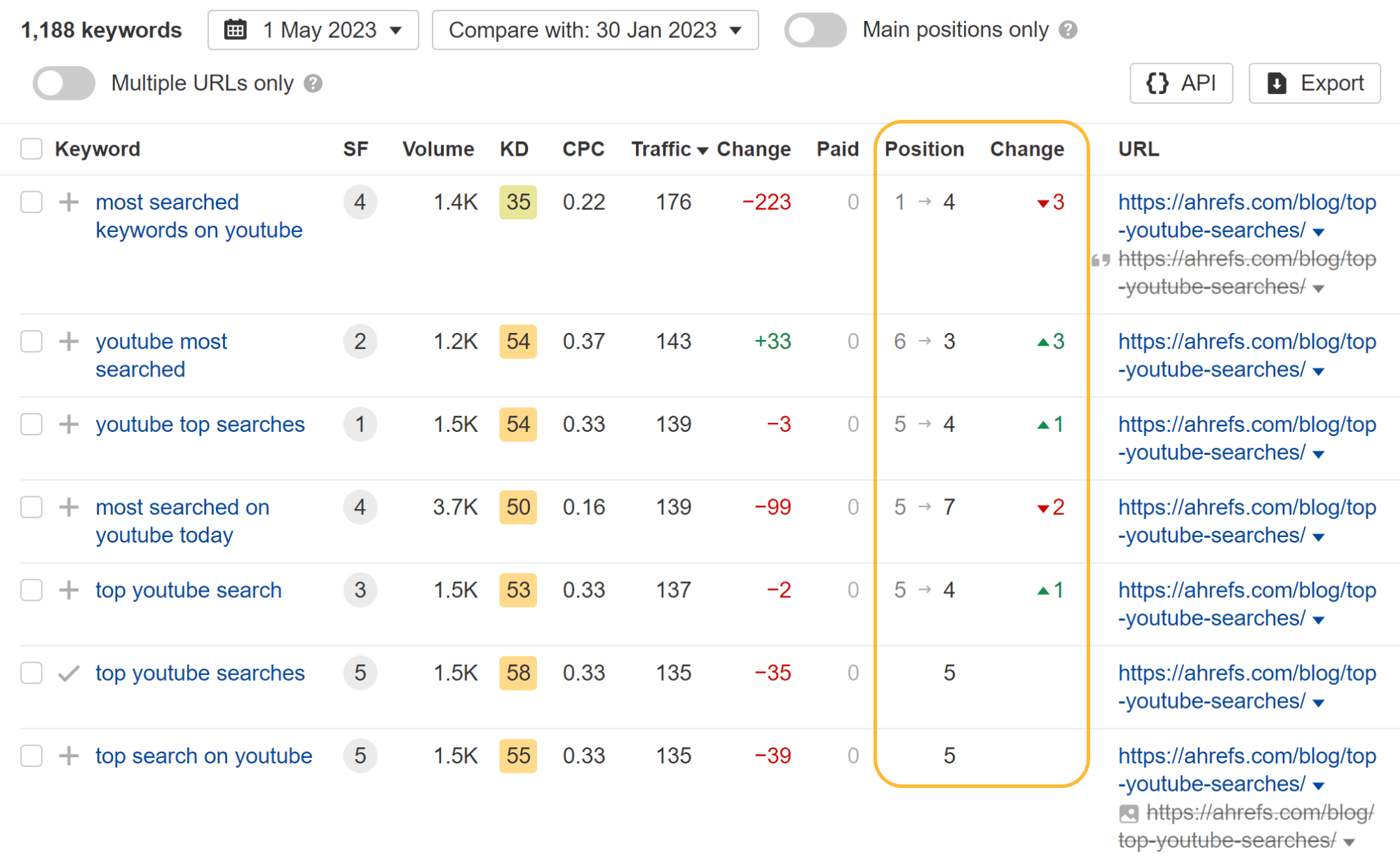

Trying on the particular person key phrases, you possibly can see that some key phrases misplaced a place or two and others truly gained rating positions whereas the web page was blocked from crawling.

Essentially the most fascinating factor I observed is that they misplaced all featured snippets. I assume that having the pages blocked from crawling made them ineligible for featured snippets. Once I later eliminated the block, the article on Bing searches rapidly regained some snippets.

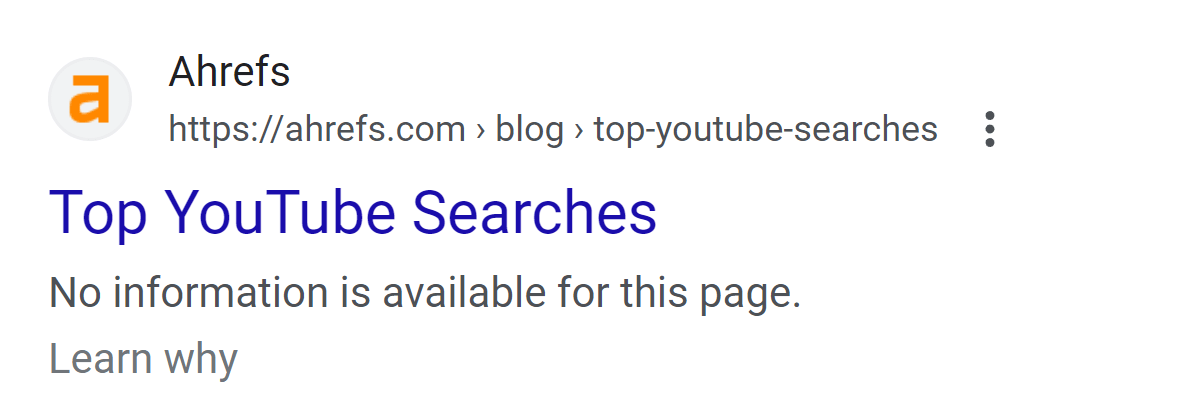

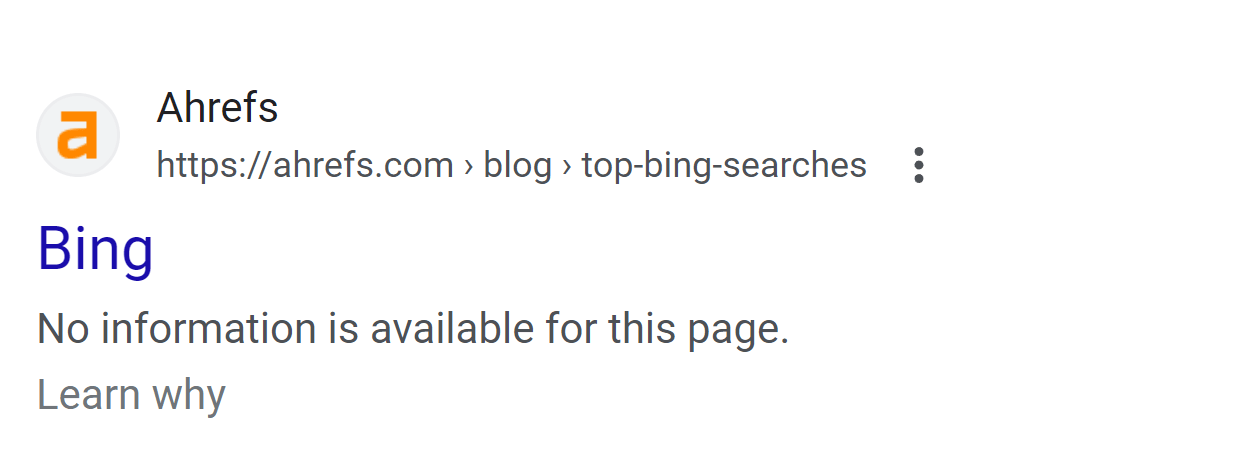

Essentially the most noticeable influence to the pages is on the SERP. The pages misplaced their customized titles and displayed a message saying that no info was out there as a substitute of the meta description.

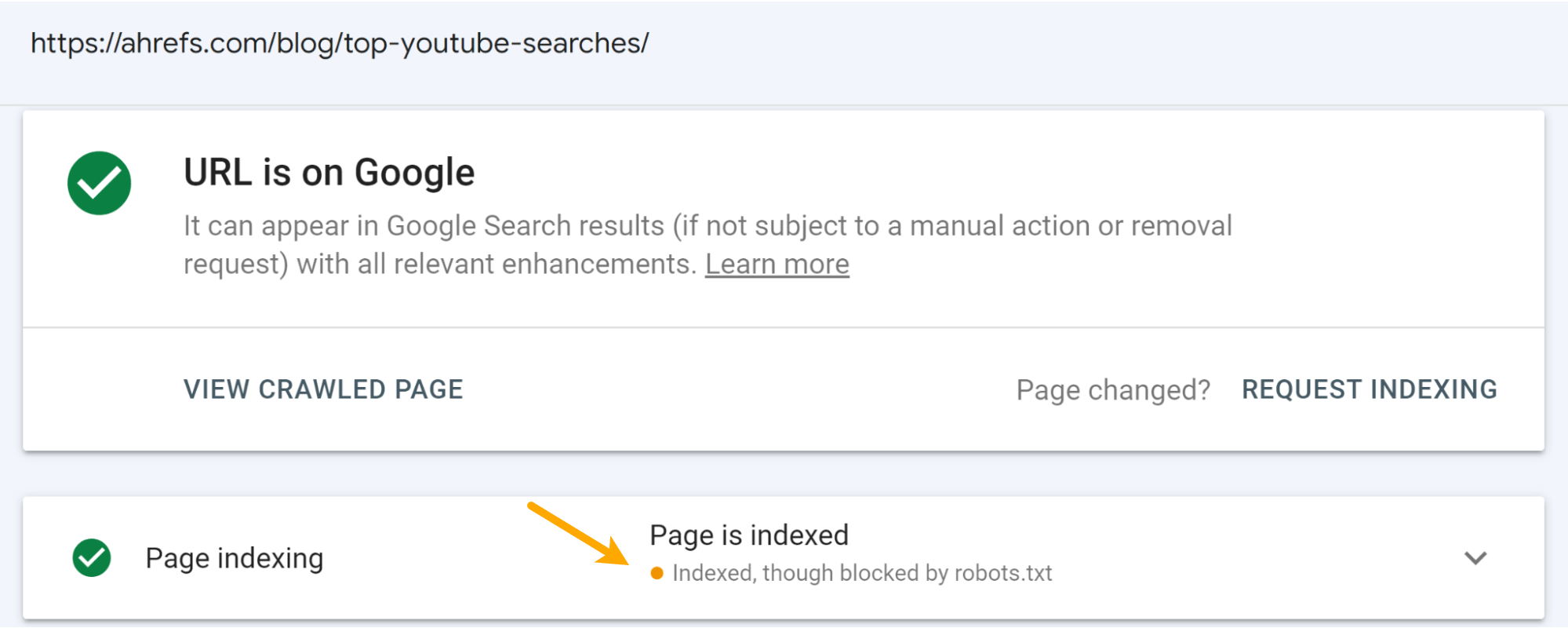

This was anticipated. It occurs when a web page is blocked by robots.txt. Moreover, you’ll see the “Listed, although blocked by robots.txt” standing in Google Search Console for those who examine the URL.

I consider that the message on the SERPs harm the clicks to the pages greater than the rating drops. You may see some drop within the impressions, however a bigger drop within the variety of clicks for the articles.

Visitors for the “Prime YouTube Searches” article:

Visitors for the “Prime Bing Searches” article:

Closing ideas

I don’t suppose any of you’ll be shocked by my commentary on this. Don’t block pages you need listed. It hurts. Not as dangerous as you may suppose it does—nevertheless it nonetheless hurts.