Hollywood may be embroiled in ongoing labor disputes that involve AI, but the technology infiltrated film and TV long, long ago. At SIGGRAPH in LA, algorithmic and generative tools were on display in countless talks and announcements. We may not know where the likes of GPT-4 and Stable Diffusion fit in yet, but the creative side of production is ready to embrace them, provided it can be done in a way that augments rather than replaces artists.

SIGGRAPH isn’t a film and TV production conference, but one about computer graphics and visual effects (for 50 years now!), and the topics have naturally overlapped more and more in recent years.

This year, the elephant in the room was the strike, and few presentations or talks got into it; at afterparties and networking events, however, it was more or less the first thing anyone brought up. Even so, SIGGRAPH is very much a conference about bringing together technical and creative minds, and the vibe I got was “it sucks, but in the meantime we can continue to improve our craft.”

The fears around AI in production are, not to say illusory, but certainly a bit misleading. Generative AI models for images and text have improved greatly, leading to worries that they will replace writers and artists. And certainly studio executives have floated harmful (and unrealistic) hopes of partly replacing writers and actors using AI tools. But AI has been present in film and TV for quite some time, performing important and artist-driven tasks.

I saw this on display in numerous panels, technical paper presentations and interviews. Of course a history of AI in VFX would be interesting, but for the present here are some ways AI in its various forms was being shown at the cutting edge of effects and production work.

Pixar’s artists put ML and simulations to work

One early example came in a pair of Pixar presentations about animation techniques used in their latest film, Elemental. The characters in this movie are more abstract than others, and the task of making a person who is made of fire, water or air is no easy one. Imagine wrangling the fractal complexity of these substances into a body that can act and express itself clearly while still looking “real.”

As animators and effects coordinators explained one after another, procedural generation was core to the process, simulating and parameterizing the flames or waves or vapors that made up dozens of characters. Hand sculpting and animating every little wisp of flame or cloud that wafts off a character was never an option: it would be extremely tedious, labor-intensive and technical rather than creative work.

But as the presentations made clear, although they relied heavily on sims and sophisticated material shaders to create the desired effects, the artistic team and process were deeply intertwined with the engineering side. (They also collaborated with researchers at ETH Zurich for the purpose.)

One example was the overall look of one of the main characters, Ember, who is made of flame. It wasn’t enough to simulate flames or tweak the colors or adjust the many dials to affect the outcome. Ultimately the flames needed to reflect the look the artist wanted, not just the way flames appear in real life. To that end they employed “volumetric neural style transfer” or NST; style transfer is a machine learning technique most will have experienced by, say, having a selfie changed to the style of Edvard Munch or the like.

In this case the team took the raw voxels of the “pyro simulation,” or generated flames, and passed them through a style transfer network trained on an artist’s expression of what they wanted the character’s flames to look like: more stylized, less simulated. The resulting voxels have the natural, unpredictable look of a simulation but also the unmistakable cast of the artist’s choice.

Simplified example of NST in action adding style to Ember’s flames. Image Credits: Pixar

Of course the animators are sensitive to the idea that they just generated the film using AI, which isn’t the case.

“If anyone ever tells you that Pixar used AI to make Elemental, that’s wrong,” said Pixar’s Paul Kanyuk pointedly during the presentation. “We used volumetric NST to shape her silhouette edges.”

(To be clear, NST is a machine learning technique we would identify as falling under the AI umbrella, but the point Kanyuk was making is that it was used as a tool to achieve an artistic outcome; nothing was simply “made with AI.”)

Later, other members of the animation and design teams explained how they used procedural, generative or style transfer tools to do things like recolor a landscape to fit an artist’s palette or mood board, or fill in city blocks with unique buildings mutated from “hero” hand-drawn ones. The clear theme was that AI and AI-adjacent tools were there to serve the purposes of the artists, speeding up tedious manual processes and providing a better match with the desired look.

AI accelerating dialogue

Images from Nimona, which DNEG animated. Image Credits: DNEG

I heard a similar note from Martine Bertrand, senior AI researcher at DNEG, the VFX and post-production outfit that most recently animated the excellent and visually stunning Nimona. He explained that many existing effects and production pipelines are extremely labor-intensive, especially look development and environment design. (DNEG also did a presentation, “Where Proceduralism Meets Performance,” that touches on these topics.)

“People don’t realize that there’s an enormous amount of time wasted in the creation process,” Bertrand told me. Working with a director to find the right look for a shot can take weeks per attempt, during which infrequent or bad communication often leads to those weeks of work being scrapped. It’s incredibly frustrating, he continued, and AI is a great way to accelerate this and other processes that are nowhere near final products, but simply exploratory and general.

Artists using AI to multiply their efforts “permits dialogue between creators and directors,” he said. Alien jungle, sure, but like this? Or like this? A mysterious cave, like this? Or like this? For a creator-led, visually complex story like Nimona, getting fast feedback is especially important. Wasting a week rendering a look that the director rejects a week later is a serious production delay.

In fact, new levels of collaboration and interactivity are being achieved in early creative work like pre-visualization, as one talk by Sokrispy CEO Sam Wickert explained. His company was tasked with doing pre-vis for the outbreak scene at the very start of HBO’s “The Last of Us,” a complex “oner” in a car with numerous extras, camera movements and effects.

While the use of AI was limited in that more grounded scene, it’s easy to see how improved voice synthesis, procedural environment generation and other tools could and did contribute to this increasingly tech-forward process.

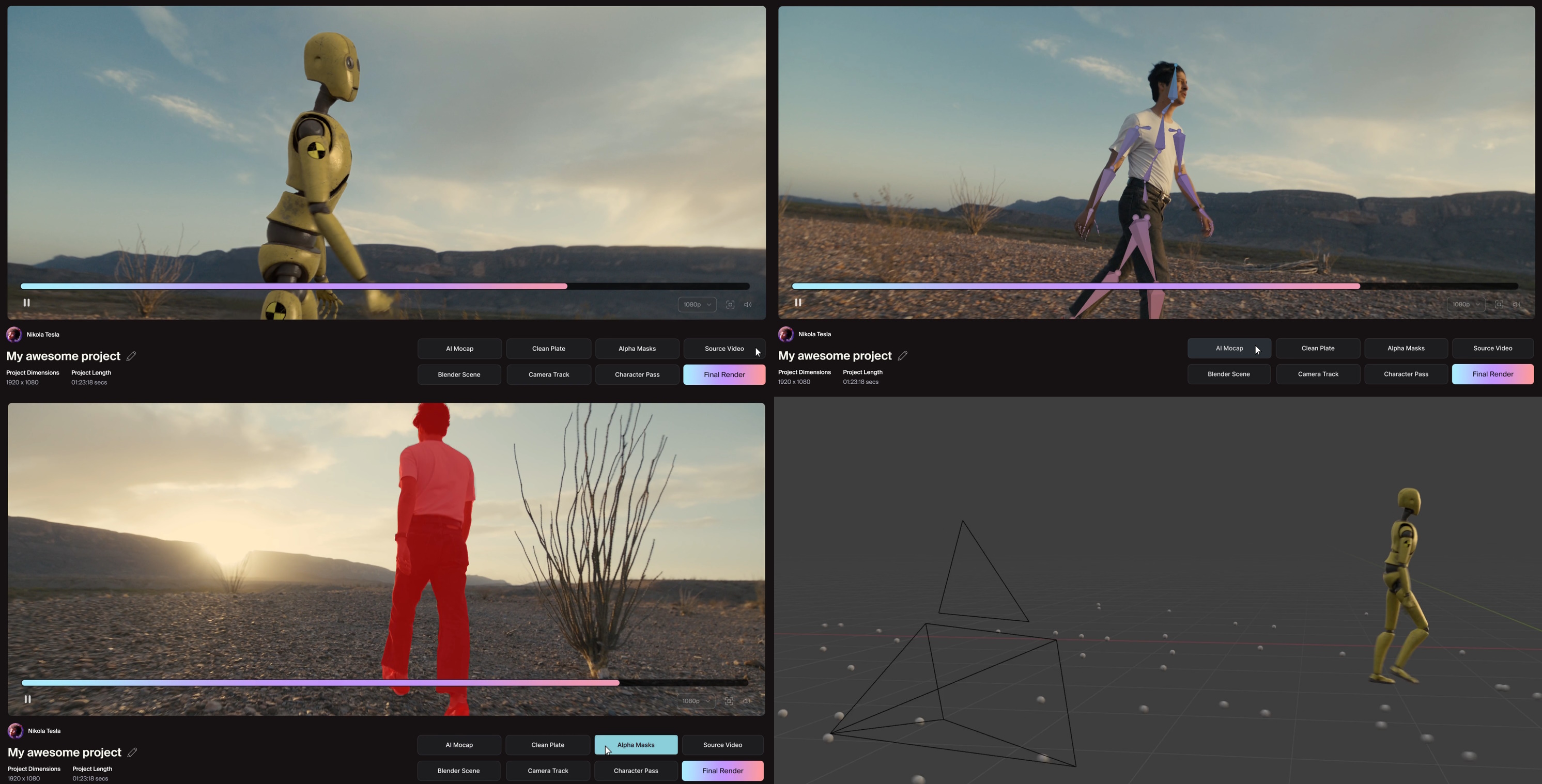

Final shot, mocap data, masks and 3D environment generated by Wonder Studio. Image Credits: Wonder Studio

Wonder Dynamics, which was cited in several keynotes and presentations, offers another example of the use of machine learning processes in production, entirely under the artists’ control. Advanced scene and object recognition models parse normal footage and instantly replace human actors with 3D models, a process that once took weeks or months.

But as they told me a few months ago, the tasks they automate are not the creative ones: it’s grueling rote (sometimes roto) labor that involves almost no creative decisions. “This doesn’t disrupt what they’re doing; it automates 80-90% of the objective VFX work and leaves them with the subjective work,” co-founder Nikola Todorovic said then. I caught up with him and his co-founder, actor Tye Sheridan, at SIGGRAPH, and they were enjoying being the toast of the town: it was clear that the industry was moving in the direction they had started off in years ago. (Incidentally, come see Sheridan on the AI stage at TechCrunch Disrupt in September.)

That said, the warnings of striking writers and actors are by no means being dismissed by the VFX community. They echo them, in fact, and their concerns are similar, if not quite as existential. For an actor, one’s likeness or performance (or for a writer, one’s imagination and voice) is one’s livelihood, and the threat of it being appropriated and automated entirely is a terrifying one.

For artists elsewhere in the production process, the threat of automation is also real, and also more of a people problem than a technology one. Many people I spoke to agreed that bad decisions by uninformed leaders are the real problem.

“AI seems so smart that you may defer your decision-making process to the machine,” said Bertrand. “And when humans defer their responsibilities to machines, that’s where it gets scary.”

If AI can be harnessed to enhance or streamline the creative process, such as by reducing time spent on repetitive tasks or enabling creators with smaller teams or budgets to match their better-resourced peers, it could be transformative. But if the creative process is ceded to AI, a path some executives seem keen to explore, then despite the technology already pervading Hollywood, the strikes will just be getting started.