As the Special Operations Command (SOCOM) commander, you are told that intelligence has discovered that an adversary has unexpected capabilities. Therefore, you need to reprioritize capabilities. You inform the program manager (PM) for your advanced aircraft platform that the lower priority capability for the whiz-bang sensor-fusion software that was on the roadmap 18 months from now will need to become the top priority and be delivered in the next six months. But the next two priorities are still important and are needed as close to the original dates (three months and nine months out) as possible.

You need to know:

- What options for delivering the new capability and the next two priority capabilities (with reduced capability) are available with no change in staffing?

- How many more teams would need to be added to get the sensor-fusion software in the next six months and to stay on schedule for the other two capabilities? And what is the cost?

In this blog post, excerpted and adapted from a recently published white paper, we explore the decisions that PMs make and the information they need to make decisions like these confidently, with the help of data that is available from DevSecOps pipelines.

As in commercial companies, DoD PMs are accountable for the overall cost, schedule, and performance of a program. Nonetheless, the DoD PM operates in a different environment, serving military and political stakeholders, using government funding, and making decisions within a complex set of procurement regulations, congressional approval, and government oversight. They exercise leadership, decision-making, and oversight throughout a program and a system’s lifecycle. They must be the leaders of the program, understand requirements, balance constraints, manage contractors, build support, and use basic management skills. The PM’s job is even more complex in large programs with multiple software-development pipelines, where cost, schedule, performance, and risk for the products of each pipeline must be considered when making decisions, as well as the interrelationships among products developed on different pipelines.

The goal of the SEI research project called Automated Cost Estimation in a Pipeline of Pipelines (ACE/PoPs) is to show PMs how to collect and transform unprocessed DevSecOps development data into useful program-management information that can guide decisions they must make during program execution. The ability to continuously monitor, analyze, and provide actionable data to the PM from tools in multiple interconnected pipelines of pipelines (PoPs) can help keep the overall program on track.

What Data Do Program Managers Need?

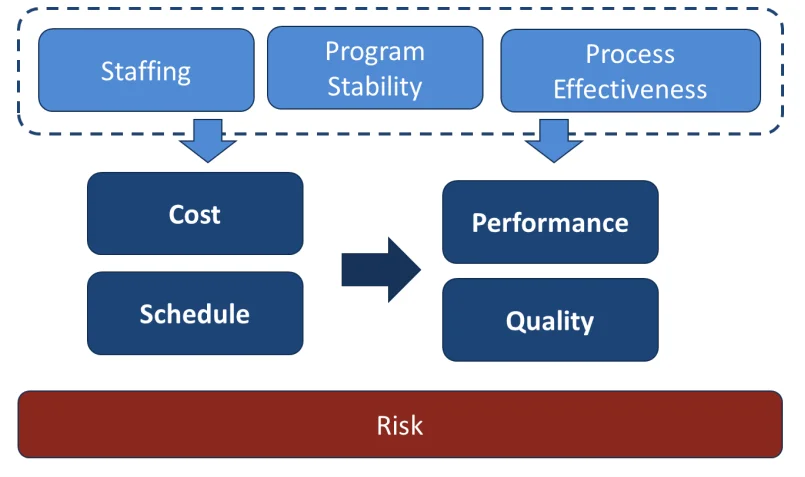

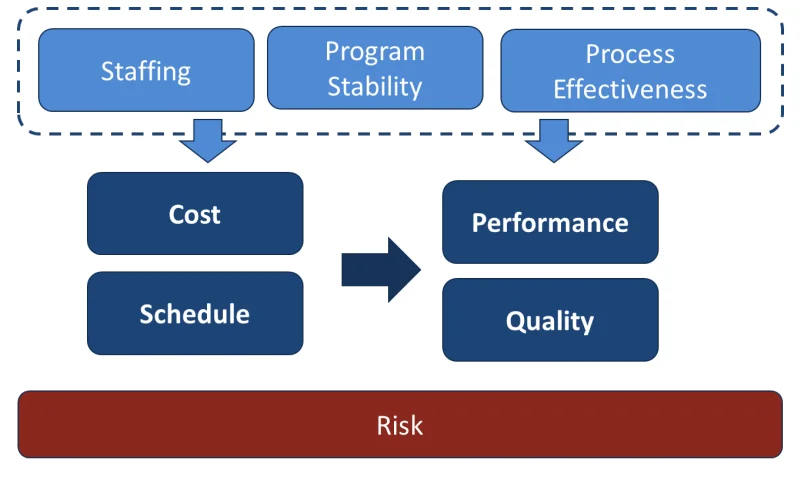

PMs are required to make decisions almost continuously over the course of program execution. There are many different areas where the PM needs objective data to make the best decision possible at the time. These data fall into the main categories of cost, schedule, performance, and risk. However, these categories, and many PM decisions, are also affected by other areas of concern, including staffing, process effectiveness, program stability, and the quality and information provided by program documentation. It is important to recognize how these data are related to each other, as shown in Figure 1.

Figure 1: Notional Program Performance Model

All PMs track cost and schedule, but changes in staffing, program stability, and process effectiveness can drive changes to both cost and schedule. If cost and schedule are held constant, these changes will manifest in the end product’s performance or quality. Risks can be found in every category. Managing risks requires collecting data to quantify both the probability of occurrence and the impact of each risk if it occurs.
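One common way to combine the two quantities named above is to multiply probability by impact to get an expected exposure for each risk. The sketch below assumes that convention; the `Risk` fields and the sample values in the usage note are hypothetical and are not taken from the white paper.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float  # assumed chance of occurrence, 0.0 to 1.0
    impact_cost: float  # assumed cost impact if the risk occurs (hours or dollars)


def exposure(risk: Risk) -> float:
    """Expected exposure: probability of occurrence times impact."""
    return risk.probability * risk.impact_cost


def rank_by_exposure(risks):
    """Return risks ordered from highest to lowest exposure."""
    return sorted(risks, key=exposure, reverse=True)
```

For example, a risk with probability 0.25 and impact 400 ranks above one with probability 0.5 and impact 100, because its exposure is twice as large.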

In the following subsections, we describe these categories of PM concerns and suggest ways in which metrics generated by DevSecOps tools and processes can help provide the PM with actionable data within these categories. For a more detailed treatment of these topics, please read our white paper.

Cost

Cost is typically one of the largest drivers of decisions for a PM. Cost charged by the contractor(s) on a program has many facets, including costs for management, engineering, production, testing, documentation, and so on. This blog post focuses on providing metrics for one aspect of cost: software development.

For software-development projects, labor is usually the single most significant contributor to cost, including costs for software architecture, modeling, design, development, security, integration, testing, documentation, and release. For DoD PMs, the need for accurate cost data is exacerbated by the requirement to plan budgets five years in advance and to update budget numbers annually. It is therefore critical for PMs to have quality metrics so they can better understand overall software-development costs and help estimate future costs.

The DevSecOps pipeline provides data that can help PMs make decisions regarding cost. While the pipeline typically doesn’t directly provide information on dollars spent, it can feed typical earned value management (EVM) systems and can provide EVM-like data even if there is no requirement for EVM. Cost is most evident from work applied to specific work items, which in turn requires information on staffing and the activities performed. For software developed using Agile processes in a DevSecOps environment, measures available through the pipeline can provide data on team size, actual labor hours, and the specific work planned and completed. Although clearly not the same as cost, tracking labor charges (hours worked) and full-time equivalents (FTEs) can provide an indication of cost performance. At the team level, the DevSecOps cadence of planning increments and sprints provides labor hours, and labor hours scale linearly with cost.

A PM can use metrics on work completed vs. planned to make informed decisions about potential cost overruns for a capability or feature. These metrics can also help a PM prioritize work and decide whether to continue work in specific areas or move funding to other capabilities. The work can be measured in estimated and actual cost and, optionally, in estimated and actual size. Comparing the expected cost of work planned with the actual cost of work delivered measures predictability. The DevSecOps pipeline provides several direct measurements, including the actual work items taken through development and production and the times at which they enter the DevSecOps pipeline, are built, and are deployed. These measurements lead us to schedule data.
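As a rough illustration of EVM-like data derived from work items, the sketch below sums planned, earned, and actual labor hours for one capability and computes the standard EVM cost performance index (earned divided by actual). The `WorkItem` fields are hypothetical stand-ins for whatever a program's issue tracker exports, and hours are used in place of dollars on the assumption stated above that labor hours scale with cost.

```python
from dataclasses import dataclass


@dataclass
class WorkItem:
    item_id: str
    capability: str         # hypothetical link from work item to capability
    estimated_hours: float  # planned effort
    actual_hours: float     # effort charged so far
    done: bool              # True once the item meets the definition of done


def cost_summary(items, capability):
    """Summarize planned, earned, and actual hours for one capability."""
    selected = [w for w in items if w.capability == capability]
    planned = sum(w.estimated_hours for w in selected)
    # Earned hours: the estimate is credited only when the item is done.
    earned = sum(w.estimated_hours for w in selected if w.done)
    actual = sum(w.actual_hours for w in selected)
    return {
        "planned_hours": planned,
        "earned_hours": earned,
        "actual_hours": actual,
        "percent_complete": earned / planned if planned else None,
        # Cost performance index: below 1.0 suggests a cost overrun.
        "cpi": earned / actual if actual else None,
    }
```

A cost performance index well below 1.0 for a capability is the kind of signal that could prompt a PM to revisit priorities or funding for that capability.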

Schedule

The PM needs accurate information to make decisions that depend on delivery timelines. Schedule changes can affect the delivery of capability in the field. Schedule is also important when considering funding availability, need for test assets, commitments to interfacing programs, and many other aspects of the program. On programs with multiple software pipelines, it is important to understand not only the technical dependencies, but also the lead and lag times between inter-pipeline capabilities and rework. Schedule metrics available from the DevSecOps pipeline can help the PM make decisions based on how software-development and testing activities on multiple pipelines are progressing.

The DevSecOps pipeline can provide progress against plan at several different levels. The most important level for the PM is the schedule related to delivering capability to the users. The pipeline typically tracks stories and features, but with links to a work-breakdown structure (WBS), features can be aggregated to show progress vs. the plan for capability delivery as well. This traceability does not occur naturally, however, and neither will the metrics unless they are adequately planned and instantiated. Program work must be prioritized, the effort estimated, and a nominal schedule derived from the available staff and teams. The granularity of tracking should be small enough to detect schedule slips but large enough to avoid excessive plan churn as work is reprioritized.
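The roll-up from features to capabilities can be sketched as follows, assuming each feature carries an effort estimate and a done flag, and that a separate mapping (standing in for the WBS links) ties feature identifiers to capabilities. The tuple layout, the mapping, and the `UNMAPPED` bucket are illustrative assumptions.

```python
from collections import defaultdict


def capability_progress(features, feature_to_capability):
    """Aggregate feature-level completion into capability-level progress.

    features: iterable of (feature_id, estimated_effort, done)
    feature_to_capability: dict standing in for WBS traceability links
    Returns the fraction of estimated effort completed per capability.
    """
    planned = defaultdict(float)
    completed = defaultdict(float)
    for feature_id, effort, done in features:
        # Features with no WBS link are surfaced rather than silently dropped.
        capability = feature_to_capability.get(feature_id, "UNMAPPED")
        planned[capability] += effort
        if done:
            completed[capability] += effort
    return {c: completed[c] / planned[c] for c in planned if planned[c] > 0}
```

Reporting an explicit `UNMAPPED` bucket is a design choice: it makes gaps in traceability visible, which matters because the links do not arise on their own.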

The schedule will be more accurate on a short-term scale, and the plans must be updated whenever priorities change. In Agile development, one of the main metrics to look for with respect to schedule is predictability. Is the developer working to a repeatable cadence and delivering what was promised when expected? The PM needs credible ranges for program schedule, cost, and performance. Measures that inform predictability, such as effort bias and variation of estimates versus actuals, throughput, and lead times, can be obtained from the pipeline. Although the seventh principle of the Agile Manifesto states that working software is the primary measure of progress, it is important to distinguish between indicators of progress (i.e., interim deliverables) and actual progress.
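Effort bias and variation can be computed in several ways. The sketch below uses one simple convention, assumed here rather than taken from the white paper: the relative error of each item is (actual − estimate) / estimate, bias is the mean of those errors, and variation is their population standard deviation.

```python
from statistics import mean, pstdev


def relative_errors(estimates, actuals):
    """Relative estimation error per work item; positive means underestimated."""
    return [(a - e) / e for e, a in zip(estimates, actuals)]


def effort_bias(estimates, actuals):
    """Average relative error: a systematic tendency to under- or overestimate."""
    return mean(relative_errors(estimates, actuals))


def effort_variation(estimates, actuals):
    """Spread of the relative errors: how consistent the estimates are."""
    return pstdev(relative_errors(estimates, actuals))
```

A team with a large bias but small variation is predictable once the bias is corrected for. A team with a small bias but large variation is harder to plan around, which is why both measures are worth tracking.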

Story points can be a leading indicator. As a program populates a burn-up or burndown chart showing completed story points, this indicates that work is being completed. It provides a leading indication of future software production. However, work performed to complete individual stories or sprints is not guaranteed to generate working software. From the PM perspective, only completed software products that satisfy all conditions for done are true measures of progress (i.e., working software).

A common problem in the multi-pipeline scenario, especially across organizational boundaries, is the achievement of coordination events (milestones). Programs should not only independently monitor the schedule performance of each pipeline to determine that work is progressing toward key milestones (usually requiring integration of outputs from multiple pipelines), but also verify that the work is truly complete.

In addition to tracking the schedule for the operational software, the DevSecOps tools can provide metrics for related software activities. Software for support items such as trainers, program-specific support equipment, and data analysis can be vital to the program’s overall success. The software for all the system components should be developed in the DevSecOps environment so that their progress can be tracked and any dependencies recognized, thereby providing a clearer schedule for the program as a whole.

In the DoD, understanding when capabilities will be completed can be critical for scheduling follow-on activities such as operational testing and certification. In addition, systems often must interface with other systems in development, and understanding schedule constraints is important. Using data from the DevSecOps pipeline allows DoD PMs to better estimate when the capabilities under development will be ready for testing, certification, integration, and fielding.

Performance

Functional performance is critical in making decisions regarding the priority of capabilities and features in an Agile environment. Understanding the required level of performance of the software being developed can allow informed decisions on which capabilities to continue developing and which to reassess. The concept of fail fast cannot be successful unless you have metrics to quickly inform the PM (and the team) when an idea leads to a technical dead end.

A necessary condition for a capability delivery is that all work items required for that capability have been deployed through the pipeline. Delivery alone, however, is insufficient to consider a capability complete. A complete capability must also satisfy the specified requirements and meet the needs in the intended environment. The development pipeline can provide early indicators for technical performance. Technical performance is normally validated by the customer. Nonetheless, technical performance includes indicators that can be measured through metrics available in the DevSecOps pipeline.

A first way to measure technical performance is through test results, which can be collected using modeling and simulation runs or through various levels of testing within the pipeline. If automated testing has been implemented, tests can be run with every build. With multiple pipelines, these results can be aggregated to give decision makers insight into test-passage rates at different levels of testing.

A second way to measure technical performance is to ask users for feedback after sprint demos and end-of-increment demos. Feedback from these demos can provide valuable information about the system performance and its ability to meet user needs and expectations.

A third way to measure technical performance is through specialized testing in the pipeline. Stress testing that evaluates requirements for key performance parameters, such as total number of users and response time with maximum users, can help predict system capability when deployed.
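The aggregation of test results across pipelines mentioned above can be sketched as follows. The record layout (pipeline name, test level, tests passed, tests run) is an assumed export format, not one prescribed by any particular tool.

```python
from collections import defaultdict


def pass_rates_by_level(results):
    """Aggregate test-passage rates across pipelines for each test level.

    results: iterable of (pipeline, level, passed, total)
    Returns {level: passed / total} pooled over all pipelines.
    """
    totals = defaultdict(lambda: [0, 0])  # level -> [passed, total]
    for _pipeline, level, passed, total in results:
        totals[level][0] += passed
        totals[level][1] += total
    return {level: p / t for level, (p, t) in totals.items() if t > 0}
```

Pooling counts, rather than averaging per-pipeline rates, weights each pipeline by the number of tests it runs. A program could reasonably choose the other convention if small pipelines should count equally.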

Quality

Poor-quality software can affect both performance and long-term maintenance of the software. In addition to functionality, there are many quality attributes to consider based on the domain and requirements of the software. Additional performance factors become more prominent in a pipeline-of-pipelines environment. Interoperability, agility, modularity, and compliance with interface specifications are a few of the most obvious ones.

The program must be satisfied that the development uses effective methods, that issues are identified and remediated, and that the delivered product has sufficient quality for not just the primary delivering pipeline but for all upstream pipelines as well. Before completion, individual stories must pass through a DevSecOps toolchain that includes several automated activities. In addition, the overall workflow includes tasks, design, and reviews that can be tracked and measured for the entire PoP.

Categorizing work items is important to account not only for work that builds features and capability, but also for work that is often considered overhead or support. Mik Kersten uses feature, bug, risk item, and technical debt. We would add adaptation.

The work type balance can provide a leading measure of program health. Each work item is given a work type category, an estimated cost, and an actual cost. For the completed work items, the portion of work in each category can be compared to plans and baselines. Variance from the plan or unexpected drift in one of the measures can indicate a problem that should be investigated. For example, an increase in bug work suggests quality problems, while an increase in technical-debt issues can signal design or architectural deficiencies that are not being addressed.
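A minimal sketch of the comparison against a baseline follows. It computes each category's share of actual cost for completed work items and flags categories whose share differs from the baseline by more than a tolerance. The 10-percentage-point default tolerance and the baseline shares used in the usage note are illustrative assumptions.

```python
def work_type_shares(items):
    """Share of actual cost per work type.

    items: iterable of (work_type, actual_cost) for completed work items
    """
    totals = {}
    for work_type, cost in items:
        totals[work_type] = totals.get(work_type, 0.0) + cost
    grand_total = sum(totals.values())
    if not grand_total:
        return {}
    return {k: v / grand_total for k, v in totals.items()}


def drift_flags(shares, baseline, tolerance=0.10):
    """Return {work_type: delta} where the share drifts beyond the tolerance.

    tolerance is an assumed threshold; a program would set its own.
    """
    flags = {}
    for work_type in set(shares) | set(baseline):
        delta = shares.get(work_type, 0.0) - baseline.get(work_type, 0.0)
        if abs(delta) > tolerance:
            flags[work_type] = delta
    return flags
```

With completed work of 50 feature, 30 bug, and 20 technical debt against a planned split of 70/15/15 percent, the feature and bug categories are flagged: feature work is running below plan and bug work above it.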

Typically, a DevSecOps environment includes one or more code-analysis applications that automatically run daily or with every code commit. These analyzers output weaknesses that were discovered. Timestamps from analysis execution and code commits can be used to infer the time delay that was introduced to address the issues. Issue density, using physical size, functional size, or production effort, can provide a first-level assessment of the overall quality of the code. Large lead times for this stage indicate a high cost of quality. A static scanner can also identify issues with design changes in cyclomatic or interface complexity and may predict technical debt. For a PoP, analyzing the upstream and downstream results across pipelines can provide insight into how effective quality programs are on the final product.

Automated builds support another indicator of quality. Build issues usually involve inconsistent interfaces, obsolete libraries, or other global inconsistencies. Lead time for builds and number of failed builds indicate quality failures and may predict future quality issues. By using the duration of a zero-defect build as a baseline, the build lead time provides a way to measure the build rework.
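One way to read the baseline comparison is that build rework is the portion of build lead time in excess of the zero-defect build duration. The sketch below assumes that interpretation; the units (minutes) and the input layout are hypothetical.

```python
def build_rework(build_lead_times, zero_defect_baseline):
    """Rework per build: lead time in excess of a zero-defect build.

    build_lead_times: elapsed time from first attempt to a passing build
    zero_defect_baseline: duration of a build that passes on the first try
    """
    return [max(0.0, t - zero_defect_baseline) for t in build_lead_times]


def failed_build_rate(outcomes):
    """Fraction of build attempts that failed.

    outcomes: list of booleans, True for a passing build
    """
    if not outcomes:
        return 0.0
    return sum(1 for passed in outcomes if not passed) / len(outcomes)
```

For lead times of 12, 30, and 45 minutes against a 12-minute baseline, the rework is 0, 18, and 33 minutes, respectively.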

For PoPs, build time following integration of upstream content directly measures how well the individual pipelines collaborated. Test capabilities within the DevSecOps environment also provide insight into overall code quality. Comparing defects found during testing with defects found after deployment can help evaluate the overall quality of the code and of the development and testing processes.
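A simple way to express the testing-versus-deployment comparison is a containment ratio: the fraction of all known defects that were caught before deployment. The function name and the choice of ratio are assumptions made for illustration, not a metric defined in the white paper.

```python
def defect_containment(found_in_test, found_after_deployment):
    """Fraction of known defects caught by testing before deployment."""
    total = found_in_test + found_after_deployment
    if total == 0:
        return None  # no defects recorded yet, so the ratio is undefined
    return found_in_test / total
```

A ratio that falls over successive increments suggests that more defects are escaping to the field and that the testing process deserves a closer look.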

Risk

Risks generally threaten cost, schedule, performance, or quality. The PM needs information to assess the probability and impact of the risks if they are not managed, as well as possible mitigations (including the cost of the mitigations and the reduction in risk consequence) for each possible course of action. The risks involved in software development can result from inadequacy of the technical solution, supply-chain issues, obsolescence, software vulnerabilities, and issues with the DevSecOps environment and overall staffing.

Risk results from uncertainty and includes potential threats to the product capability and operational issues such as cyberattack, delivery schedule, and cost. The program must ensure that risks have been identified, quantified, and, as appropriate, tracked until mitigated. For the purposes of the PM, risk exposures and mitigations should be quantified in terms of cost, schedule, and technical performance.

Risk mitigations should also be prioritized, included among the work items, and scheduled. Effort applied to burning down risk is not available for development, so risk burndown must be explicitly planned and tracked. The PM should monitor the risk burndown and the ratio of risk costs to the overall period costs. Two separate burndowns should be monitored: the cost and the value (exposure). The cost burndown assures that risk mitigations have been adequately funded and executed. The value burndown indicates actual reduction in risk level.
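The two burndowns can be tracked side by side, as in the sketch below. It assumes that each increment reports how much mitigation cost was spent and how much exposure was retired; both the input layout and the function names are hypothetical.

```python
def risk_burndown(initial_exposure, planned_mitigation_cost, increments):
    """Track cost and value (exposure) burndowns per increment.

    increments: list of (mitigation_cost_spent, exposure_retired)
    Returns a list of (remaining_mitigation_budget, remaining_exposure).
    """
    rows = []
    remaining_budget = planned_mitigation_cost
    remaining_exposure = initial_exposure
    for spent, retired in increments:
        remaining_budget -= spent
        remaining_exposure -= retired
        rows.append((remaining_budget, remaining_exposure))
    return rows


def risk_cost_ratio(mitigation_cost, period_cost):
    """Share of the period's total cost that went to risk mitigation."""
    return mitigation_cost / period_cost
```

If the budget line falls while the exposure line stays flat, mitigation money is being spent without reducing risk, which is the situation the separate value burndown is meant to expose.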

Development teams may assign specific risks to capabilities or features. Development-team risks are usually discussed during increment planning. Risk mitigations added to the work items should be identified as risk, and the totals should be included in reports to the PM.

Other Areas of Concern to the Program Manager

In addition to the traditional PM responsibilities of making decisions related to cost, schedule, performance, and risk, the PM must also consider additional contributing factors when making program decisions, especially with respect to software development. Each of these factors can affect cost, schedule, and performance.

- Organization/staffing: PMs need to understand the organization and staffing of both their own program management office (PMO) team and the contractor’s team (including any subcontractors or government personnel on those teams). Obtaining this understanding is especially important in Agile or Lean development. The PMO and users need to provide subject-matter experts to the developing organization to ensure that the development is meeting the users’ needs and expectations. Users can include operators, maintainers, trainers, and others. The PMO also needs to involve appropriate staff with specific skills in Agile events and in reviewing the artifacts developed.

- Processes: It is important for a PM to ensure that PMO, contractor, and supplier processes are defined and repeatably executed. In single pipelines, all program partners must understand the processes and practices of the upstream and downstream DevSecOps activities, including coding practices and standards and the pipeline tooling environments. For multi-pipeline programs, process inconsistencies (e.g., in the definition of done) and differences in the contents of software deliverables can create massive integration issues, with both cost and schedule impacts.

- Stability: In addition to tracking metrics for items like staffing, cost, schedule, and quality, a PM also needs to know whether these areas are stable. Even if some metrics are positive (for example, the program is below cost), trends or volatility can point to possible issues in the future if there are wide swings in the data that are not explained by program circumstances. In addition, stability in requirements and long-term feature prioritization may also be important to track. While agility encourages changes in priorities, the PM needs to understand the costs and risks incurred. Moreover, the Agile principle to fail fast can increase the rate of learning the software’s strengths and weaknesses. These changes are a normal part of Agile development, but the overall stability of the Agile process must be understood by the PM.

- Documentation: The DoD requirement for documentation of acquisition programs challenges the PM to balance that requirement against the Agile practice of avoiding non-value-added documentation. It is important to capture necessary design, architecture, coding, integration, and testing knowledge in a manner that is useful to engineering staff responsible for software sustainment while also meeting DoD documentation requirements.
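For the stability concern in the list above, one simple volatility indicator is the coefficient of variation (standard deviation divided by mean) of a metric over recent reporting periods. This is only one of several possible measures, and the 0.25 threshold below is an arbitrary illustrative value, not a recommendation from the white paper.

```python
from statistics import mean, pstdev


def coefficient_of_variation(values):
    """Relative volatility of a metric across reporting periods."""
    average = mean(values)
    if average == 0:
        return None  # undefined when the metric averages zero
    return pstdev(values) / abs(average)


def is_volatile(values, threshold=0.25):
    """Flag a metric whose swings exceed an assumed threshold."""
    cv = coefficient_of_variation(values)
    return cv is not None and cv > threshold
```

A flagged metric is a prompt to ask whether program circumstances explain the swings, not a verdict that something is wrong.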

Creating Dashboards from Pipelines to Identify Risks

Although the amount of data available from multiple pipelines can get overwhelming, there are tools available for use within pipelines that can aggregate data and create a dashboard of the available metrics. Pipelines can generate several different dashboards for use by developers, testers, and PMs. The key to developing a useful dashboard is to select appropriate metrics for making decisions, tailored to the needs of the specific program at various times during the lifecycle. The dashboard should change to highlight metrics related to those changing facets of program needs.

It takes time and effort to determine what risks will drive decisions and what metrics could inform those decisions. With instrumented DevSecOps pipelines, these metrics are more readily available, and many can be provided in real time without the need to wait for a monthly metrics report. Instrumentation can help the PM make decisions based on timely data, especially in large, complex programs with multiple pipelines.