“AI will not replace you. A person using AI will.”

-Santiago @svpino

In our work as advisors in software and AI engineering, we are often asked about the efficacy of large language model (LLM) tools like Copilot, GhostWriter, or Tabnine. Recent innovation in the building and curation of LLMs demonstrates powerful tools for the manipulation of text. By finding patterns in large bodies of text, these models can predict the next word to write sentences and paragraphs of coherent content. The concern surrounding these tools is strong, from New York schools banning the use of ChatGPT to Stack Overflow and Reddit banning answers and art generated from LLMs. While many applications are strictly limited to writing text, a few applications explore the patterns to work on code as well. The hype surrounding these applications ranges from adoration (“I’ve rebuilt my workflow around these tools”) to fear, uncertainty, and doubt (“LLMs are going to take my job”). In the Communications of the ACM, Matt Welsh goes so far as to declare we’ve reached “The End of Programming.” While integrated development environments have had code generation and automation tools for years, in this post I will explore what new advancements in AI and LLMs mean for software development.

Overwhelming Need and the Rise of the Citizen Developer

First, a little context. The need for software expertise still outstrips the available workforce. Demand for high-quality senior software engineers is increasing. The U.S. Bureau of Labor Statistics projects employment growth of 25 percent over the decade from 2021 to 2031. While the end of 2022 saw large layoffs and closures of tech companies, the demand for software is not slacking. As Marc Andreessen famously wrote in 2011, “Software is eating the world.” We are still seeing many industries disrupted by innovations in software, and improvements in software continue to open new opportunities in every industry. Gartner recently introduced the term citizen developer:

an employee who creates application capabilities for consumption by themselves or others, using tools that are not actively forbidden by IT or business units. A citizen developer is a persona, not a title or targeted role.

Citizen developers are non-engineers leveraging low/no-code environments to develop new workflows or processes from components developed by more traditional, professional developers.

Enter Large Language Models

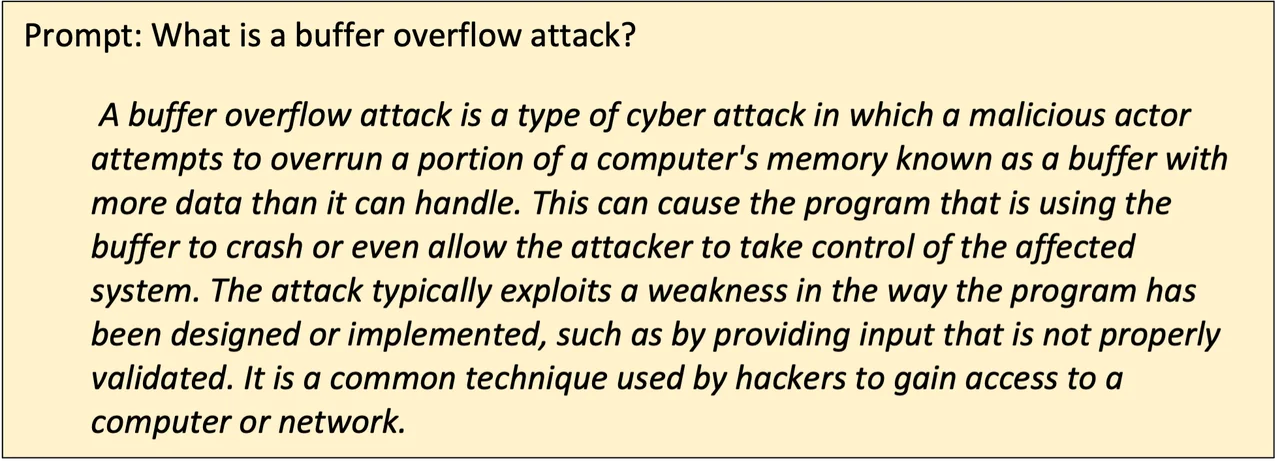

Large language models are neural networks trained on large datasets of text, ranging from terabytes to petabytes of information from the Internet. These datasets range from collections of online communities, such as Reddit, Wikipedia, and GitHub, to curated collections of well-understood reference materials. Using the Transformer architecture, the new models can build relationships between different pieces of data, learning connections between words and concepts. Using these relationships, LLMs are able to generate material based on different types of prompts. LLMs take inputs and can find related words, concepts, and sentences that they return as output to the user. The following examples were generated with ChatGPT:

LLMs are not really generating new thoughts as much as recalling what they have seen before in the semantic space. Rather than thinking of LLMs as oracles that produce content from the ether, it may be helpful to think of LLMs as sophisticated search engines that can recall and synthesize solutions from those they have seen in their training datasets. One way to think about generative models is that they take as input the training data and produce results that are the “missing” members of the training set.

For example, imagine you found a deck of playing cards with suits of horseshoes, rainbows, unicorns, and moons. If a few of the cards were missing, you would most likely be able to fill in the blanks from your knowledge of card decks. The LLM handles this process with massive amounts of statistics based on massive amounts of related data, allowing some synthesis of new code based on things the model might not have been trained on but can infer from the training data.
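To make the "statistics over seen text" idea concrete, here is a deliberately tiny sketch. It is not how a Transformer works: it is a bigram counter that predicts the next word purely from how often words followed each other in a made-up corpus. The corpus and the function names `train_bigrams` and `predict_next` are assumptions for illustration only.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Tiny, made-up training corpus (an assumption for illustration only).
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigrams(corpus)

print(predict_next(model, "the"))      # the most frequent follower of "the"
print(predict_next(model, "unicorn"))  # a word the model has never seen
```

A real LLM replaces the lookup table with a neural network over far more context, but the flavor is similar: the output is whatever the training data makes most likely, and a word the model has never encountered gives it nothing to go on.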

How to Leverage LLMs

In many modern integrated development environments (IDEs), code completion allows programmers to start typing out keywords or functions and complete the rest of the section with the function call or a skeleton to customize for their needs. LLM tools like Copilot allow users to start writing code and provide a smarter completion mechanism, taking natural language prompts written as comments and completing the snippet or function with what they predict to be relevant code. For example, ChatGPT can respond to the prompt “write me a UIList example in Swift” with a code example. Code generation like this can be more tailorable than many of the other no-code solutions being published. These tools can be powerful in workforce development, providing feedback for workers who are inexperienced or who lack programming skills. I think about this in the context of no-code tools: the solutions provided by LLMs aren’t perfect, but they are more expressive and more likely to provide reasonable inline explanations of intent.
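As a rough picture of comment-driven completion, the sketch below shows a natural language prompt written as a comment, followed by the kind of function body a completion tool might propose. The body here is hand-written for illustration, not captured output from Copilot or ChatGPT, and the name `most_common_words` is hypothetical.

```python
from collections import Counter

# Prompt, written as a comment the way a developer might type it:
# return the n most common words in a text, ignoring case


def most_common_words(text, n):
    # Hand-written stand-in for the kind of body a completion tool might suggest.
    words = text.lower().split()
    return [word for word, _ in Counter(words).most_common(n)]


print(most_common_words("a b a c a b", 2))
```

The developer still has to decide whether the suggestion matches the intent, for example whether punctuation should be stripped before counting.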

ChatGPT lowers the entry point for trying a new language or comparing solutions across languages by filling in gaps in knowledge. A junior engineer or inexperienced programmer could use an LLM in the same way they might approach a busy, experienced engineer mentor: asking for examples to get pointed in the right direction. As an experiment, I asked ChatGPT to explain a Python program I wrote for the Advent of Code two years ago. It gave me some prose. I asked for inline comments, and it gave me back a line-by-line explanation of what the program was doing. Not all of the explanations were clear, but neither are all the explanations offered by engineers. Compared to Google or Stack Overflow, ChatGPT has more affordances for clarifying questions. By asking it to provide more details or to target different audiences (“Explain this concept to a 7-year-old, to a 17-year-old, and to a graduate student”), a user can get ChatGPT to present the material in a way that allows better understanding of the code generated. This approach can allow new programmers or citizen developers to work fast and, if interested, dig deeper into why the program works the way it does.

Trusting LLMs

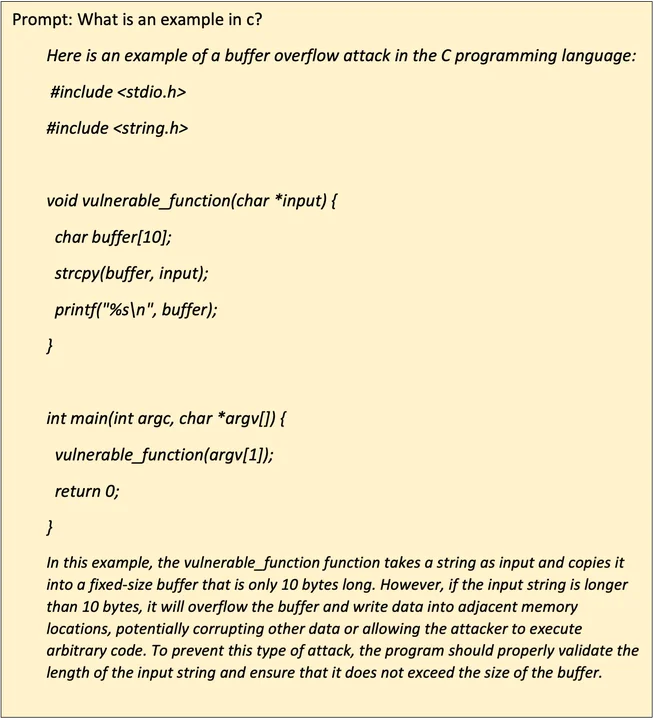

In recent news we have seen an explosion of interest in LLMs via the new OpenAI beta for ChatGPT. ChatGPT is based on the GPT-3.5 model, which has been enhanced with reinforcement learning to provide better quality responses to prompts. People have demonstrated using ChatGPT for everything from product pitches to poetry. In experiments with a colleague, we asked ChatGPT to explain buffer overflow attacks and provide examples. ChatGPT provided a good description of buffer overflows and an example of C code that was vulnerable to that attack. We then asked it to rewrite the description for a 7-year-old. The description was still reasonably accurate and did a nice job of explaining the concept without too many advanced concepts. For fun, we tried to push it further and asked for a haiku.

This result was interesting but gave us a little pause. A haiku is traditionally three lines in a five/seven/five pattern: five syllables in the first line, seven in the second, and five in the last. It turns out that while the output looked like a haiku, it was subtly wrong. A closer look reveals that the poem returned six syllables in the first line and eight in the second, easy to overlook for readers not well versed in haiku, but still wrong. Let’s return to how LLMs are trained. An LLM is trained on a large dataset and builds relationships between what it is trained on. It hasn’t been instructed on how to build a haiku: it has plenty of data labeled as haiku, but very little in the way of labeling syllables on each line. Through observation, the LLM has learned that haikus use three lines and short sentences, but it doesn’t understand the formal definition.
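A formal rule like five/seven/five is exactly the kind of thing that is easy to check mechanically even when it is hard to spot by eye. The sketch below is a minimal, approximate checker: it estimates syllables by counting vowel groups, which is a rough heuristic that will miscount some English words. The sample lines are hand-written for this illustration and are not the ChatGPT output discussed above.

```python
import re


def count_syllables(word):
    """Rough estimate: count vowel groups, discounting a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def line_syllables(line):
    """Sum the estimated syllables of every word on the line."""
    words = re.findall(r"[a-z]+(?:'[a-z]+)?", line.lower())
    return sum(count_syllables(word) for word in words)


def is_haiku(lines, pattern=(5, 7, 5)):
    """True only if there are three lines matching the syllable pattern."""
    return len(lines) == len(pattern) and all(
        line_syllables(line) == expected for line, expected in zip(lines, pattern)
    )


# Hand-written sample lines (assumptions for illustration only).
good = ["Code fills the buffer", "Too much data overflows", "The stack is broken"]
bad = ["Code fills up the buffer", "Too much data overflows now", "The stack is broken"]

print(is_haiku(good), [line_syllables(line) for line in good])
print(is_haiku(bad), [line_syllables(line) for line in bad])
```

The second sample has the same shape as the flawed poem: it looks like a haiku at a glance, but a mechanical count flags the extra syllables in the first two lines.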

Similar shortcomings highlight the fact that LLMs mostly recall information from their datasets: recent articles from Stanford and New York University point out that LLM-based solutions generate insecure code in many examples. This is not surprising; many examples and tutorials on the Internet are written in an insecure way to convey instruction to the reader, providing an understandable example if not a secure one. To train a model that generates secure code, we need to provide models with a large corpus of secure code. As experts will attest, a lot of code shipped today is insecure. Reaching human-level productivity with secure code is a fairly low bar because humans are demonstrably poor at writing secure code. There are people who copy and paste directly from Stack Overflow without thinking about the implications.
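To illustrate how a readable tutorial-style snippet can be insecure, here is a small sketch using SQL injection in Python's built-in `sqlite3` module. This is a substitute example chosen for brevity, not the buffer overflow in C discussed earlier and not code from the cited studies. The table, the function names, and the payload are all assumptions for illustration.

```python
import sqlite3


def find_user_insecure(conn, name):
    # Tutorial-style query built by string formatting: vulnerable to SQL injection.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_secure(conn, name):
    # Parameterized query: the driver treats `name` strictly as data.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


def make_demo_db():
    # In-memory database with two made-up users.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT)")
    conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])
    return conn


conn = make_demo_db()
payload = "x' OR '1'='1"  # classic injection string

print(len(find_user_insecure(conn, payload)))  # every row leaks
print(len(find_user_secure(conn, payload)))    # no user has this literal name
```

Both functions look equally plausible in a tutorial, and the insecure one is arguably easier to read, which is part of why so much of it ends up in training data.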

Where We Go from Here: Calibrated Trust

It is important to remember that we are just getting started with LLMs. As we iterate through the first versions and learn their limitations, we can design systems that build on early strengths and mitigate or guard against early weaknesses. In “Examining Zero-Shot Vulnerability Repair with Large Language Models,” the authors investigated vulnerability repair with LLMs. They demonstrated that, with a combination of models, they could successfully repair vulnerable code in multiple scenarios. Examples are starting to appear where developers are using LLM tools to develop their unit tests.

In the last 40 years, the software industry and academia have created tools and practices that help experienced and inexperienced programmers today generate robust, secure, and maintainable code. We have code reviews, static analysis tools, secure coding practices, and guidelines. All of these tools can be used by a team that is looking to adopt an LLM into their practices. Software engineering practices that support effective programming (defining good requirements, sharing understanding across teams, and managing the tradeoffs among the “-ities” such as quality, security, and maintainability) are still hard problems that require understanding of context, not just repetition of previously written code. LLMs should be treated with calibrated trust. Continuing to do code reviews, apply fuzz testing, and use good software engineering techniques will help adopters of these tools use them successfully and appropriately.
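As one small example of what calibrated trust can look like in practice, the sketch below fuzzes a pair of functions with random inputs and checks a round-trip property. The run-length encoder stands in for any code a team might receive from an LLM. It is hand-written here, and the names `rle_encode`, `rle_decode`, and `fuzz_round_trip` are hypothetical.

```python
import random
import string


def rle_encode(text):
    """Run-length encode a string into (character, count) pairs."""
    pairs = []
    for ch in text:
        if pairs and pairs[-1][0] == ch:
            pairs[-1] = (ch, pairs[-1][1] + 1)
        else:
            pairs.append((ch, 1))
    return pairs


def rle_decode(pairs):
    """Rebuild the original string from (character, count) pairs."""
    return "".join(ch * count for ch, count in pairs)


def fuzz_round_trip(trials=1000, seed=0):
    """Feed random strings through encode/decode; return the first failing input, if any."""
    rng = random.Random(seed)  # fixed seed so failures are reproducible
    alphabet = "ab" + string.digits
    for _ in range(trials):
        length = rng.randint(0, 20)
        text = "".join(rng.choice(alphabet) for _ in range(length))
        if rle_decode(rle_encode(text)) != text:
            return text
    return None


print(fuzz_round_trip())  # None means no counterexample was found
```

A harness like this does not prove the code correct, but it costs a few lines and catches the kind of edge-case mistakes that a plausible-looking suggestion can hide. Dedicated fuzzing and property-based testing tools go much further than this sketch.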