(Ole.CNX/Shutterstock)

The jury is still out on whether MLOps will survive as a discipline independent of DevOps. Some believe MLOps is real, and others don’t. But what about LLMOps? There are signs that practitioners need more operational control over large language models, including the inputs fed into them as well as the information they generate.

Professor Luis Ceze became one of the most outspoken critics of MLOps when the idea and the term began trending several years ago. MLOps isn’t needed, the University of Washington computer science professor argued, because the development and maintenance of machine learning models is not far removed from traditional software development. The technologies and techniques that traditional software developers use, grouped under the rubric of good ol’ fashioned DevOps, were sufficient for managing the machine learning development lifecycle.

“Why should we treat a machine learning model as if it were a special beast compared to any software module?” Ceze told Datanami in 2022 for the article “Birds Aren’t Real. And Neither Is MLOps.” “We shouldn’t be giving a name that has the same meaning of what people call DevOps.”

Since an LLM is a type of machine learning model, one might surmise that Ceze would be against LLMOps as a distinct discipline, too. But that is not the case. In fact, the founder and CEO of OctoML, which recently launched a new LLM-as-a-service offering, said a case can be made that LLMs are sufficiently different from classic machine learning that DevOps doesn’t cover them, and that something else is needed to keep LLM applications on track.

One of the key drivers of the LLMOps movement is the fact that users often stitch together multiple LLMs to create an AI application. Whether it’s question-answering over a pile of text, generating a custom story, or building a chatbot to answer customer questions, many (if not most) LLM applications will touch multiple foundational models, he said.

“Now that LLMOps is starting to become a thing, [it] touches a lot of topics,” Ceze said. “How do you think about prompt management? How do you manage quality? How do you stitch together different LLMs or different foundational models to have an actual feature with the properties that you want? All of this is emerging as a new way of thinking about how you stitch models together to make them behave how you want.”

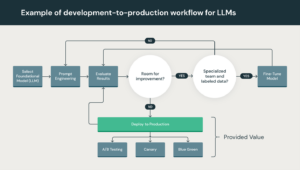

According to Databricks, the rapid rise of LLMs has created a need to define best practices for building, deploying, and maintaining these specialized models. Compared to traditional machine learning models, working with LLMs is different because they require much larger compute instances, they’re based on pre-existing models fine-tuned using transfer learning, they lean heavily on reinforcement learning from human feedback (RLHF), and they have different tuning goals and metrics, Databricks says in its LLMOps primer.

Other factors to consider in LLMs and LLMOps include prompt engineering and vector databases. Tools like LangChain have emerged to help automate the process of taking input from customers and converting it into prompts that can be fed into GPT and other LLMs. Vector databases have also proliferated thanks to their ability to store the pre-generated output from LLMs, which can then be fed back into the LLM prompt at runtime to provide a better and more customized experience, whether it’s a search engine, a chatbot, or another LLM use case.
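The retrieve-then-prompt pattern described above can be sketched in a few lines. This is a minimal illustration, not how any particular vector database or LangChain works: `embed`, `ToyVectorStore`, and `build_prompt` are hypothetical names, and the bag-of-words "embedding" is a toy stand-in for a real embedding model.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store; real vector databases index at scale."""

    def __init__(self) -> None:
        self._items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(store: ToyVectorStore, question: str, k: int = 1) -> str:
    """Fetch the closest stored text and place it in the prompt as context."""
    context = "\n".join(store.search(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


store = ToyVectorStore()
store.add("Refunds are processed within five business days.")
store.add("Our office is closed on public holidays.")
prompt = build_prompt(store, "how long do refunds take")
```

The resulting `prompt` string is what would be sent to the LLM; the model call itself is left out because it depends on the provider.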

While it’s easy to get started with LLMs and build a quick prototype, the shift into production deployment is another matter, Databricks says.

“The LLM development lifecycle consists of many complex components such as data ingestion, data prep, prompt engineering, model fine-tuning, model deployment, model monitoring, and much more,” the company says in its LLMOps primer. “It also requires collaboration and handoffs across teams, from data engineering to data science to ML engineering. It requires stringent operational rigor to keep all these processes synchronous and working together. LLMOps encompasses the experimentation, iteration, deployment and continuous improvement of the LLM development lifecycle.”

Ceze largely agrees. “A lot of the things we just take for granted in software engineering kind of goes out the window” with LLMs, he said. “When you write a piece of code and you run it, it doesn’t matter if you update say your Python version, or if you compile with a new compiler. You have an expected behavior that doesn’t change, right?

“But now, a prompt as part of something that you engineer, if it takes the model, even by doing some weight updates…you might actually make that prompt not work as well anymore,” he continued. “So managing all of that I think is really important. We’re just in the infancy of doing that.”
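One way to catch the kind of drift Ceze describes is to rerun a fixed set of prompt test cases whenever the underlying model changes. The sketch below is an assumption about how such a check might look, not a method attributed to Ceze or OctoML: `pass_rate`, `prompt_regressed`, and the two stub models are hypothetical, and the stubs stand in for real API calls to two model versions.

```python
from typing import Callable, Sequence

Model = Callable[[str], str]  # hypothetical interface: prompt in, completion out


def pass_rate(model: Model, template: str, cases: Sequence[tuple[str, str]]) -> float:
    """Fraction of cases where the expected text appears in the model's reply."""
    hits = sum(
        1
        for question, expected in cases
        if expected in model(template.format(question=question))
    )
    return hits / len(cases)


def prompt_regressed(
    old: Model,
    new: Model,
    template: str,
    cases: Sequence[tuple[str, str]],
    tolerance: float = 0.05,
) -> bool:
    """True if the new model scores worse than the old one by more than tolerance."""
    return pass_rate(old, template, cases) - pass_rate(new, template, cases) > tolerance


# Stub models standing in for two versions of the same hosted LLM.
def model_v1(prompt: str) -> str:
    return "yes" if "17" in prompt or "19" in prompt else "no"


def model_v2(prompt: str) -> str:
    return "no"  # simulates a weight update that broke the prompt


TEMPLATE = "Is {question} a prime number? Answer yes or no."
CASES = [("17", "yes"), ("19", "yes"), ("21", "no")]

regressed = prompt_regressed(model_v1, model_v2, TEMPLATE, CASES)
```

Substring matching is a deliberately crude scoring rule; a production check would need a scoring method suited to the task.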

Users often view the foundational models as unchanging, but they may be changing more than most people realize. For instance, a paper recently released by three researchers, including Matei Zaharia, a Databricks co-founder and assistant professor at Stanford University, found a decent amount of variance over time in the performance of GPT-3.5 and GPT-4. The performance of GPT-4 on math problems, in particular, declined 30% between the version OpenAI released in April and the one it released in June, the researchers found.

When you consider all of the other moving parts in building AI applications with LLMs, from the shifting foundational models to the different words people use to prompt a response out of them, and everything in between, it becomes clear that there’s ample room for error to be introduced into the equation.

Luis Ceze is a professor of computer science at the University of Washington and the CEO and co-founder of OctoML

Going forward, it’s not clear how model operations will evolve for LLMs. LLMOps might be a temporary phenomenon that goes away as soon as the developer community rallies around a core set of established APIs and behaviors with foundational models, Ceze said. Maybe it gets lumped into DevOps, like MLOps before it.

“These ensemble of models are going to have more and more reliable behavior. That’s how it’s trending,” Ceze said. “People are getting better at combining output from each of those sources and producing high-reliability output. That’s going to be coupled with people realizing that this is based on functionality that is not always going to be 100%, just like software isn’t today. So we’re going to get good at testing it and building the right safeguards for it.”

The catch is, we’re not there yet. ChatGPT is not even a year old, and the whole GenAI industry is still in its infancy. The business value of GenAI and LLMs is being poked and prodded, and enterprises are searching for ways to put this to use. There’s a sense that this is the AI technology we’ve all been waiting for, but how best to harness it still has to be worked out, hence the idea that LLMOps is real.

“Right now, it feels like a special thing, because an API whose command is an English sentence versus a structured, well-defined call is something that people are building with,” Ceze said. “But it’s brittle. We’re experiencing some of the issues right now and that’s going to have to be sorted out.”

Related Items:

Prompt Engineer: The Next Hot Job in AI

Birds Aren’t Real. And Neither Is MLOps